State of QA 2025

Introduction

The objective of this report is to provide an overview of how companies at different growth stages, as well as large enterprises, approach Quality Assurance within their engineering processes. It highlights the flaws in these approaches, explains why traditional test automation tools often fall short, and describes how an AI-based strategy can drive efficiencies: reducing bugs, accelerating time to market, and ultimately improving customer experience.

This report focuses specifically on UI test automation and is intended for engineering leaders at growth-stage companies or enterprises who want to leverage artificial intelligence to improve their processes and build teams capable of delivering 10 times faster, without increasing headcount.

How businesses are solving QA today

We see four types of companies:

- Companies with QA teams (tech-driven and business-driven).

- Companies that use brute force testing with their employees.

- Companies without testing that rely on monitoring and rollback.

- Companies that make developers own testing (bug bashers and automators).

Companies with QA teams (tech-driven and business-driven)

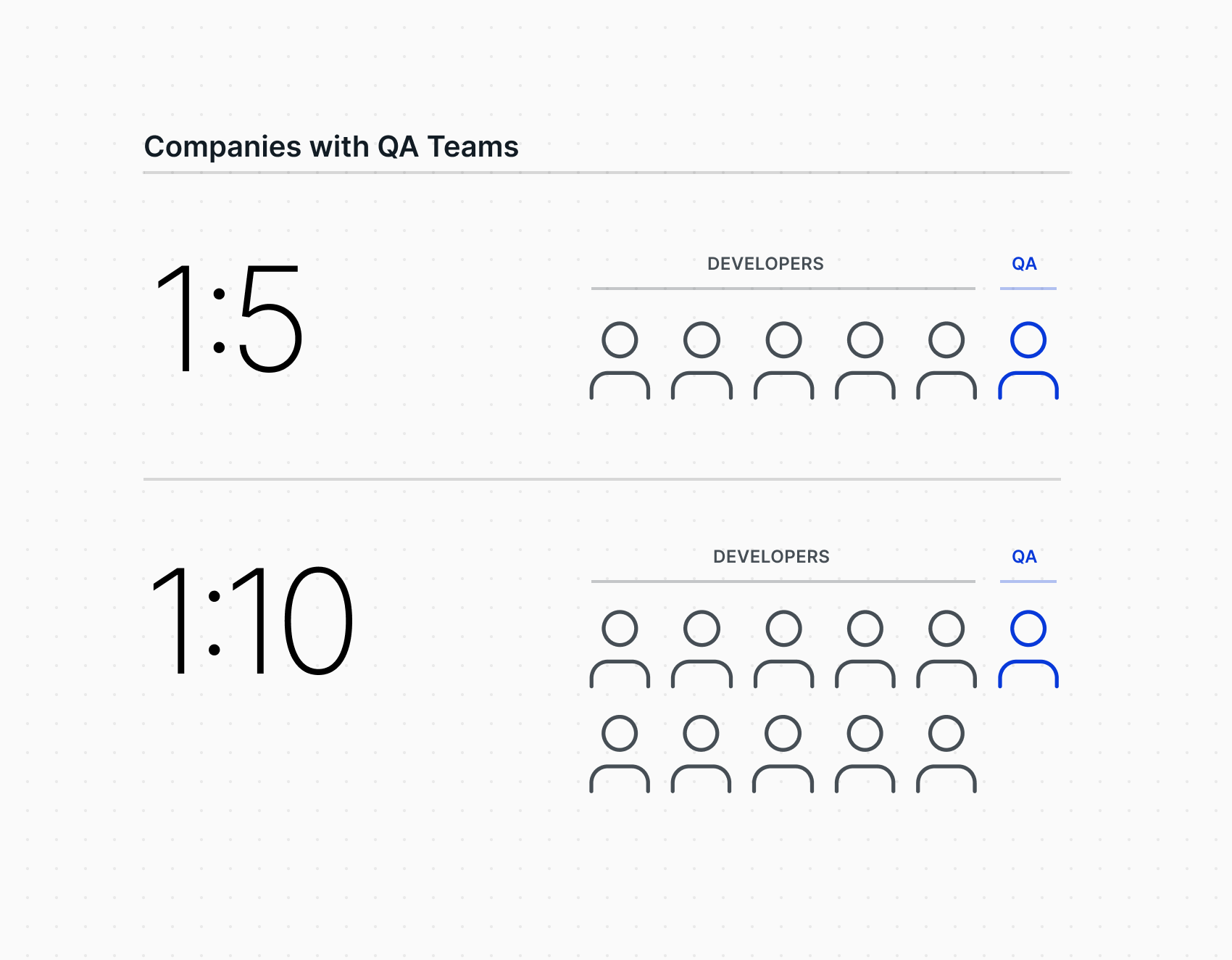

Companies with a dedicated QA team typically have a QA-to-developer ratio between 1:5 and 1:10.

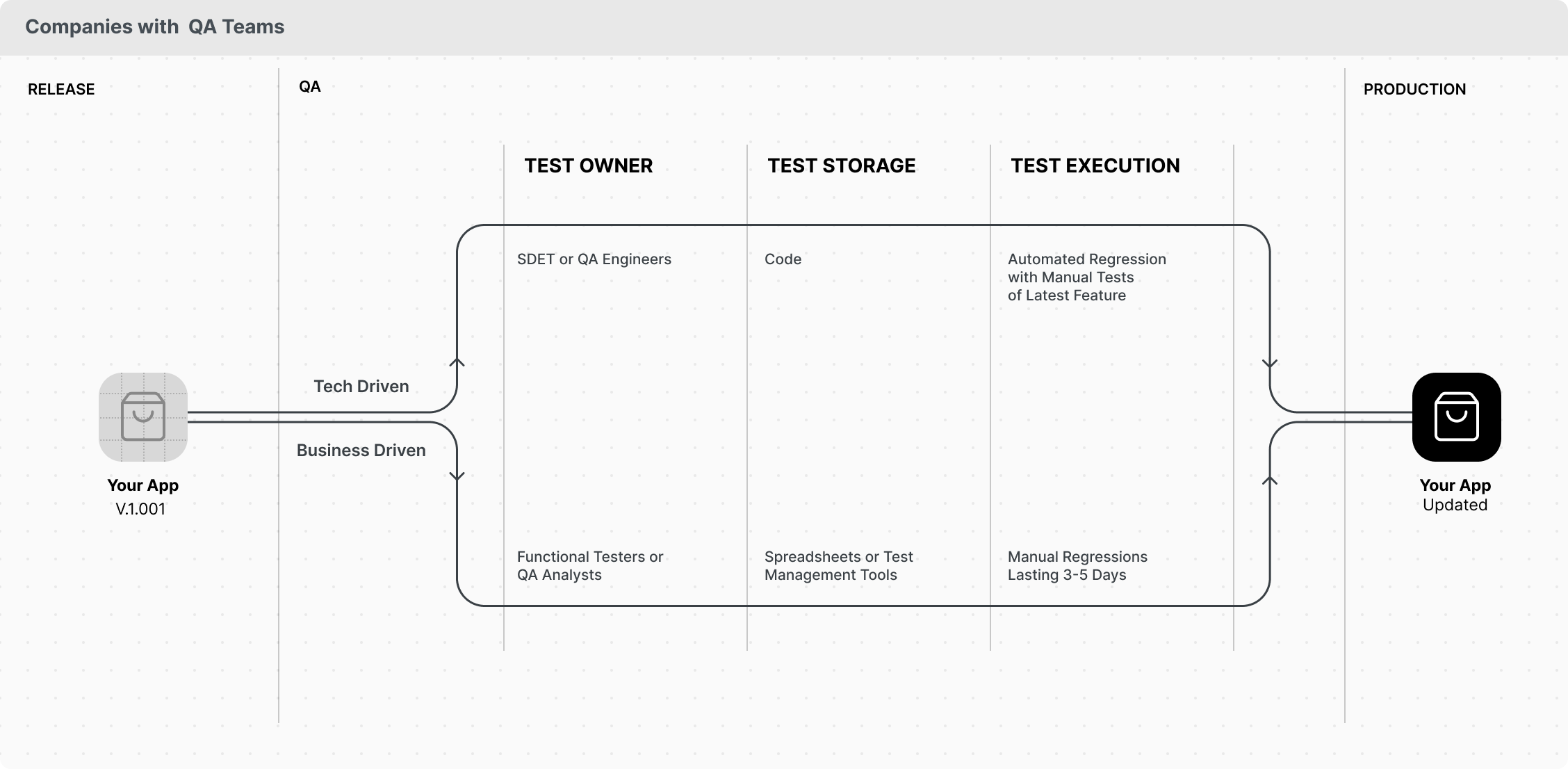

These companies can be further split into tech-native companies and more business-driven companies.

Business-driven companies usually belong to more traditional industries such as financial services, healthcare, and brick-and-mortar retail. In these industries, manual testing remains prevalent and automation coverage is very low. They rely on a so-called Functional Tester or QA Analyst to review the quality of developers' work before it is deployed to production. Test cases typically live in Google Sheets or in a Jira test management tool such as Zephyr, Xray, or QMetry, which accumulates thousands of test scenarios that are triggered manually for every new release.

Their release process usually looks like this: when a new request reaches the testing phase, a smoke test covering only key cases is performed first. If any bugs are found, the deployment is sent back to development for rework. If the smoke test passes, a full regression test follows. Because tests are executed manually and capacity is limited, this regression typically contains more detailed test cases, often focused on the module affected by the change. QA usually takes 3 to 5 days, regression testing is usually carried out every two weeks, and teams usually work in 15-day sprints. The result is a clear bottleneck: teams can neither ship to production quickly nor test thoroughly.

In more tech-native companies, QA Engineers or SDETs build platforms that automate testing with scripting frameworks such as Playwright, Cypress, or Selenium for web and Appium for mobile. These companies typically automate the regression of their main flows and test the latest feature manually. Once the latest feature is stable, they automate its tests and add them to the regression suite. We will look at the drawbacks of this strategy in the next chapter.

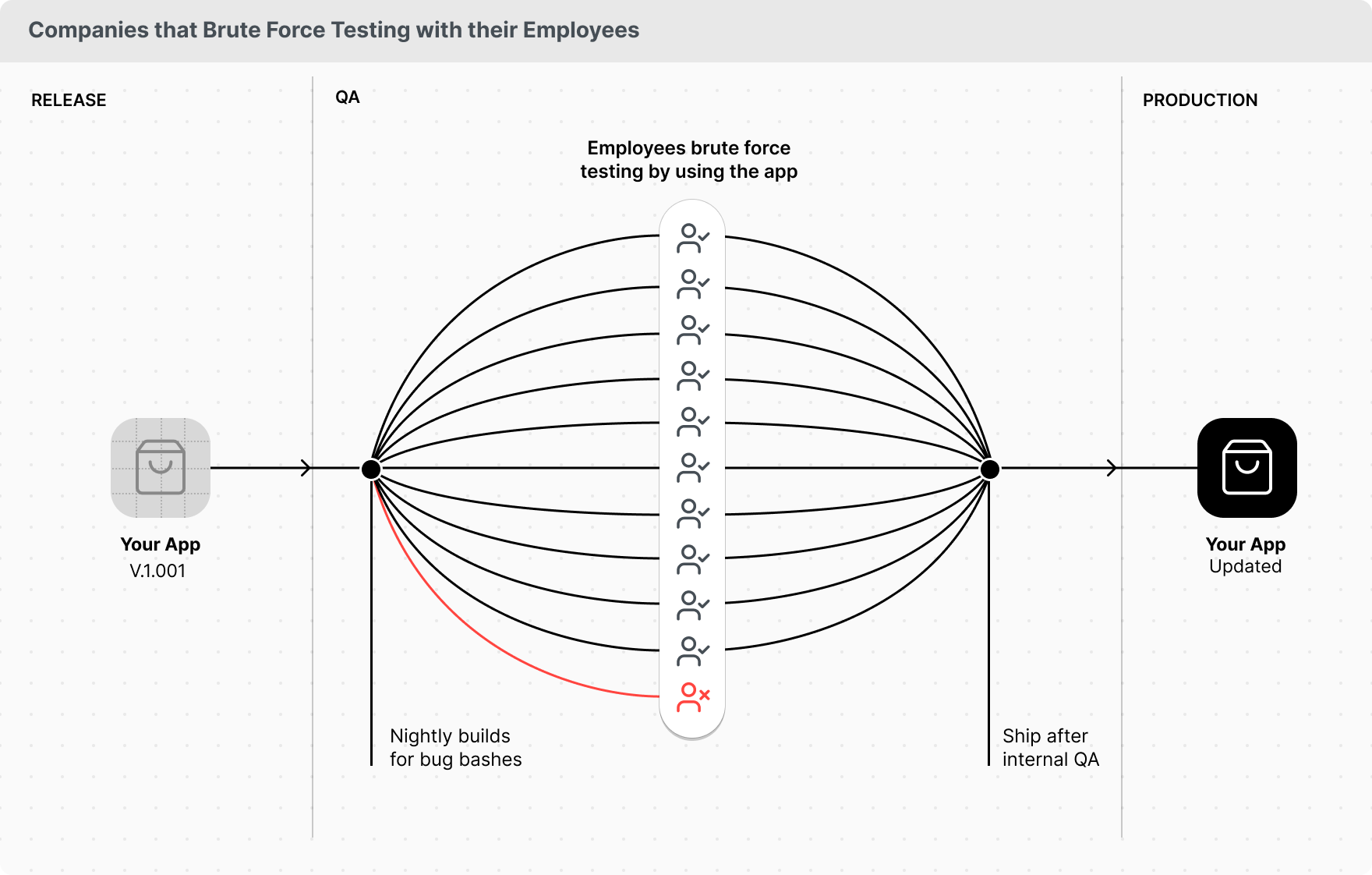

Companies that brute-force testing with their employees

There is also a rarer cohort of companies, usually B2C, whose employees are users of the product. These companies produce a nightly build, and employees test the latest release by simply using the product to find bugs. With a considerable number of employees, you can conduct extensive bug bashing and cover a large surface area. Food delivery apps and digital wallets are ideal for this strategy.

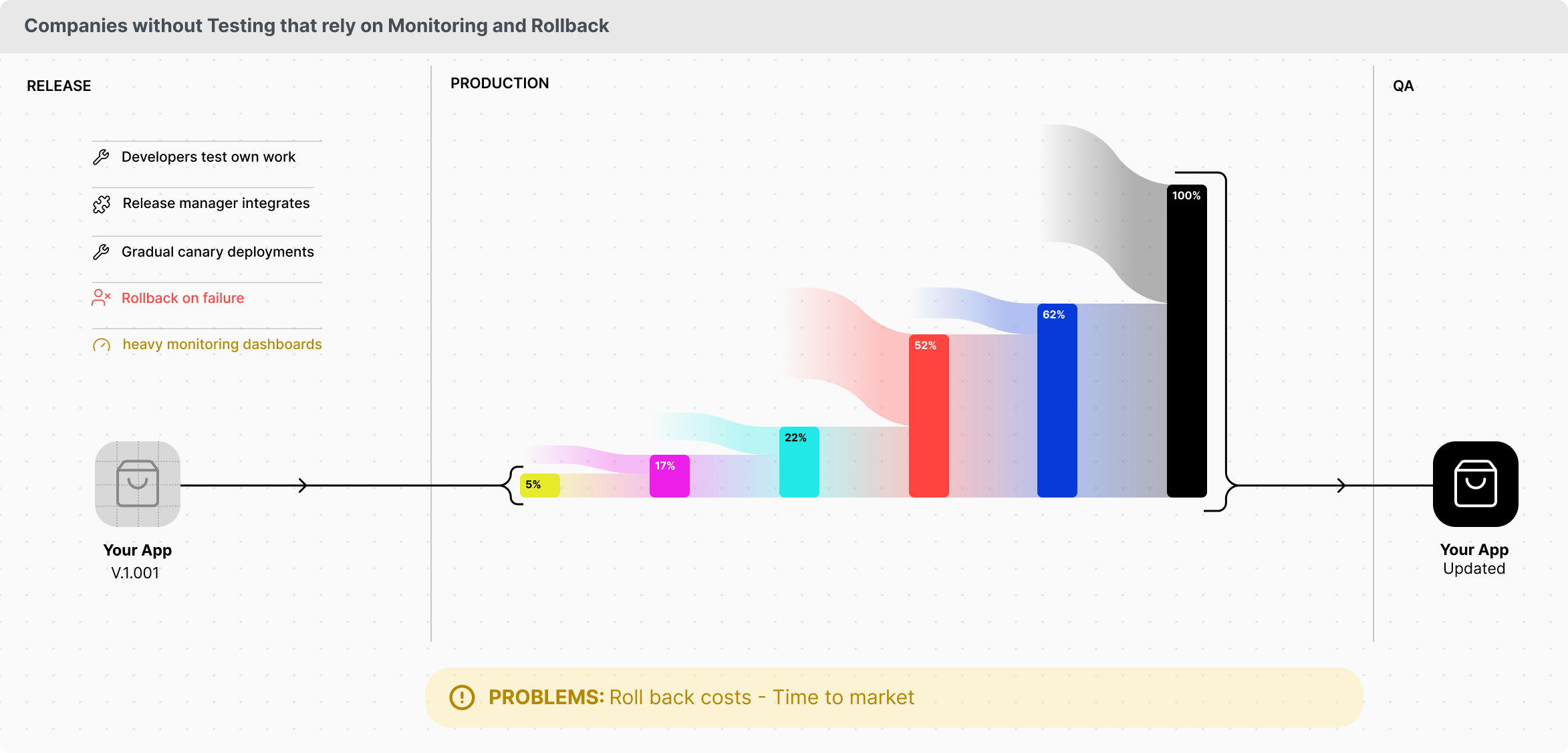

Companies without testing that rely on monitoring and rollback

Next, some highly technical companies have abandoned the burden of building complex testing frameworks. Instead, they accept that bugs may reach production and focus on minimizing their impact when they do. In this approach, each developer is accountable for the quality of their deliverables and tests their own work, while a release manager integrates changes and pushes deployments to production. Rather than releasing to all users at once, they use gradual rollouts (known as canary deployments) to limit exposure. Monitoring dashboards and an attentive support team quickly identify and address issues that affect only the subset of users on the new version. If problems arise, a rollback restores the previous version before the rollout is expanded. This strategy works well for B2C web applications, where bugs have a low cost for users, but is less suitable for B2B environments involving critical transactions, or for mobile apps, where bugs are more costly and rollbacks are more complex.
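The rollout-and-rollback loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any specific company's tooling: the stage percentages, the baseline error rate, and the `tolerance` threshold are hypothetical, and `observe` stands in for whatever monitoring source reports errors for the users on the new version.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical rollout plan: share of users exposed to the new version at each step.
ROLLOUT_STAGES = (0.01, 0.05, 0.25, 1.0)


@dataclass
class StageMetrics:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def run_canary(
    observe: Callable[[float], StageMetrics],
    baseline_error_rate: float,
    tolerance: float = 0.005,
    stages: tuple[float, ...] = ROLLOUT_STAGES,
) -> tuple[str, float]:
    """Expand the rollout stage by stage; roll back as soon as the new version's
    error rate exceeds the previous version's baseline by more than `tolerance`."""
    for share in stages:
        metrics = observe(share)  # metrics collected while `share` of users run the new version
        if metrics.error_rate > baseline_error_rate + tolerance:
            return ("rolled_back", share)  # restore the previous version for everyone
    return ("fully_released", stages[-1])


# Simulated monitoring data (hypothetical): the regression only shows up at 25% exposure.
healthy = lambda share: StageMetrics(requests=10_000, errors=20)
regressed = lambda share: StageMetrics(requests=10_000, errors=20 if share < 0.25 else 150)
print(run_canary(healthy, baseline_error_rate=0.002))    # ('fully_released', 1.0)
print(run_canary(regressed, baseline_error_rate=0.002))  # ('rolled_back', 0.25)
```

Comparing against the previous version's baseline, rather than against zero errors, keeps normal background noise from triggering rollbacks.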

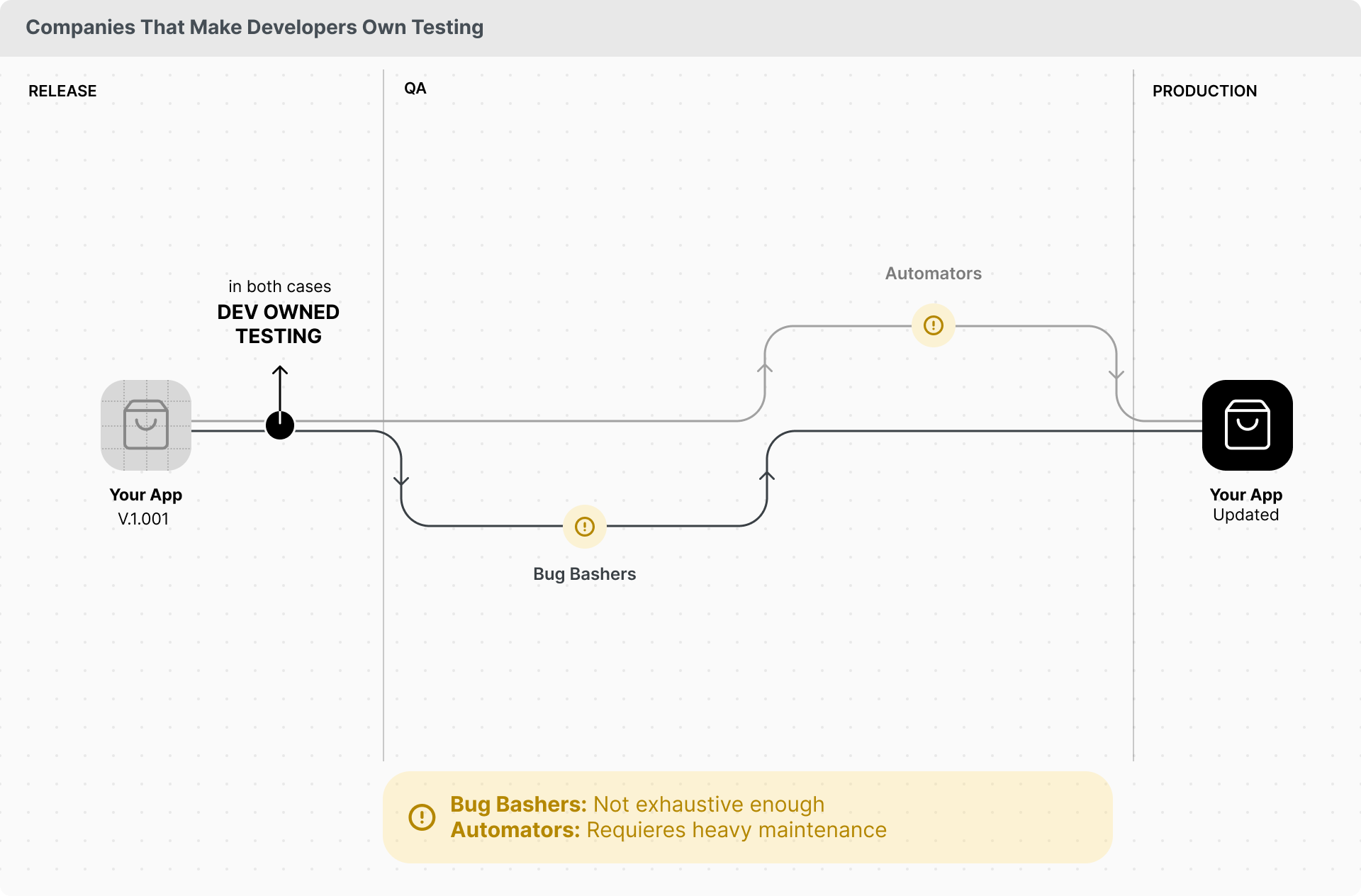

Companies that make developers own testing (bug bashers and automators)

Finally, we see companies that force developers to own testing. These can also be split into two subsets: bug bashers and automators. Bug bashers are companies that run bug-finding sessions, where developers spend valuable time manually testing flows before they go into production. This is a poor use of expensive engineering time that could be spent building the product.

Automators, on the other hand, are more aspirational than real. In this subset, developers build automated testing pipelines. At first the effort may seem reasonable, especially with AI tools like Cursor or Codeium, but maintaining the tests quickly becomes unmanageable. Just a couple of UI redesigns can break every test, turning the suite into a skippable linter that no one trusts and that no longer serves its intended purpose.

Why test automation with traditional tools is a lost battle

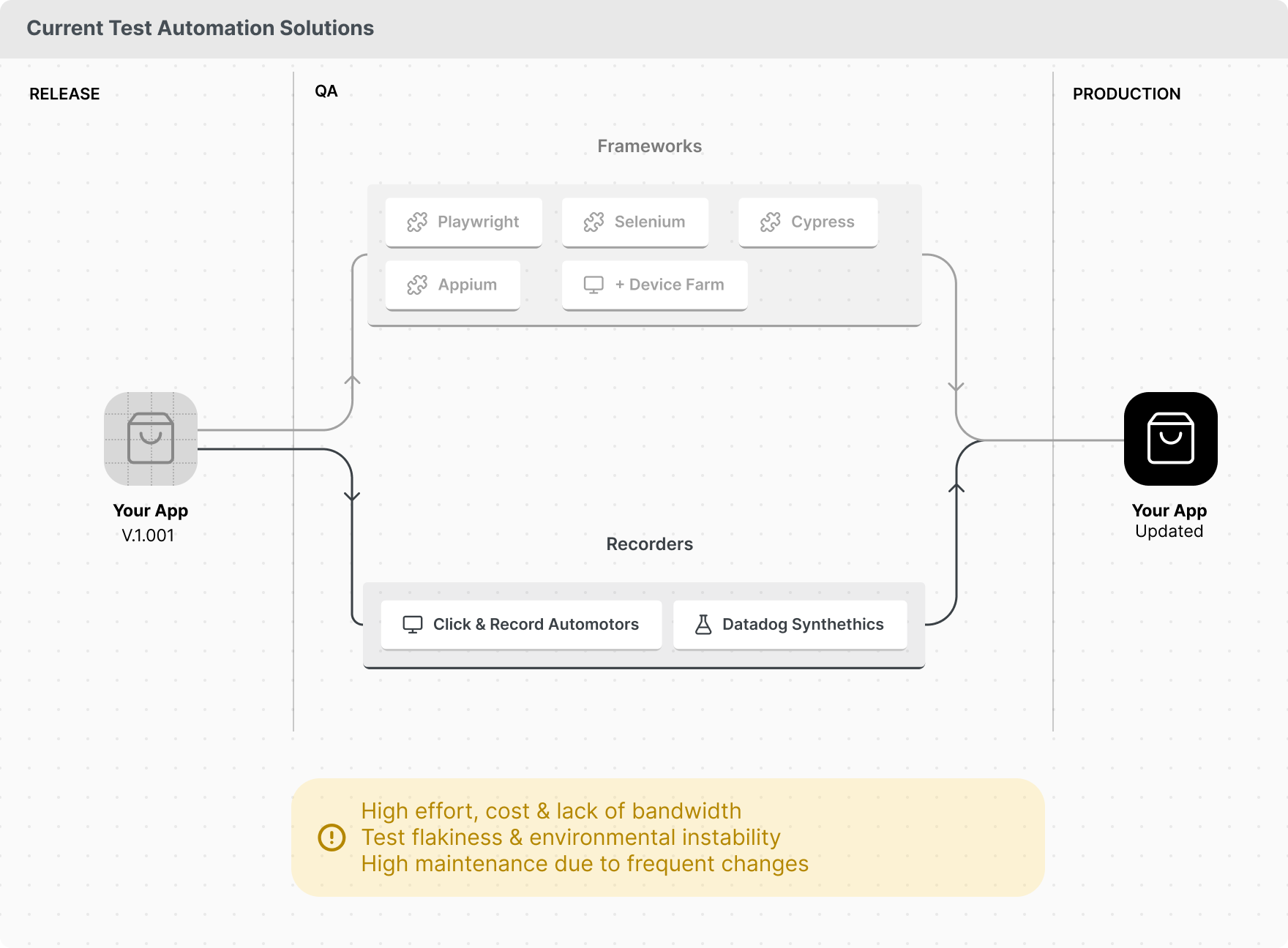

To make this case, we first need to understand how UI test automation is done today.

Anyone who has tried to build end-to-end tests has run into the same issue: the tests are so flaky that the effort required to maintain them is not worth the pain.

Some companies try it anyway and end up building complex frameworks on top of solutions like Playwright, Selenium, Cypress, or Appium, usually connected either to internal infrastructure or to device farms like BrowserStack or AWS Device Farm to run these tests.

Others rely on click-and-record solutions like Datadog Synthetics, but end up finding that they are only useful for happy paths and simple flows, as they break with every commit.

At the end of the day, every part of this is hard: building the tests is complicated, maintaining a proper testing infrastructure is just as complicated, and finding the time or talent to dedicate to both is equally challenging.

The three main reasons why test automation is a lost battle are:

- High Maintenance Burden Due to Frequent Changes

- Test Flakiness and Environmental Instability

- High Effort, Cost, and Lack of Bandwidth for Implementation

Let's examine each one individually to gain a deeper understanding.

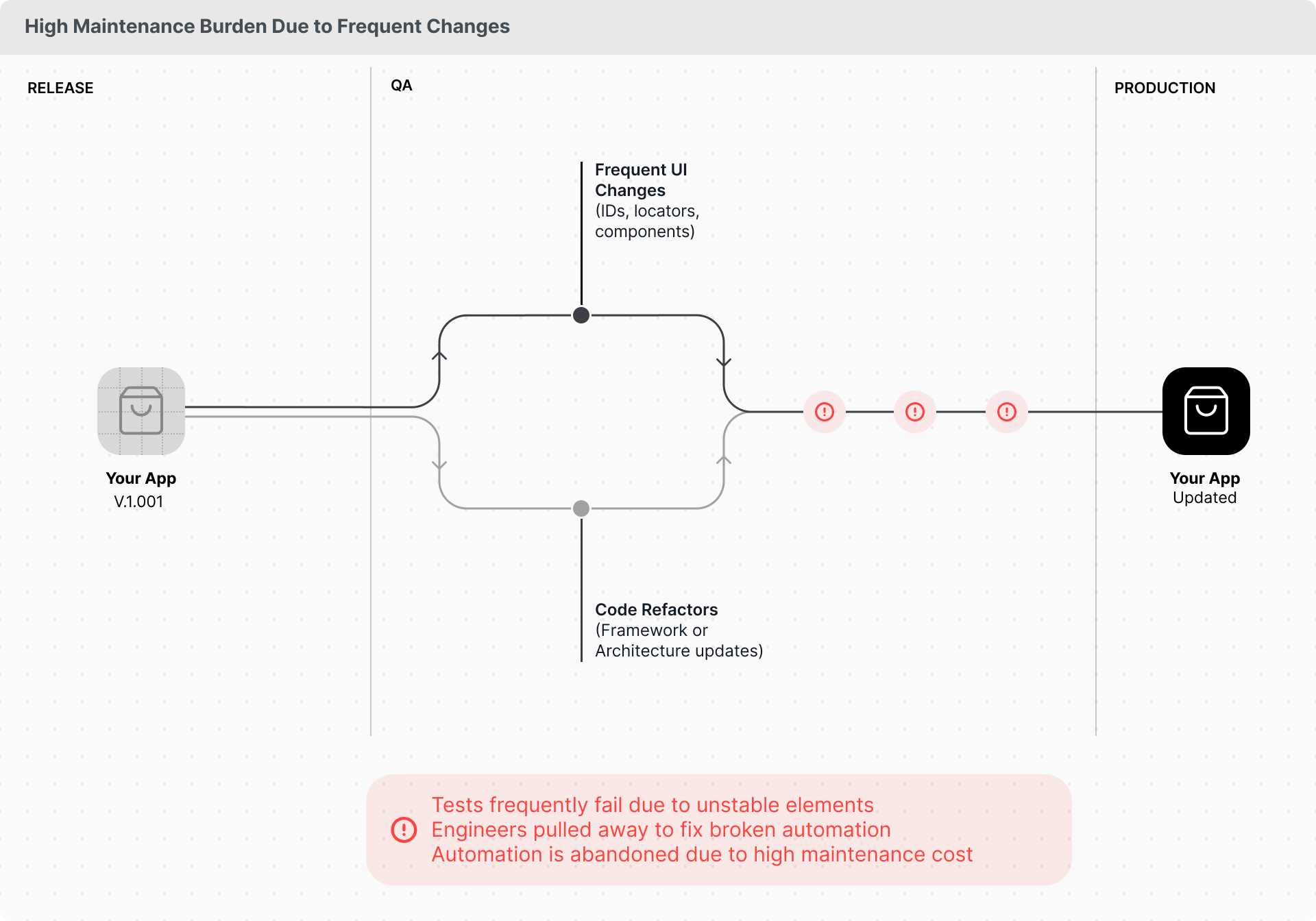

High Maintenance Burden Due to Frequent Changes

Automated test suites, especially UI end-to-end tests, require significant effort to maintain, because they often break when the application's user interface or underlying architecture changes. This problem is particularly acute in fast-paced startup or scaling environments, where changes are frequent. Changes to UI elements, such as the IDs or other attributes that locators depend on, force updates to test scripts, and broader architectural shifts or framework updates (such as a React refactor) can break large portions of the test suite. Some companies have even stopped automating because too much changes in each sprint. This constant need for updates diverts engineering time, making the investment in automation feel less worthwhile than manual testing or focusing on new features.

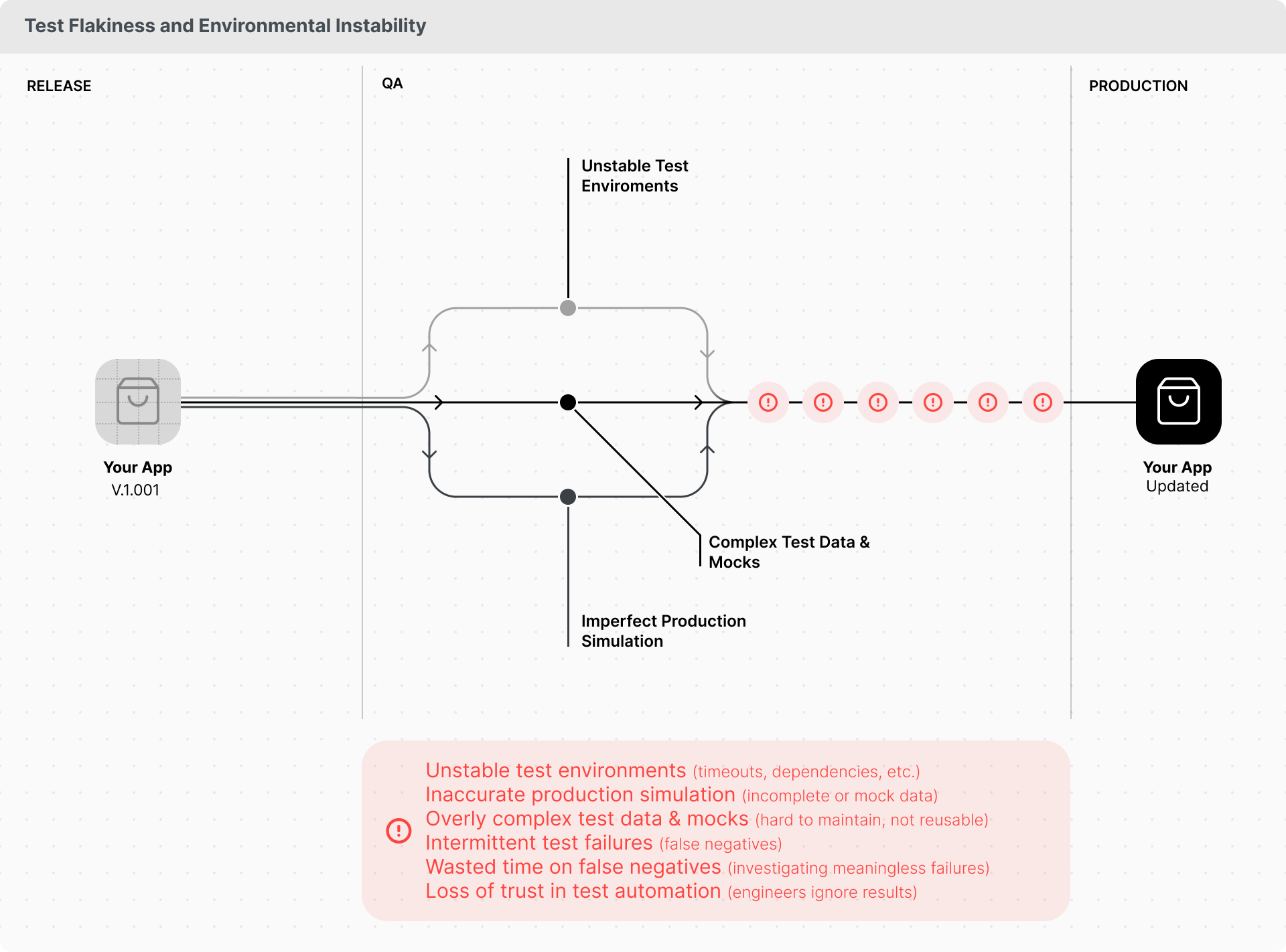

Test Flakiness and Environmental Instability

Automated tests frequently suffer from flakiness, where they pass or fail inconsistently without a clear defect in the application, making their results unreliable. This instability can be caused by various factors, including underlying infrastructure latency or unstable testing environments. A major pain point mentioned by multiple companies is the difficulty in setting up and maintaining stable, realistic test environments. These environments often don't perfectly replicate production, and the process of generating reliable test data (data seeding) or mocking external services is complex and time-consuming. Test flakiness leads to time wasted on investigating false positives, reduces confidence in the test suite, and ultimately hinders the ability to rely on automation for critical release decisions.
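A common heuristic for separating flaky tests from real defects is to re-run a failing test several times against the same build and environment and check whether the failure reproduces. The sketch below illustrates that heuristic only; it is not a description of how any particular tool works, and the attempt count of 5 and the simulated outcomes are arbitrary assumptions.

```python
from typing import Callable


def classify_test(run_test: Callable[[], bool], attempts: int = 5) -> str:
    """Re-run one test several times against the same build and environment.

    Returns:
      "pass"  - every attempt passed
      "fail"  - every attempt failed: the failure reproduces, so it is likely a real defect
      "flaky" - mixed results: the failure does not reproduce reliably
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    results = [run_test() for _ in range(attempts)]
    if all(results):
        return "pass"
    if not any(results):
        return "fail"
    return "flaky"


# Simulated outcomes standing in for real test runs (hypothetical data).
outcomes = iter([True, False, True, True, False])
print(classify_test(lambda: next(outcomes)))     # flaky
print(classify_test(lambda: False, attempts=3))  # fail
```

Retries trade runtime for confidence: each extra attempt makes a consistent "fail" more trustworthy, but it also multiplies the cost of every failing test in the suite.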

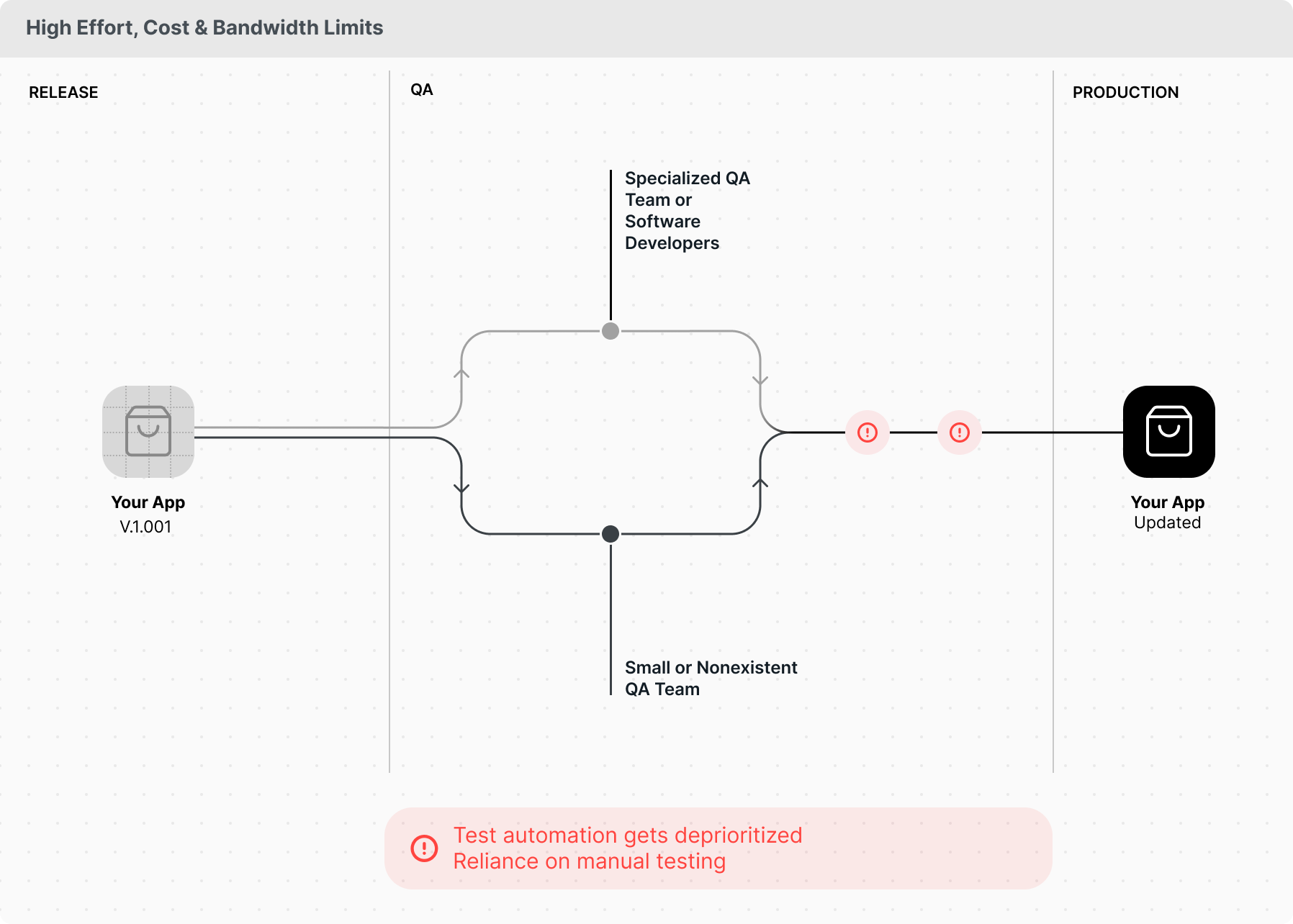

High Effort, Cost, and Lack of Bandwidth for Implementation

Building a comprehensive automated test suite from scratch, or significantly increasing coverage, is a substantial undertaking that many companies, especially those in earlier stages or with lean engineering teams, find difficult to prioritize or staff. It requires significant time and technical expertise from developers, or hiring specialized QA automation engineers. Most companies have small QA teams or none at all, and upskilling manual QAs or expecting developers to take on this responsibility is a slow process. The immediate pressure to deliver features and move quickly often overrides the investment needed for proactive quality measures such as automation. This lack of capacity and prioritization means that even when the value of automation is recognized, resources are often allocated elsewhere, leaving automation coverage low and teams dependent on slower, less comprehensive manual processes.

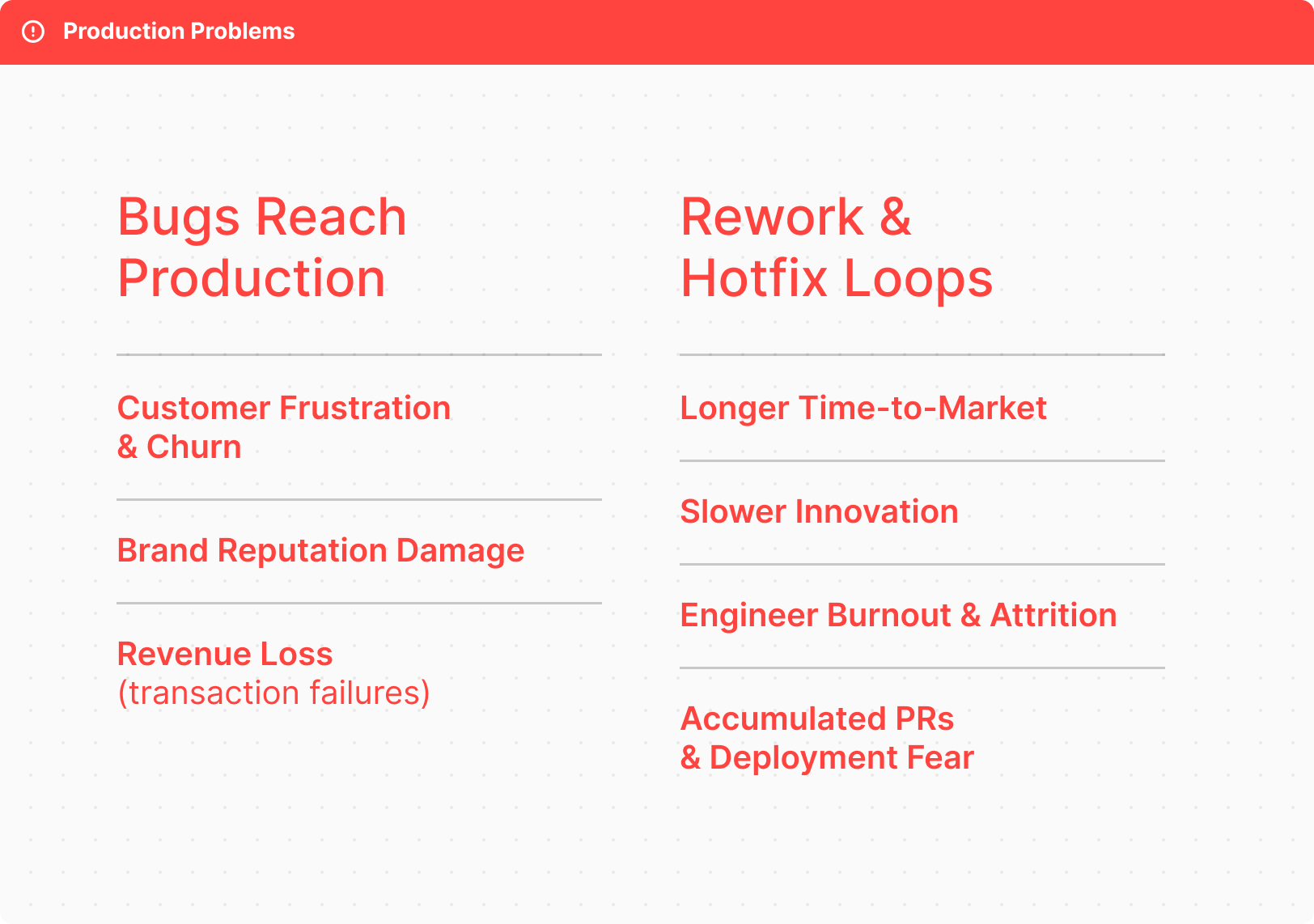

The hidden cost of a bad QA process

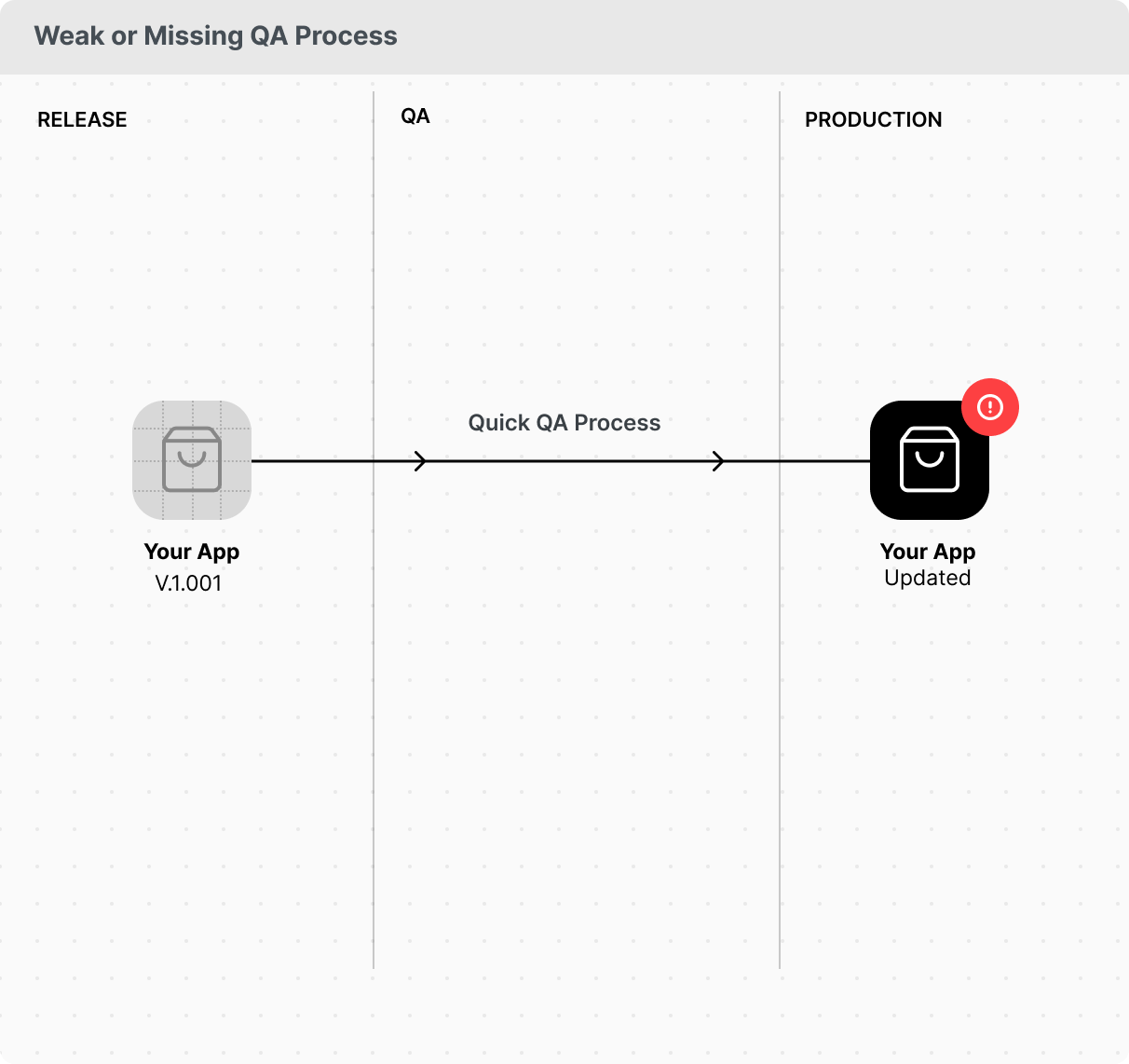

The problem with avoiding a QA process is that you just ship bad software. Bad software can negatively impact your business metrics.

Therefore, you must have a process in place. Let’s analyze in what ways a bad QA process can impact your business.

- Bugs block revenue-generating transactions.

  - Users can’t order food through your app.

  - Users can’t deposit money in your finance app.

- Engineering ends up reworking the same features and pushing hotfixes because bugs are found too late.

  - More engineering hours per feature → higher costs.

  - Slower time-to-market → delayed impact on users.

  - Less innovation reaching users → higher churn and weaker acquisition.

  - Engineers are forced into constant context switching instead of deep work → higher burnout, lower motivation, and increased attrition.

  - Fear of pushing to production grows → pull requests pile up, releases slow down, and every deployment feels risky.

- Poor customer experience often leads to churn and a loss of users.

  - Users have high standards: if your product is buggy, they will likely switch to a different provider. Only monopolies survive buggy products, and you don’t have that privilege.

  - Bugs damage your reputation and spread through word of mouth, costing you sales you never close.

  - Many companies have faced claims and complaints because of buggy software. You don’t want to be in that position.

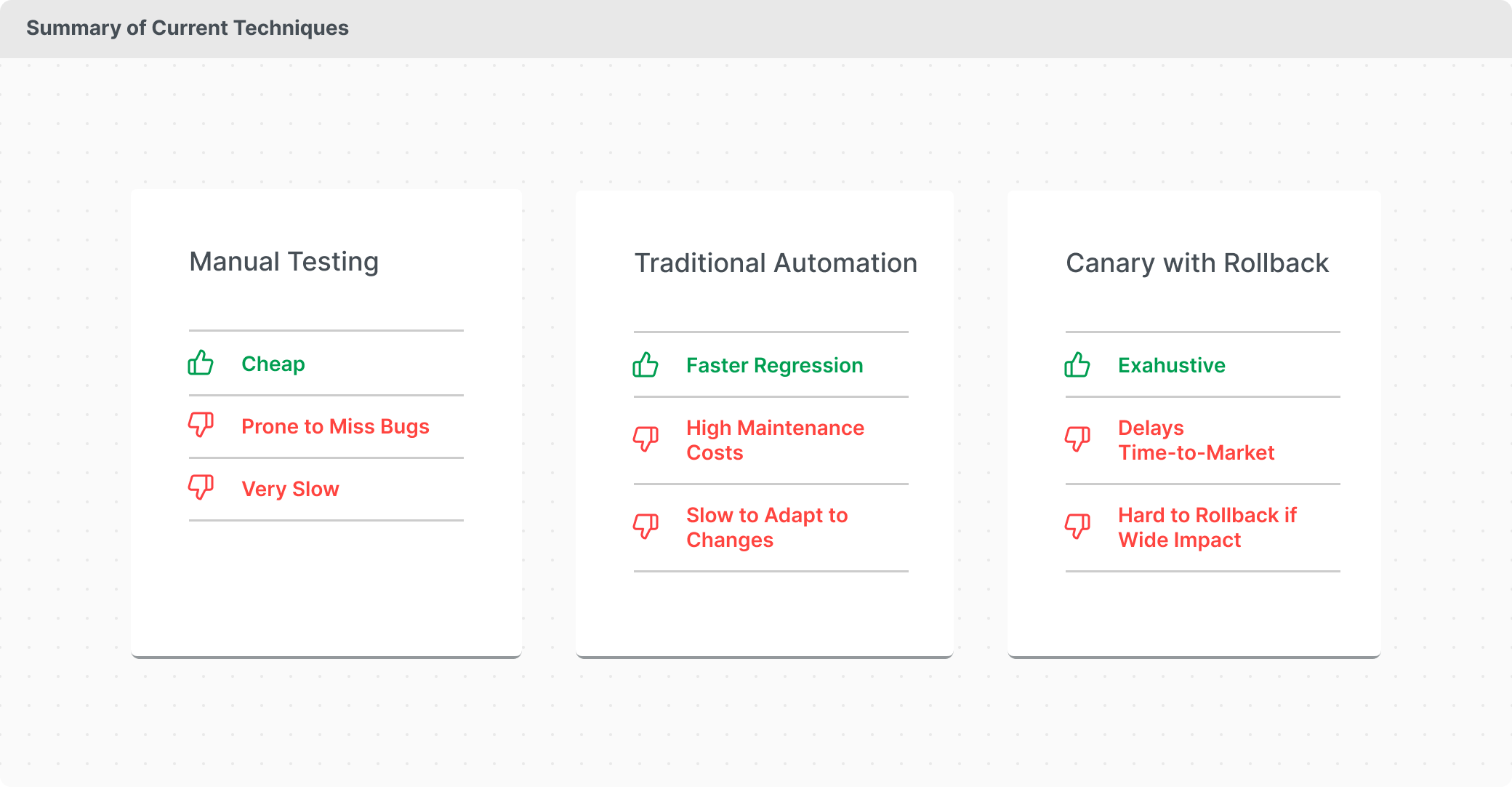

Most current QA approaches have significant drawbacks. Manual testing is slow and never thorough enough. Automation demands significant resources and ongoing maintenance. Canary deployments with heavy monitoring may look impressive, but they slow down time-to-market and are only an option for a few companies.

So, what’s the alternative? It’s time to rethink QA from the ground up. With AI, we can design workflows that just work — faster, leaner, and smarter. That’s QA 2.0.

QA 2.0 in the AI Era

At Autonoma, after studying how thousands of companies handle QA and gaining a deep understanding of the foundations of AI and software development, we propose a new way to test, one built on new processes, roles, and technologies. We are convinced that this approach is superior: those who adopt it will outperform the competition, while those who don’t will be overwhelmed by their own complexity.

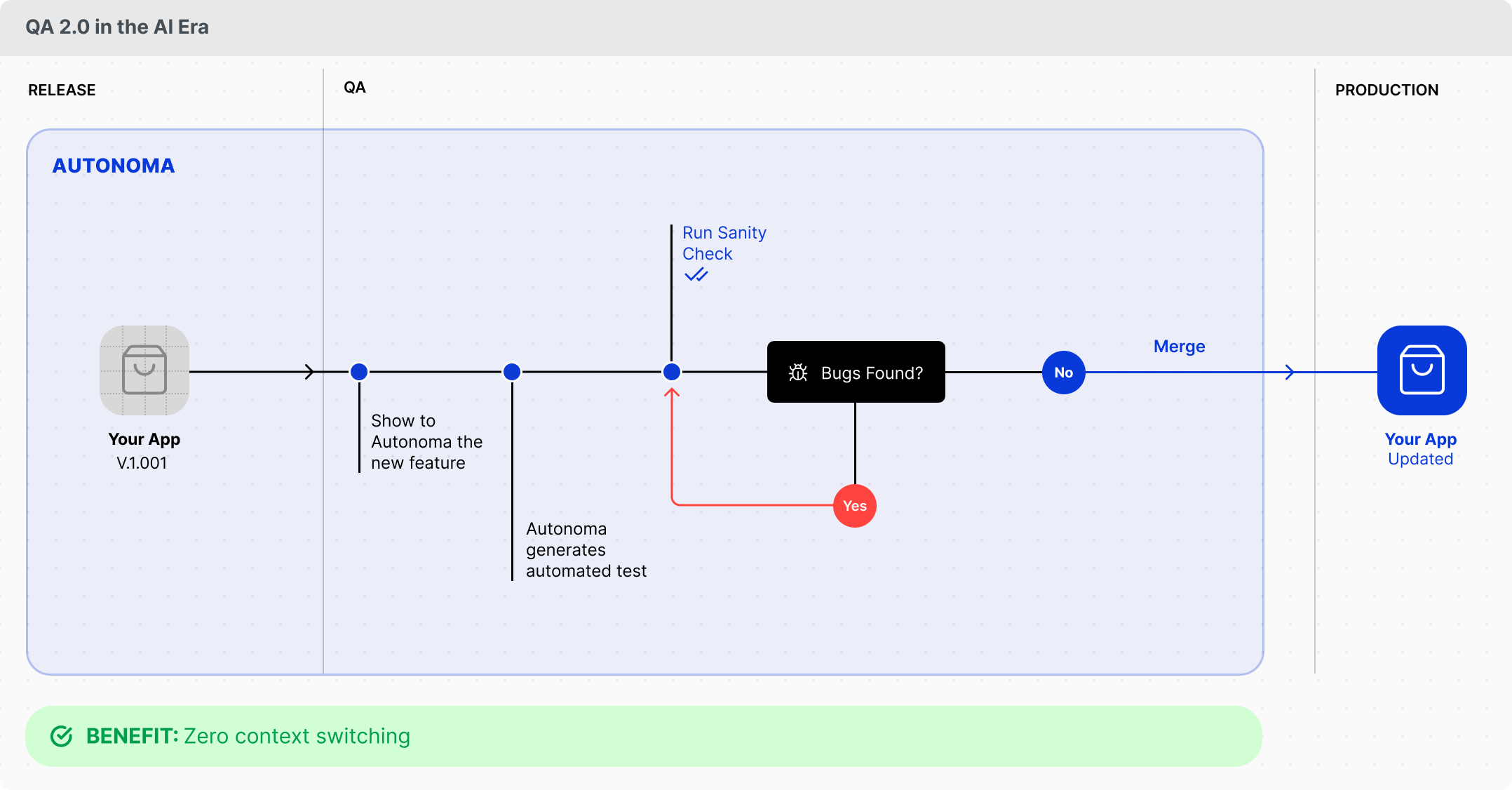

The first step is to abandon the idea that testing happens in bulk after development is complete. We understand why this pattern exists, but with highly automated processes, you can shift testing left, enabling each developer to validate their work earlier, catch inconsistencies while building, and avoid costly context switching later.

You may wonder how to build a truly automated process when current tools are so inefficient. The answer is AI. With Autonoma, instead of writing and maintaining brittle scripts, you simply tell the system what to test, either by showing it through a recording or by describing the steps in natural language. Anyone who can show or tell Autonoma what to test can now create an automated test. We call this role the Test Workflow Designer.

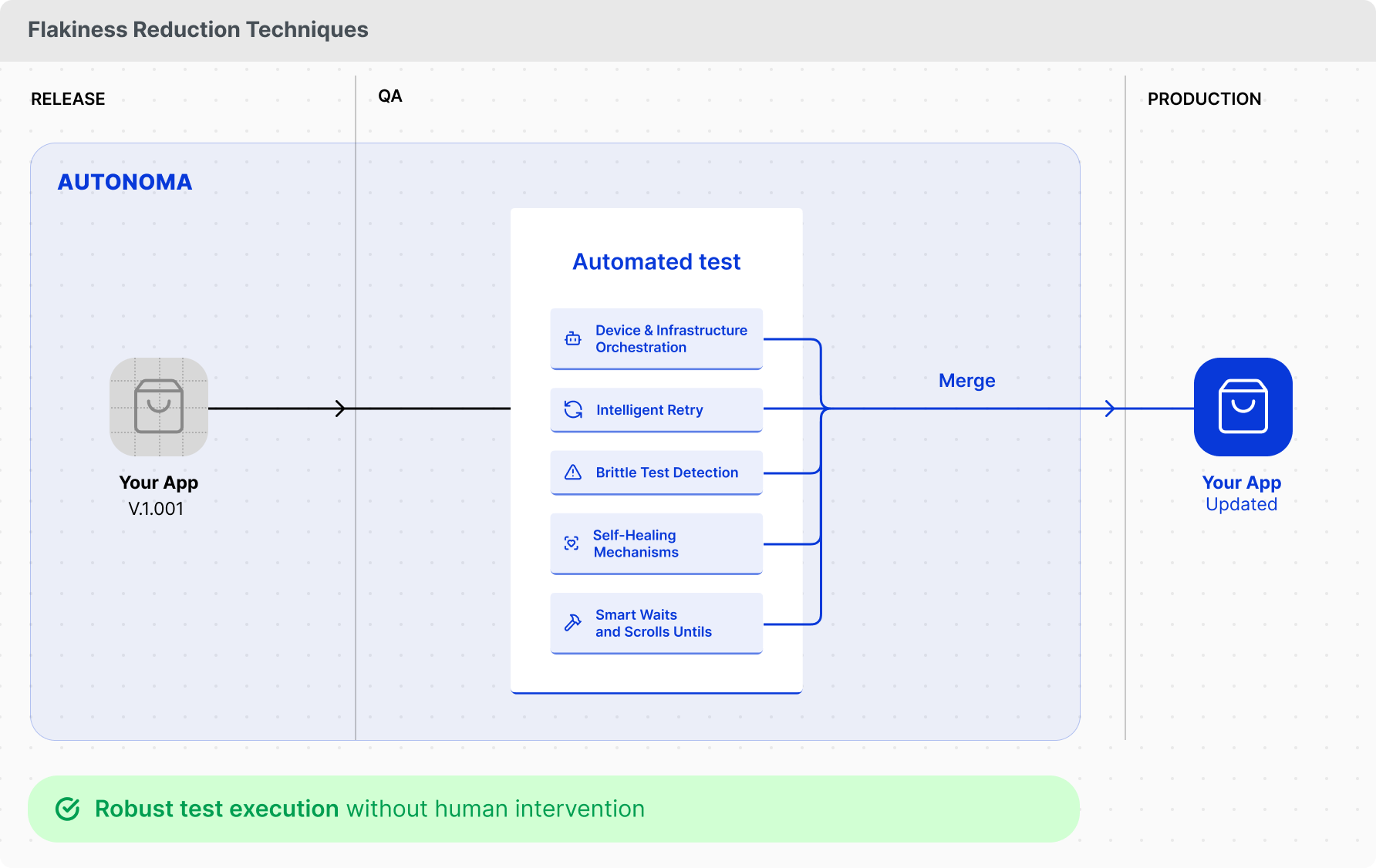

But what about maintenance when the UI changes? That’s where Autonoma is different. Because we capture the natural language intention, tests adapt to UI redesigns and self-heal without needing manual updates to selectors or components.

And what about the brittleness of testing infrastructure, both on the product side and the device side? Autonoma handles this too. Intelligent algorithms retry flaky tests, flag them when errors aren’t reproducible, and manage all device and infrastructure setup automatically. You never have to worry about deploying browsers or managing devices. It all works out of the box.

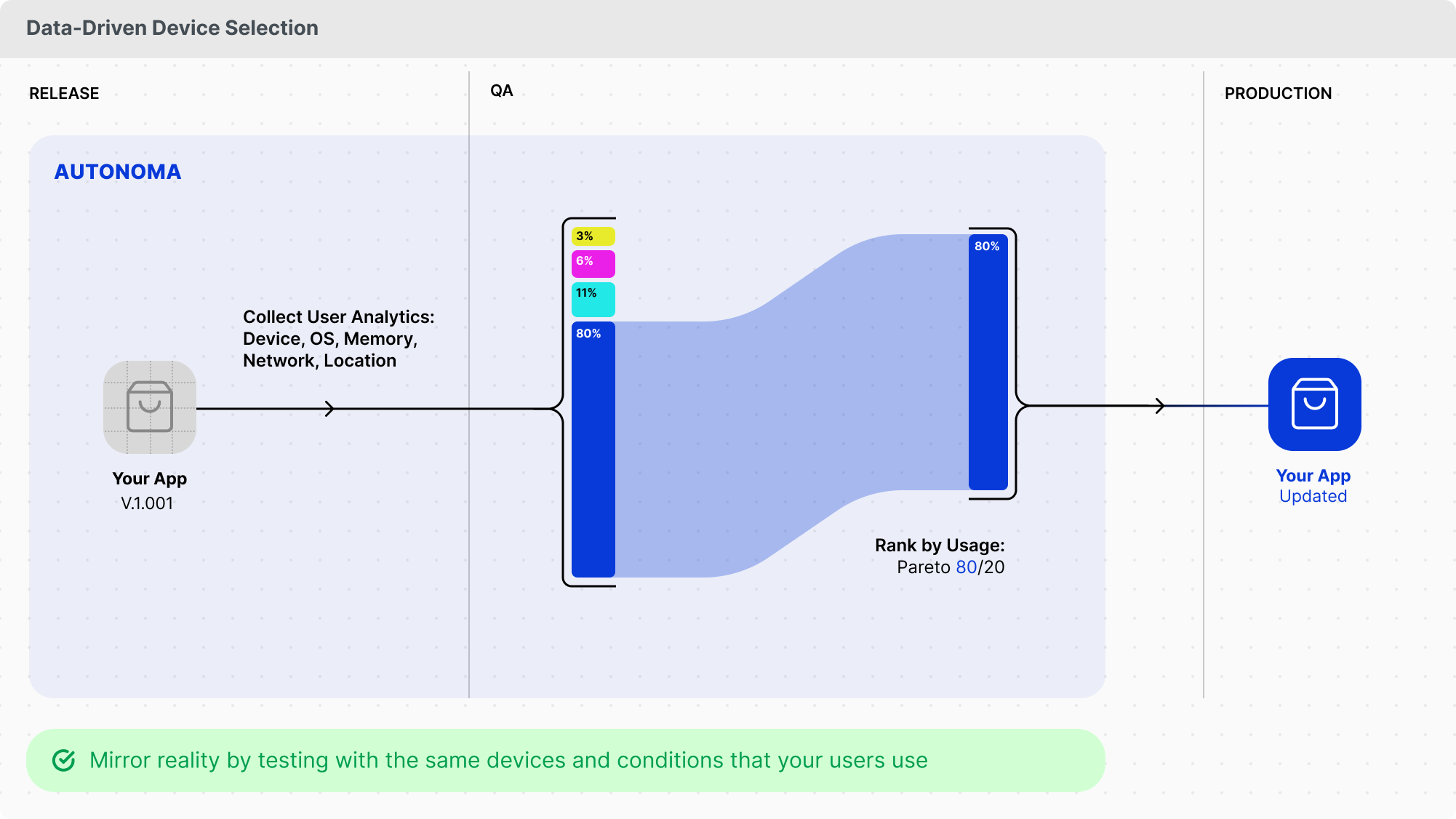

That’s not all. Our best clients take a data-driven approach to device selection. Instead of testing on every device or on just one, they apply the Pareto principle: they focus on the devices and conditions through which 80% of users actually access the app, looking at screen size, OS version, memory, connection quality, and location, and they test under real conditions rather than guesses.
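One simple way to apply the Pareto principle to device selection is a greedy pass over usage analytics: sort device configurations by session count and keep adding the most-used ones until they cover the target share of sessions. The sketch below assumes you already have session counts per configuration; the configuration labels and numbers are hypothetical, and this is an illustration rather than Autonoma's selection logic.

```python
def pareto_configs(sessions_by_config: dict[str, int], target_share: float = 0.8) -> list[str]:
    """Greedily pick the most-used device configurations until they cover at least
    `target_share` of all sessions. Configurations are sorted by usage, descending."""
    total = sum(sessions_by_config.values())
    selected: list[str] = []
    covered = 0
    for config, sessions in sorted(sessions_by_config.items(), key=lambda kv: kv[1], reverse=True):
        if total == 0 or covered / total >= target_share:
            break
        selected.append(config)
        covered += sessions
    return selected


# Hypothetical analytics: sessions per (OS, screen, connection) configuration.
sessions = {
    "iOS 18 / 6.1-inch / 5G": 4200,
    "Android 14 / 6.7-inch / 4G": 2600,
    "Android 13 / 6.4-inch / 3G": 1700,
    "Desktop Chrome / 1920x1080": 900,
    "iPadOS 17 / 11-inch / Wi-Fi": 400,
    "Android 12 / 6.5-inch / 3G": 200,
}
print(pareto_configs(sessions))
# ['iOS 18 / 6.1-inch / 5G', 'Android 14 / 6.7-inch / 4G', 'Android 13 / 6.4-inch / 3G']
```

In this example, three of six configurations cover 85% of sessions, so the regression matrix shrinks by half while still reflecting how most users reach the app.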

And what about the latest feature? With Autonoma, developers can generate a test for it in just 5 to 10 minutes as part of their deliverables. The result is that you maintain automation coverage at nearly 100 percent with minimal additional effort.

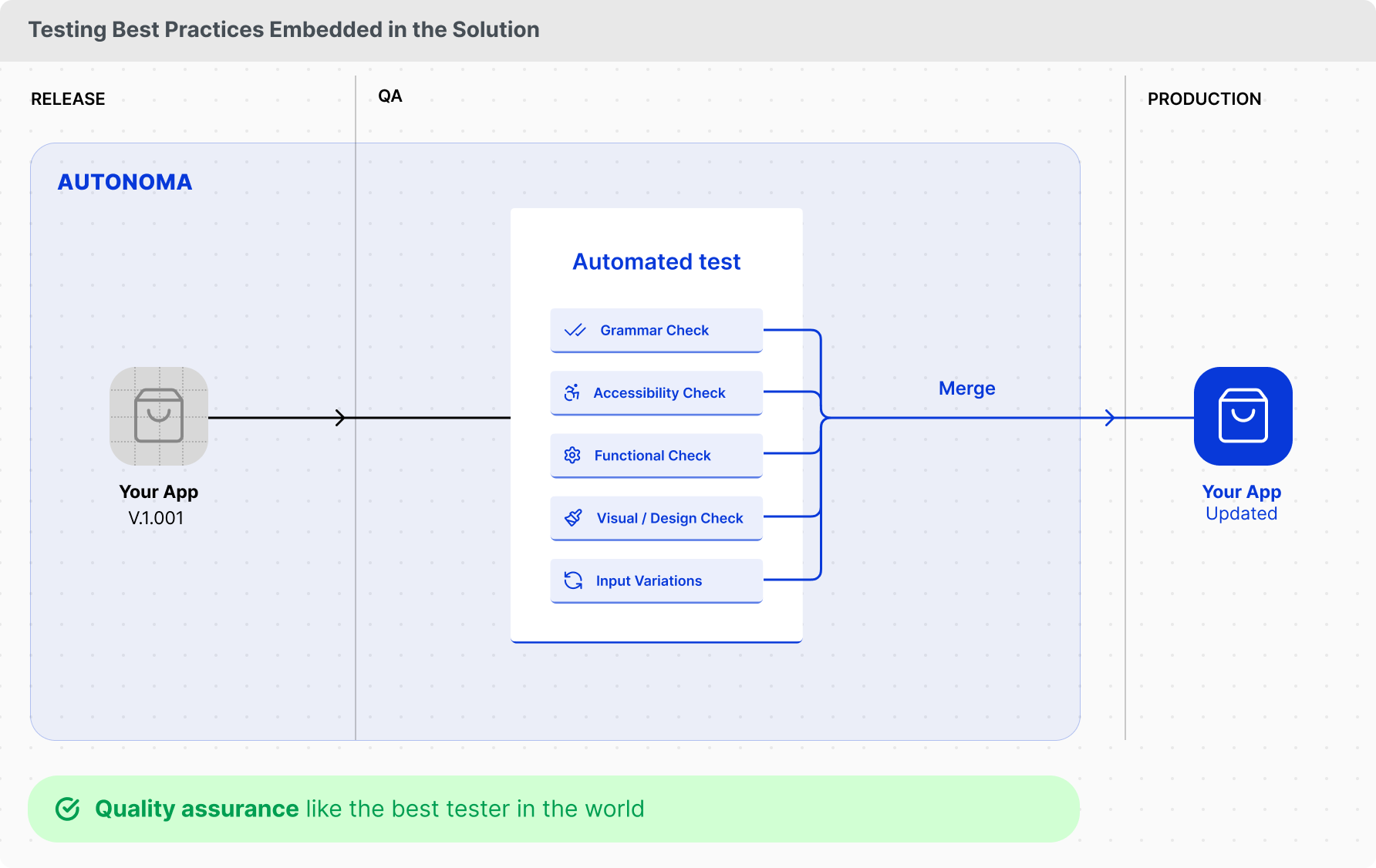

Finally, building good tests is an art, and Autonoma delivers them at AAA quality out of the box. Every screen a test passes through is automatically checked for grammar issues, accessibility problems, overlapping elements, cut-off text, and other design or functional inconsistencies. You don’t need to validate every detail manually because Autonoma does it for you. The same goes for inputs. Instead of testing one value at a time, you can simply prompt Autonoma to try multiple combinations and catch the ones that break the system.
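Trying many input combinations instead of one value at a time is, at its core, combinatorial testing. The sketch below shows the exhaustive version (a Cartesian product of candidate values) against a function call; it is an assumption-laden illustration, not Autonoma's mechanism, and a real UI test would drive the form rather than call code directly. `submit_deposit` is a hypothetical stand-in for the flow under test.

```python
from itertools import product
from typing import Any, Callable


def find_breaking_inputs(check: Callable[..., bool], **candidates: list[Any]) -> list[dict[str, Any]]:
    """Try every combination of candidate values (a Cartesian product) and return
    the combinations for which `check` returns False or raises an exception."""
    names = list(candidates)
    failures = []
    for values in product(*(candidates[name] for name in names)):
        case = dict(zip(names, values))
        try:
            ok = check(**case)
        except Exception:
            ok = False  # a crash counts as breaking the system
        if not ok:
            failures.append(case)
    return failures


def submit_deposit(amount: float, currency: str) -> bool:
    """Hypothetical flow under test: accepts positive amounts in USD or EUR."""
    if currency not in {"USD", "EUR"}:
        raise ValueError(f"unsupported currency: {currency}")
    return amount > 0


broken = find_breaking_inputs(submit_deposit, amount=[-5, 0, 0.01, 100], currency=["USD", "EUR", "XYZ"])
print(len(broken))  # 8 of the 12 combinations fail
```

Exhaustive products grow quickly as fields are added; pairwise (all-pairs) selection is a common way to keep the number of cases manageable.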

Let’s review how this looks when integrated into the software development life cycle.

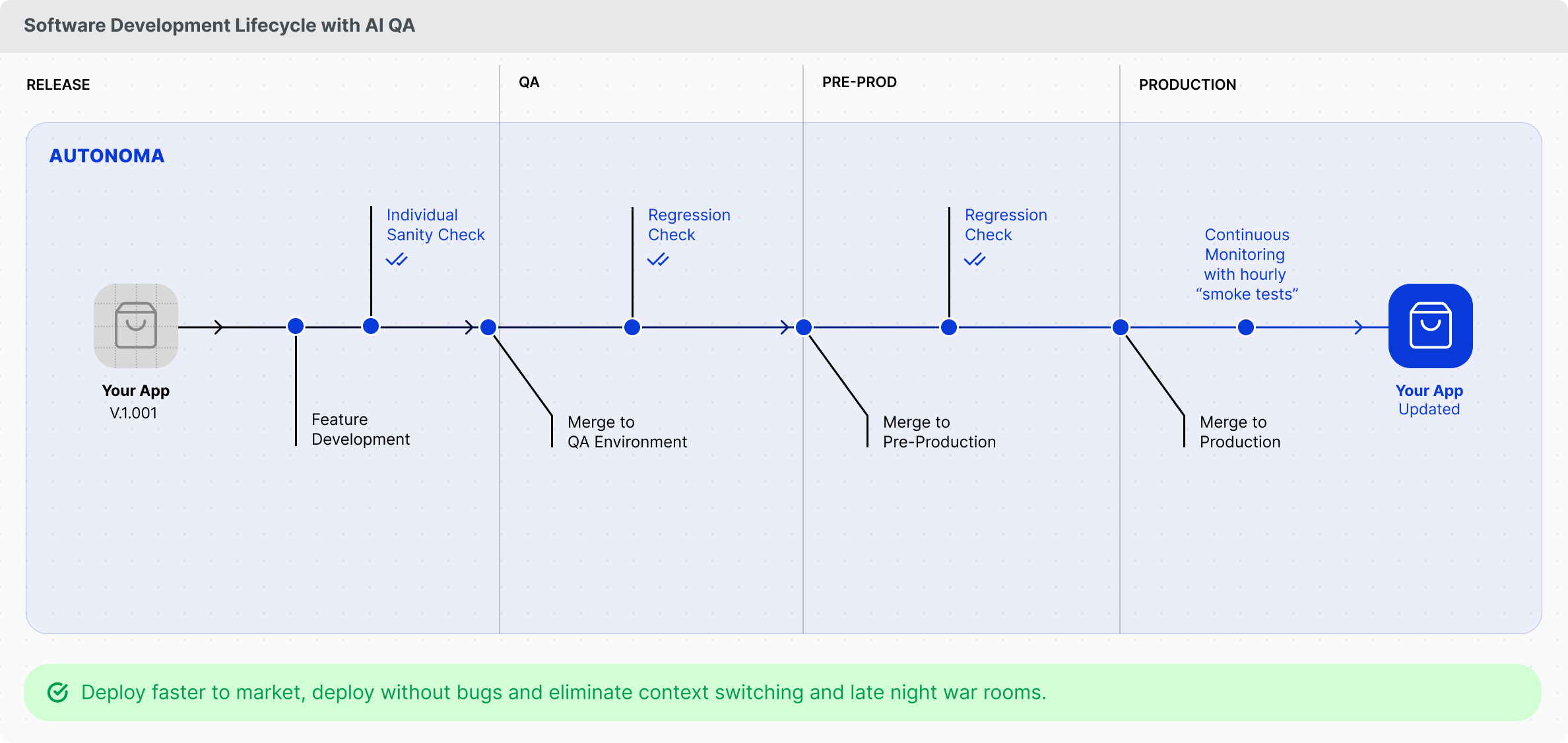

Developers build their features and immediately run an automated Sanity Check on the module they are working on. If a bug appears, it gets fixed right away, while the developer is still in the zone, with zero context switching.

Each developer does the same. Once all Sanity Checks are complete, the code is merged into a QA environment where a Release Manager triggers a full Regression Check. If everything passes, the build will move to a pre-production environment that shares a database with production, making it as realistic as possible. Here, another Regression Check is triggered. If no critical bugs are found, the release is ready to go live.

In production, teams run Smoke Tests daily or even hourly to confirm that critical functionality works as expected. Depending on the company, Canary or Blue-Green deployments can also be used to achieve even faster response times in the event of incidents.

Throughout the process, all Regression and Sanity Checks run across multiple devices and configurations, mirroring real user conditions and covering edge cases. If a check finds a bug, Autonoma automatically alerts the responsible team and assigns a priority based on the importance of the affected flow.
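The promotion logic in this life cycle (Sanity Checks, a Regression Check in QA, another in pre-production, and alerts prioritized by flow importance) can be sketched as a simple gate. The stage names, the flow-to-priority mapping, and the rule that only P0 failures block a release are assumptions made for illustration; they are not Autonoma's actual configuration.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    flow: str
    passed: bool


# Hypothetical mapping from a flow's business importance to an alert priority.
FLOW_PRIORITY = {"checkout": "P0", "deposit": "P0", "login": "P1", "profile": "P2"}
DEFAULT_PRIORITY = "P3"

# Stages of the life cycle, in order (names are illustrative).
STAGES = ("sanity", "regression_qa", "regression_preprod")


def promote(results: dict[str, list[CheckResult]]):
    """Walk the stages in order, alert on every failure, and block the release
    at the first stage where a critical (P0) flow fails."""
    alerts = []
    for stage in STAGES:
        failures = [r for r in results[stage] if not r.passed]  # KeyError if a stage never ran
        for r in failures:
            alerts.append((FLOW_PRIORITY.get(r.flow, DEFAULT_PRIORITY), stage, r.flow))
        if any(FLOW_PRIORITY.get(r.flow, DEFAULT_PRIORITY) == "P0" for r in failures):
            return "blocked", stage, sorted(alerts)
    return "ready_to_release", None, sorted(alerts)


results = {
    "sanity": [CheckResult("login", True)],
    "regression_qa": [CheckResult("checkout", True), CheckResult("profile", False)],
    "regression_preprod": [CheckResult("deposit", False)],
}
print(promote(results))
# ('blocked', 'regression_preprod', [('P0', 'regression_preprod', 'deposit'), ('P2', 'regression_qa', 'profile')])
```

A non-critical failure (here, `profile`) raises an alert but does not stop the build, which matches the idea that a release goes live when no critical bugs are found.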

Key Value Drivers of implementing an agentic approach to testing

Let’s look at the key value drivers of this process. They fall into two groups: technical and business. We always advise clients to track both. At the end of the day, business drivers are what really matter, but there’s often a delay between errors and their business impact. Technical indicators serve as proxies, enabling you to detect problems earlier and respond more quickly, before they impact the business.

Technical drivers

- Time-to-test

- Testing Coverage

- Regression run time

- Bugs found before production

- Number of reworks per feature

- Hotfixes per feature
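Several of these technical drivers can be computed from per-feature records that most teams already have in their issue tracker. The sketch below is one possible set of definitions, not a standard: it assumes hypothetical fields for bugs caught before and after release, reworks, and hotfixes, and it expresses "bugs found before production" as a share of all known bugs (the inverse of a defect escape rate).

```python
from dataclasses import dataclass


@dataclass
class FeatureRecord:
    name: str
    bugs_before_prod: int  # caught by Sanity or Regression Checks
    bugs_in_prod: int      # reported after release
    reworks: int           # times the feature was sent back to development
    hotfixes: int          # hotfixes shipped for the feature


def technical_drivers(features: list[FeatureRecord]) -> dict[str, float]:
    n = len(features)
    if n == 0:
        raise ValueError("need at least one feature")
    caught = sum(f.bugs_before_prod for f in features)
    escaped = sum(f.bugs_in_prod for f in features)
    total = caught + escaped
    return {
        # Share of all known bugs caught before production (1.0 if no bugs were found at all).
        "bugs_found_before_production": caught / total if total else 1.0,
        "reworks_per_feature": sum(f.reworks for f in features) / n,
        "hotfixes_per_feature": sum(f.hotfixes for f in features) / n,
    }


# Hypothetical sprint data.
sprint = [FeatureRecord("checkout-v2", 6, 2, 1, 1), FeatureRecord("wallet-top-up", 3, 1, 2, 0)]
print(technical_drivers(sprint))
# {'bugs_found_before_production': 0.75, 'reworks_per_feature': 1.5, 'hotfixes_per_feature': 0.5}
```

Tracking these per sprint makes trends visible early, before they show up in the business drivers.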

Operational business drivers

- Number of support tickets

- Resolution SLA

- Time to market (fewer reworks, ship faster)

- Customer experience (NPS, CSAT, etc.)

- Engineer attrition

- Customer churn caused by bugs

Closing

If you are looking to change your approach to testing, feel free to schedule a call with us, and let’s plan a deployment together with our experts.

Thanks for reading.

Eugenio Scafati - Chief Executive Officer