Autonomous Software Testing: How Intelligent Test Automation Saves Millions

Quick Summary: Autonomous testing represents the third generation of software testing, after manual and automated. Using AI agents that self-generate, self-heal, and self-maintain tests, autonomous testing breaks the economics that made QA expensive. Real enterprise results: $2M workforce cost optimization (60 manual testers repurposed), a 10% workforce reduction without quality loss, and a solo CTO completing full E2E testing that normally requires a team. For VPs at established enterprises in 2026, autonomous testing is not optional. It's the competitive advantage separating enterprises that ship weekly from those that ship quarterly.

The $2 Million Wake-Up Call

A VP of Engineering at a financial services company sent me a Slack message that changed how I talk about testing:

"We had 60 manual testers. After deploying intelligent test automation with Autonoma, we repurposed them to strategic work. That's $2 million in workforce cost optimization, and we're shipping faster than ever."

This is the power of autonomous testing: eliminate maintenance, scale infinitely, ship faster.

$2 million. Not from firing people. From elevating them.

The manual testers didn't lose their jobs. They gained better ones. They moved from repetitive regression testing to exploratory testing, to analyzing user behavior, to working alongside product managers on feature validation.

The testing still happened. In fact, more testing happened. It just didn't require humans to click through the same flows 500 times per sprint.

This isn't traditional automation. Automation still requires humans to write and maintain scripts. Autonomous software testing eliminates the maintenance entirely.

And it's transforming the economics of software quality.

What Autonomous Testing Actually Means

The term "autonomous testing" gets misused. Let me be precise about what makes testing truly autonomous versus simply automated.

The Three Generations of Testing

Manual testing had humans clicking through applications. Automated testing had scripts running those clicks. Both still needed humans to maintain them. Autonomous testing uses AI to create, run, and fix tests automatically, eliminating maintenance entirely.

The critical difference: Automated testing replaced manual execution. Autonomous testing replaces manual maintenance.

Speed vs Maintenance: The Core Tradeoff

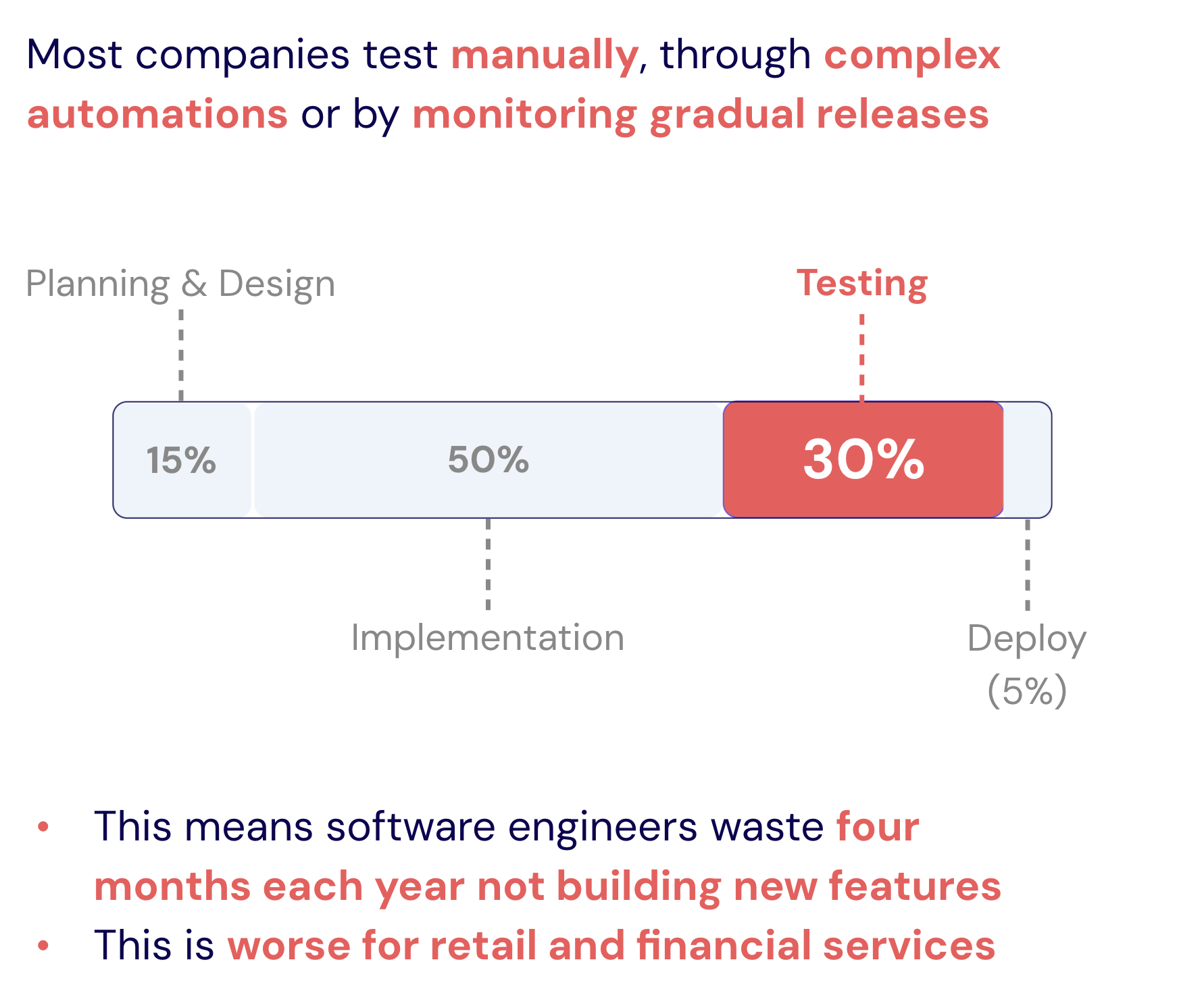

Manual testing is immediate to start but slow to scale. Create your first test instantly by clicking through the app. But execution is human-paced, and scaling requires linear headcount growth. For 50 tests, you're looking at $180K-240K annually in QA labor.

Automated testing is faster but more brittle. Creating each test takes 30-60 minutes of coding. Once built, tests run quickly. The problem? Maintenance consumes 30-40% of engineering time. UI changes break tests constantly. Engineers spend more time fixing brittle selectors than writing new tests. Annual cost for 50 tests: $120K-180K for the automation engineers, plus the hidden cost of constant maintenance.

Autonomous testing is fastest where it matters. Record a test in 2-5 minutes. Tests run at full automation speed with unlimited parallel execution. When UI changes, tests self-heal automatically. Maintenance time: zero. Annual cost for 50 tests: ~$60K for platform and oversight.

The Real Difference: What Happens When Your UI Changes

This is where the generations diverge completely.

Manual testing requires re-running everything by hand. Documentation gets outdated. Test coverage degrades.

Automated testing collapses. Tests break. Engineers spend days updating selectors. The false positive rate spikes. Teams either keep maintaining a large, constantly broken suite or abandon it entirely (most choose to abandon it).

Autonomous testing adapts. AI recognizes intent, not just selectors. When your designer changes the login button from button[type="submit"] to .btn-primary, the AI infers "submit login form" and finds the new button automatically. Tests continue passing. Zero human intervention required.
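A toy version of intent-based matching shows the idea. This Python sketch is not Autonoma's implementation (which, as described later, combines computer vision with DOM analysis); it assumes a hypothetical in-memory list of elements, a recorded intent reduced to a few keywords, and arbitrary scoring weights. The stored selector is tried first, and only when it no longer matches does the resolver rank elements by how well they fit the intent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Element:
    """Hypothetical, simplified stand-in for a DOM node."""
    tag: str
    text: str = ""
    attrs: dict = field(default_factory=dict)

    def matches_selector(self, selector: str) -> bool:
        # Only the two selector shapes from this example are supported:
        # a class selector like ".btn-primary" and 'button[type="submit"]'.
        if selector.startswith("."):
            return selector[1:] in self.attrs.get("class", "").split()
        if selector == 'button[type="submit"]':
            return self.tag == "button" and self.attrs.get("type") == "submit"
        return False


def intent_score(el: Element, intent_words: set[str]) -> int:
    """Heuristic fit between an element and the recorded intent."""
    score = 0
    if el.tag == "button" or el.attrs.get("role") == "button":
        score += 2  # the intent is an action, so clickable elements rank higher
    words = set(el.text.lower().split())
    words |= set(el.attrs.get("aria-label", "").lower().split())
    score += 3 * len(words & intent_words)  # visible text and labels weigh most
    return score


def resolve(page: list[Element], selector: str, intent_words: set[str]) -> Element | None:
    """Try the recorded selector first; if it no longer matches, fall back to intent."""
    for el in page:
        if el.matches_selector(selector):
            return el
    best = max(page, key=lambda el: intent_score(el, intent_words), default=None)
    if best is None or intent_score(best, intent_words) == 0:
        return None  # nothing plausible: report a failure instead of guessing
    return best


# After the redesign, the login button no longer has type="submit".
page = [
    Element("a", "Forgot password?", {"href": "/reset"}),
    Element("button", "Log in", {"class": "btn-primary"}),
]
target = resolve(page, 'button[type="submit"]', {"log", "login", "sign", "submit"})
print(target.text)  # Log in
```

Returning `None` when nothing scores above zero is the important design choice: a healer that always clicks *something* would turn real regressions into false passes.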

What Makes Testing "Autonomous"?

True autonomous testing requires five capabilities working together, but they're not all equally transformative.

Self-generation and self-healing are the foundation. Without them, you're just automating manual testing. Self-generation means AI creates tests from requirements (no human scripting). Self-healing means tests adapt when your UI changes. These two capabilities eliminate the setup and maintenance burden that makes traditional testing expensive.

Self-execution matters for scale. Tests run continuously in CI/CD pipelines without human intervention. It's essential, but less revolutionary than self-generation: automated testing already does this.

Self-analysis and self-optimization are where the real intelligence emerges. The AI distinguishes real failures from false positives and learns which tests catch bugs. Traditional automation can't do this. An engineer gets a notification: "Checkout flow broken. Payment API returning 500." Not "Test #47 failed."
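The difference between "Test #47 failed" and a useful alert can be sketched as a small triage step. This is a hypothetical Python sketch, not how Autonoma's AI works: it swaps in a simple retry heuristic for flakiness detection and looks for a server error in the run's network log. `RunResult`, the endpoint labels, and the retry count are illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RunResult:
    passed: bool
    flow: str  # human-readable name of the user journey, e.g. "Checkout"
    # (endpoint label, HTTP status) pairs observed during the run
    responses: list[tuple[str, int]] = field(default_factory=list)


def triage(run: Callable[[], RunResult], retries: int = 2) -> str:
    """Turn a raw test outcome into a message an engineer can act on."""
    first = run()
    if first.passed:
        return "pass"
    # A failure that disappears on rerun is treated as flaky, not as a bug.
    for _ in range(retries):
        if run().passed:
            return f"{first.flow} flow: flaky (passed on retry), not a product bug"
    # Consistent failure: point at server-side evidence if there is any.
    for endpoint, status in first.responses:
        if status >= 500:
            return f"{first.flow} flow broken. {endpoint} returning {status}."
    return f"{first.flow} flow broken. No server error seen; likely a UI regression."


def checkout_run() -> RunResult:
    return RunResult(False, "Checkout", [("Cart API", 200), ("Payment API", 500)])


print(triage(checkout_run))  # Checkout flow broken. Payment API returning 500.
```

Retrying also hides intermittent product bugs, so a production system would track how often each test is flaky rather than silently discarding those failures.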

Most "AI testing tools" only offer partial autonomy (usually self-healing or AI-assisted test generation). True autonomous testing requires all five capabilities working together.

Why Established Enterprises Need Intelligent Test Automation

When I talk to startups about autonomous testing, they're curious. When I talk to VPs at established enterprises (banks, insurance companies, healthcare organizations), they lean forward in their chairs.

They understand immediately why this matters. They're living it.

The Testing Burden You Know Too Well

If you're at an established firm in a regulated industry, you'll recognize these challenges:

Compliance isn't optional: Financial services can't skip testing transaction paths. Healthcare can't compromise on HIPAA validation. Insurance must verify every calculation. Each regulation adds test cases. The burden grows every year.

Integration complexity: Twenty-year-old mainframes integrated with modern web apps. COBOL backends talking to React frontends. Testing these integrations demands deep expertise, significant time, and careful coordination.

The safety tax: When downtime costs millions per hour, thoroughness isn't paranoia. Multiple QA rounds. Manual verification. Sign-offs from stakeholders. These necessary safeguards slow everything down.

Many established enterprises have 50-100+ testers. At fully-loaded costs, QA labor becomes a multi-million dollar annual expense that grows with your application.

Banks can't afford bugs, so validation can take a quarter. Where tech companies ship daily, established enterprises ship monthly or quarterly. Not because they don't want speed, but because ensuring quality takes time.

The Competitive Threat

Here's what keeps enterprise VPs up at night:

Fintech startups ship features weekly. Established banks ship quarterly.

Health tech companies iterate in days. Hospital systems iterate in months.

InsurTech competitors launch new products in weeks. Established insurers take quarters.

The slower you ship, the less competitive you become. Testing is the primary bottleneck.

Why Autonomous Testing Is the Answer

Autonomous testing solves the enterprise testing problem at the root. It eliminates most manual QA labor costs (no more 60-person manual QA teams). AI agents execute testing continuously at near-zero marginal cost. See the Economics section below for a detailed cost comparison.

Tests that took weeks now take hours. Deploy daily instead of quarterly. AI agents excel at testing complex integrations, edge cases, and legacy system interactions that manual testers struggle with.

Autonomous tests run every deployment, ensuring continuous compliance validation without adding manual burden. Your best QA minds move from regression testing to strategic initiatives.

Traditional enterprises that invest in autonomous testing become more competitive. Those that don't fall further behind.

Real Enterprise Results: The Numbers Don't Lie

Here are four companies that deployed autonomous testing. The numbers are real.

Story 1: Kavak's Proactive Incident Detection

Context: Kavak is Latin America's largest used car platform, processing thousands of vehicle transactions daily across multiple countries. Any downtime directly impacts revenue.

Challenge: Before Autonoma, Kavak relied on a SWAT team monitoring social media and manually browsing kavak.com to catch issues like missing vehicle data, wrong images, or broken flows. This reactive process meant customers often discovered problems before the team did.

Solution: Kavak deployed Autonoma's intelligent agents to run simulated user journeys across all critical flows continuously. Tests run in production, automatically creating Jira tickets when anomalies are detected before real users encounter them.
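Kavak's setup, synthetic journeys that open Jira tickets, can be sketched with Jira Cloud's REST API v2 create-issue endpoint (`POST /rest/api/2/issue`, basic auth with an account email and API token). This is a hypothetical illustration, not Kavak's or Autonoma's integration: the project key `OPS`, the site URL, the credentials, and the anomaly checks are placeholders, and the `Bug` issue type is assumed to exist in the target project.

```python
from __future__ import annotations

import base64
import json
import urllib.request


def check_listing(listing: dict) -> list[str]:
    """Hypothetical anomaly checks modeled on Kavak's examples."""
    problems = []
    if not listing.get("price"):
        problems.append("missing vehicle price")
    if not listing.get("images"):
        problems.append("no vehicle images")
    return problems


def build_issue_payload(project_key: str, flow: str, anomaly: str) -> dict:
    """Request body for Jira's v2 create-issue endpoint (plain-text description)."""
    return {
        "fields": {
            "project": {"key": project_key},
            "issuetype": {"name": "Bug"},
            "summary": f"[Synthetic monitor] {flow}: {anomaly}",
            "description": f"Detected by a scheduled synthetic user journey.\nFlow: {flow}\nAnomaly: {anomaly}",
            "labels": ["synthetic-monitoring"],
        }
    }


def create_issue(base_url: str, email: str, api_token: str, payload: dict) -> str:
    """POST the payload to Jira Cloud and return the new issue key, e.g. "OPS-123"."""
    token = base64.b64encode(f"{email}:{api_token}".encode()).decode()
    request = urllib.request.Request(
        f"{base_url}/rest/api/2/issue",
        data=json.dumps(payload).encode(),
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["key"]


# One run of a hypothetical journey found a listing with no price.
listing = {"id": "car-1", "price": None, "images": ["front.jpg"]}
for anomaly in check_listing(listing):
    payload = build_issue_payload("OPS", "Vehicle detail page", anomaly)
    print(payload["fields"]["summary"])
    # create_issue("https://your-site.atlassian.net", "bot@example.com", "API_TOKEN", payload)
```

In practice you'd also deduplicate, for example by checking for an open ticket with the same summary before creating a new one, so a persistent outage doesn't file a ticket on every run.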

Results:

- Transitioned from reactive (users report issues) to proactive (AI catches issues first)

- Significant reduction in user complaints and social media reports

- SWAT team repurposed from detecting errors to improving customer transaction experience

- Automated verification of recurring issues directly from Jira tickets

Visual validation caught issues traditional automation misses (wrong images, cut-off elements, inconsistent data display). The AI continuously monitors customer-facing flows, catching problems the moment they appear.

Story 2: The Solo CTO Achievement

A CTO at a YC-backed startup found us through our Vercel Marketplace partnership:

"I completed full E2E testing coverage by myself in a couple of days by reading your docs. Something that normally takes entire teams to accomplish."

This wasn't a small application. This was a production SaaS product with complex user flows, payment integrations, multi-tenant architecture, and role-based access control.

Normally, achieving comprehensive E2E coverage requires:

- 2-3 QA engineers working full-time for weeks (realistically $2-3K/month per person even offshore, best case)

- A QA lead to design the test strategy

- Coordination with developers to access staging environments

- Ongoing maintenance as the UI evolves

This CTO did it alone in a couple of days using Autonoma's free tier through Vercel. Self-healing meant he never had to maintain the tests.

Instead of hiring a QA team or accepting bugs in production, startups can now achieve comprehensive testing at zero upfront cost. For teams on Vercel, getting started with autonomous testing is free.

Autonomous testing doesn't just reduce cost. It fundamentally changes what's possible with limited resources. Startups can't afford bugs but can't afford QA teams either. Autonomous testing solves both problems.

Story 3: 10% Workforce Reduction Without Quality Loss

A major Latin American fintech company with thousands of employees recently underwent organizational optimization to improve operational efficiency.

Like many established fintech companies, they carried significant QA overhead from manual testing processes. As the application evolved and features multiplied, the QA burden grew unsustainably.

The company deployed Autonoma to handle regression testing, visual validation, and continuous monitoring of critical user flows. AI agents took over repetitive testing work that previously required significant manual effort.

Results:

- ~10% workforce reduction across the organization

- Many reductions came from QA and similar testing-related areas

- Quality metrics improved (more comprehensive testing, faster bug detection)

- Deployment frequency increased as testing no longer bottlenecked releases

The workforce reduction wasn't about sacrificing quality. It was about eliminating redundant manual work that AI performs better. The company leadership verbally confirmed that Autonoma's improvements enabled this optimization. Comprehensive AI testing is more consistent than human testing, catching edge cases manual testers miss.

Story 4: $2M Workforce Optimization (60 Manual Testers)

Back to the story that opened this article.

Context: A large financial services company with complex regulatory requirements and legacy systems integrated with modern web applications.

Challenge: 60 manual testers executing regression suites before every release. Each regression cycle took 2 weeks. Deployment frequency: once per month.

Deployment:

- Recorded critical user flows in Autonoma (2-5 minutes per test)

- Converted 80% of manual regression tests to autonomous tests

- Deployed autonomous tests in CI/CD pipeline

- Reduced regression cycle time from 2 weeks to 2 hours

Results:

- $2 million annual workforce cost optimization

- 60 manual testers repurposed to higher-value work (exploratory testing, security validation, user research)

- Deployment frequency increased from monthly to weekly

- Defect escape rate decreased (more comprehensive testing)

- Time-to-market for new features improved by 4x

What they didn't do: Fire 60 people.

What they did do: Elevated 60 people from soul-crushing repetitive work to strategic, creative, high-impact work that humans excel at.

The $2M savings came from not hiring additional QA as the company scaled. They grew engineering headcount 40% over two years without growing the QA team.

QA capacity scales with your product, not with your headcount.

The Economics: Why Traditional Testing Can't Scale Like Autonomous Testing

Let's be honest about how traditional testing economics actually work. It's not sustainable. It's a trap.

Option 1: Manual Testing (The Unsustainable Scaling Trap)

You start by hiring manual testers at $1,000/month offshore. Seems reasonable.

At first, it works. Your team of 3-5 people tests your application. Features get validated. Life is good.

Then you start shipping faster. New features arrive every sprint. Your testing backlog grows. You have two choices:

A) Test only the latest features (skip regression testing)

B) Keep hiring more testers

If you choose A, old features break in production. Customer complaints spike. You lose trust.

If you choose B, you're in an unsustainable scaling trap. Every sprint adds features. Every feature adds test cases. Every test case requires more testers. The cost grows with every sprint and never levels off. You're trapped.

Here's the cruel reality: adding more people doesn't solve the problem. Testing is like assembling a car or painting a wall. Adding people makes you faster up to a point, but the gains plateau quickly. Imagine 100 people trying to assemble a single car. They'd be stepping on each other, duplicating work, and creating confusion. It would actually take longer than if you had 2-5 people working efficiently.

By year two, you have 15-20 manual testers. At $1,000/month, that's $180-240K annually. And you still can't keep up.

Option 2: QA Automation Engineers (The Maintenance Trap)

You decide to "automate" testing. You hire QA automation engineers (really just developers specialized in Playwright or Appium).

Even offshore, you're looking at $2-3K/month minimum (and that's underpaying). A team of 5 automation engineers costs $120-180K annually.

The trap shifts from scaling to maintenance:

Problem 1: Tests are ready after you deploy. By the time engineers write automated tests, the feature is already in production. You're validating yesterday's work, not preventing tomorrow's bugs.

Problem 2: Maintenance becomes the bottleneck. Tests break every time UI changes. Engineers spend 30-40% of their time fixing brittle selectors. More features = more tests = more maintenance. The cost compounds.

And just like with manual testing, throwing more engineers at the problem doesn't solve it. You can't have 100 automation engineers maintaining the same test suite. They'd spend more time coordinating, resolving merge conflicts, and stepping on each other's work than actually fixing tests. The optimal team size is capped at 2-5 people, but the maintenance burden keeps growing.

You're not testing faster. You're delaying deployments, accumulating test debt, and burning engineering time on maintenance. This is why banks take quarters to ship. Not because they want to, but because testing can't keep up.

Option 3: Autonomous Testing (Breaking the Trap)

Autonomous testing eliminates these cost traps entirely.

Create 10 tests or 1,000 tests: same cost. AI generates them in minutes. UI changes? Tests self-heal automatically. Zero engineer time wasted on selector updates.

AI creates tests from requirements, not from finished features. Validate before shipping. More features don't require more people. The AI scales automatically.

For a mid-size enterprise, the comparison is stark:

- Manual testing: $180-240K annually (and growing)

- Automation engineers: $120-180K annually (plus 30% maintenance time)

- Autonomous testing: Platform cost + 2-3 strategic QA engineers (~$60K total)
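The first two ranges follow directly from the rates quoted earlier. This short Python check reproduces them, along with the dollar value of the 30% maintenance share; the autonomous figure isn't computed because the article doesn't break out the platform cost separately.

```python
from __future__ import annotations


def annual_cost(headcount: int, monthly_rate: int) -> int:
    """Annual labor cost in dollars: people x monthly rate x 12 months."""
    return headcount * monthly_rate * 12


# Inputs stated in this article (offshore rates).
manual = (annual_cost(15, 1_000), annual_cost(20, 1_000))    # 15-20 testers at $1,000/month
automation = (annual_cost(5, 2_000), annual_cost(5, 3_000))  # 5 engineers at $2-3K/month
# Share of automation-engineer cost spent on maintenance (30%), in whole dollars.
maintenance = tuple(cost * 30 // 100 for cost in automation)

print(manual)       # (180000, 240000)
print(automation)   # (120000, 180000)
print(maintenance)  # (36000, 54000)
```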

The comparison above doesn't account for hardware, infrastructure, cloud costs, hiring/firing overhead, or the opportunity cost of slow deployments.

We bring the agility of a startup to an established enterprise, without sacrificing the safety of E2E testing, powered by AI.

How Autonomous Software Testing Actually Works

Here's how we built Autonoma to deliver true autonomy:

Phase 1: Test Creation

Traditional automation requires engineers to write test scripts. Writing one test takes 30-60 minutes. Maintaining it across UI changes takes 15 minutes every sprint. Multiply by 500 tests, and that's 125 hours per sprint spent on maintenance.
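The maintenance arithmetic, written out so you can plug in your own suite size. The 500 tests and 15 minutes per test come from the paragraph above; the 26 two-week sprints per year are an assumption.

```python
from __future__ import annotations


def maintenance_hours(tests: int, minutes_per_test_per_sprint: float, sprints: int = 1) -> float:
    """Engineer-hours spent keeping scripted tests green across UI changes."""
    return tests * minutes_per_test_per_sprint * sprints / 60


print(maintenance_hours(500, 15))              # 125.0 hours per sprint
print(maintenance_hours(500, 15, sprints=26))  # 3250.0 hours per year (assumed 26 sprints)
```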

Autonomous testing eliminates the code. Navigate to your application. Click through the flow once. AI records your intent (not selectors). Test is ready in 2-5 minutes. Maintenance time: 0 minutes.

Phase 2: Self-Execution

Tests run continuously: every commit, every PR, on schedules, or in production monitoring. Unlimited parallel execution across web, iOS, and Android. Traditional platforms cap parallelization. Autonomous testing scales infinitely.
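Why parallelism matters: when tests are independent, wall-clock time is bounded by the slowest test rather than by the sum of all of them. This local Python sketch uses a thread pool as a stand-in for a fleet of browsers or devices; the simulated tests, the 0.2-second duration, and `max_workers` are illustrative assumptions, with `max_workers` playing the role of a platform's parallelization cap.

```python
from __future__ import annotations

import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable


def run_suite(tests: dict[str, Callable[[], bool]], max_workers: int) -> dict[str, bool]:
    """Run independent test callables concurrently; an exception counts as a failure."""
    results: dict[str, bool] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fn): name for name, fn in tests.items()}
        for future in as_completed(futures):
            name = futures[future]
            try:
                results[name] = bool(future.result())
            except Exception:
                results[name] = False
    return results


def simulated_test(ok: bool) -> Callable[[], bool]:
    def run() -> bool:
        time.sleep(0.2)  # stand-in for driving a browser or device
        return ok
    return run


suite = {f"flow_{i}": simulated_test(i != 3) for i in range(10)}
start = time.perf_counter()
results = run_suite(suite, max_workers=10)
elapsed = time.perf_counter() - start
# With 10 workers this takes roughly one test's duration instead of ten.
print(f"{sum(results.values())}/{len(results)} passed in {elapsed:.1f}s")
```

On real infrastructure the worker count is bounded by machines, browsers, and devices rather than threads, and tests must not share mutable state (accounts, carts), or parallel runs will interfere with each other.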

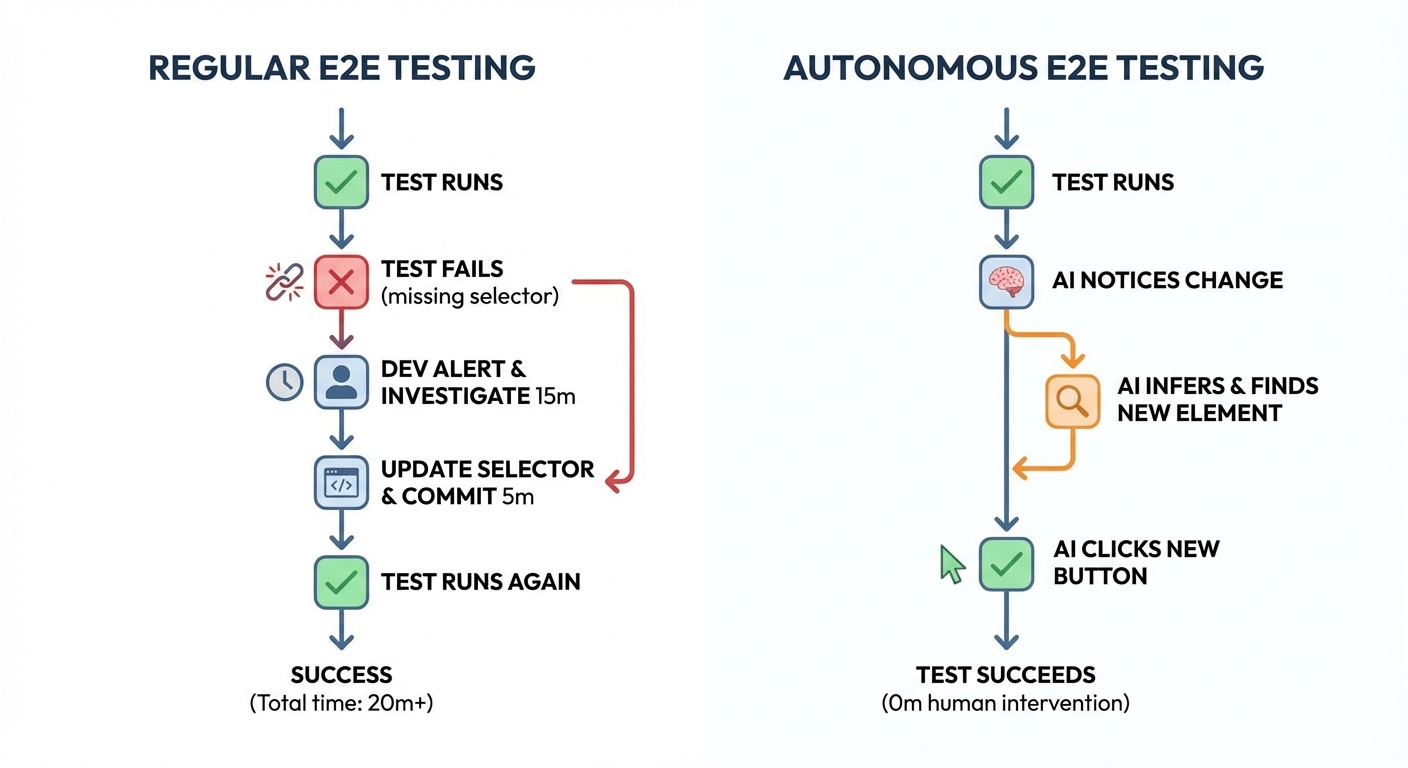

Phase 3: Self-Healing (The Critical Difference)

Your designer updates the login button from button[type="submit"] to .btn-primary.

Automated testing: Test fails. Developer investigates (15 min). Updates selector (5 min). Commits fix. Multiply by however many tests broke.

Autonomous testing: AI notices the button changed. Infers intent ("submit login form"). Finds new button using computer vision + DOM analysis. Clicks it. Test succeeds. Human intervention: 0 minutes.

This happens automatically, invisibly, continuously. UI changes don't break tests.

Phase 4: Intelligent Analysis & Routing

AI analyzes failures to distinguish real bugs from false positives. Instead of "Test #47 failed," engineers receive: "Checkout flow broken. Payment API returning 500."

The system routes issues intelligently to the right team, tracks team improvements and failures, and provides visibility to all stakeholders (not just developers). Everyone understands what failed and can properly prioritize fixes.
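Routing can start as a plain ownership map. This hypothetical Python sketch groups failure summaries by the team that owns each flow and sends anything unmapped to a default triage queue; the team names and the flow-to-team mapping are placeholders.

```python
from __future__ import annotations

# Hypothetical mapping from user flow to owning team.
OWNERS = {
    "Checkout": "payments-team",
    "Login": "identity-team",
    "Search": "discovery-team",
}


def route(failures: list[tuple[str, str]], default_team: str = "qa-triage") -> dict[str, list[str]]:
    """Group (flow, summary) failures into per-team queues."""
    queues: dict[str, list[str]] = {}
    for flow, summary in failures:
        team = OWNERS.get(flow, default_team)  # unknown flows still reach a human
        queues.setdefault(team, []).append(summary)
    return queues


failures = [
    ("Checkout", "Checkout flow broken. Payment API returning 500."),
    ("Profile", "Profile flow broken. No server error seen; likely a UI regression."),
]
queues = route(failures)
# Per-team failure counts: raw material for tracking each team's trend over time.
print({team: len(items) for team, items in queues.items()})
# {'payments-team': 1, 'qa-triage': 1}
```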

Phase 5: Continuous Optimization

The system learns which tests catch bugs, identifies redundant tests, optimizes execution order, and validates coverage meets compliance requirements. It gets smarter over time (the opposite of traditional test suites that accumulate technical debt).
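Two of these optimizations have simple, well-known baselines: ordering tests by historical failure rate (test prioritization) and flagging tests whose coverage is subsumed by another test. The Python sketch below implements those baselines, not Autonoma's learning system; `CaseStats` and the sample history are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CaseStats:
    name: str
    runs: int
    bugs_caught: int       # real defects this test has caught historically
    steps: frozenset[str]  # user-flow steps the test exercises


def bug_rate(case: CaseStats) -> float:
    return case.bugs_caught / case.runs if case.runs else 0.0


def execution_order(cases: list[CaseStats]) -> list[str]:
    """Run historically bug-revealing tests first so real failures surface sooner."""
    return [c.name for c in sorted(cases, key=bug_rate, reverse=True)]


def redundancy_candidates(cases: list[CaseStats]) -> list[str]:
    """Tests whose steps are a strict subset of another test's steps."""
    return [
        a.name
        for a in cases
        if any(a.steps < b.steps for b in cases if b is not a)
    ]


suite = [
    CaseStats("login", runs=100, bugs_caught=2, steps=frozenset({"open", "login"})),
    CaseStats("checkout", runs=100, bugs_caught=9, steps=frozenset({"open", "login", "cart", "pay"})),
    CaseStats("cart", runs=50, bugs_caught=3, steps=frozenset({"open", "login", "cart"})),
]
print(execution_order(suite))        # ['checkout', 'cart', 'login']
print(redundancy_candidates(suite))  # ['login', 'cart']
```

Subset coverage is only a hint: two tests can walk the same steps yet assert different things, so candidates should go to a human for review rather than being deleted automatically.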

Implementing Autonomous Testing: What to Expect

You're a VP at an established enterprise. You're thinking: "This sounds good, but what's the actual implementation reality?"

Week 1: Pilot (4-6 hours)

Sign up, connect to staging, record 10-15 critical flows (30-90 minutes total). Run tests to validate accuracy. If you're on Vercel, getting started is free. Zero risk.

Common questions: Will this work with our legacy systems? Yes, if it has a UI. Autonomous testing doesn't care if your backend is COBOL from 1985. It tests the interface. Complex authentication (SSO, MFA)? Record it once, reuse across all tests.

Month 1: Staged Rollout (20-30 hours)

Expand to 50-100 tests covering critical paths. Run autonomous tests in parallel with existing suite. Compare results. Train 3-5 team members. Set up CI/CD integration.

Track: Test creation time (90%+ reduction), maintenance time (100% reduction), regression duration (50-90% reduction), false positive rate (70-90% reduction), bugs caught pre-prod (30-50% increase).
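Running both suites side by side only proves something if you compare verdicts flow by flow. A minimal Python sketch with hypothetical flow names and results: it reports the agreement rate and lists the flows where the suites disagree, plus a helper for the percentage reductions listed above.

```python
from __future__ import annotations


def compare_suites(existing: dict[str, bool], autonomous: dict[str, bool]) -> dict:
    """Compare pass/fail verdicts for flows covered by both suites."""
    shared = existing.keys() & autonomous.keys()
    disagreements = sorted(f for f in shared if existing[f] != autonomous[f])
    agreement = (len(shared) - len(disagreements)) / len(shared) if shared else 0.0
    return {"flows_compared": len(shared), "agreement": agreement, "disagreements": disagreements}


def pct_reduction(before: float, after: float) -> float:
    """Percentage reduction, e.g. in test creation time or regression duration."""
    return 100 * (before - after) / before


existing = {"login": True, "checkout": False, "search": True, "profile": True}
autonomous = {"login": True, "checkout": False, "search": False, "profile": True}
print(compare_suites(existing, autonomous))
# {'flows_compared': 4, 'agreement': 0.75, 'disagreements': ['search']}
print(pct_reduction(40, 4))  # 90.0 (e.g. minutes to create one test, before vs after)
```

Each disagreement is worth investigating by hand: either the old suite missed a bug or the new one raised a false positive, and both outcomes feed the ROI case.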

By end of month 1, you'll have data proving the ROI case.

Quarter 1: Migration Decision

If you already have E2E tests working well, don't preemptively migrate. But as tests break, rebuild them in Autonoma. If you're struggling with maintenance or don't like your current solution, full migration makes sense.

Many companies consider building autonomous testing in-house. We've seen this play out dozens of times, including with very well-known companies famous for being builders.

Here's what building autonomous testing actually requires:

- AI/ML expertise for self-healing algorithms and intent recognition

- Computer vision systems for visual validation

- Device infrastructure for cross-platform testing (web, iOS, Android)

- Continuous R&D investment as AI/ML capabilities evolve

- 2-3 years minimum to reach production-grade reliability

The reality? We've been doing this for years. We know how hard it is. Companies try to build it, burn 6-12 months of engineering time, and come back to us after failing to reach the reliability their production systems need.

Your engineers should be building your core product and competitive advantages, not reinventing testing infrastructure. Get autonomous testing solved with Autonoma, and focus your team on what actually differentiates your business.

Buying Autonoma means immediate deployment, zero maintenance burden, and letting your engineering team focus on your actual product.

For established enterprises on Vercel, starting is free. Want more information? Contact us for enterprise pricing and custom solutions.

Common Objections (And Honest Answers)

Let me address the concerns VPs raise when evaluating autonomous testing. I'll be honest about limitations. Not every objection has a perfect answer.

Objection 1: "AI can't understand our complex business logic"

The concern: "Our application has complex workflows with conditional logic, multi-step processes, and business rules. AI can't reason about this."

The honest answer: Autonoma excels at UI and visual validations (testing user interactions, form submissions, navigation flows, and catching visual bugs). We don't look at your code, so we don't do unit, integration, or business logic testing.

But because it's so easy to create tests with Autonoma, you can add many E2E tests and figure out where problems arise. Most bugs manifest in the UI eventually.

The solution: Use autonomous testing for comprehensive E2E and UI testing. Keep unit/integration tests for business logic validation. Companies should have a combination. That said, our long-term goal is to make autonomous testing so fast and easy that you won't need unit tests: you'll just add a batch of E2E tests instantly.

Objection 2: "We need human judgment for quality"

The concern: "Testing isn't just pass/fail. It requires judgment, intuition, experience. AI can't replace that."

The honest answer: You're absolutely right. Autonomous testing removes the need for human execution of repetitive tests, not human judgment.

What AI replaces: Clicking through the same flows 500 times. Running regression tests. Maintaining test scripts when UI changes.

What AI doesn't replace: Exploratory testing. Usability evaluation. Edge case discovery. Security testing. Accessibility auditing.

The goal is to elevate QA from mechanical execution to strategic thinking. A company should never outsource its quality. Taking pride in and owning quality is critical. That's why Apple is Apple. They look at every detail.

We see ourselves as a bulldozer, making testing much easier, but always orchestrated by qualified individuals. The CTO who completed E2E testing alone didn't eliminate quality judgment. He automated execution so he could focus judgment on what matters.

Objection 3: "We have security/compliance concerns"

The concern: "We can't send production data or credentials to a third-party AI testing platform. HIPAA, SOC2, regulatory requirements prevent this."

The honest answer: Valid concern. Here's how Autonoma handles it:

Data isolation:

- Tests run in your environment (staging, not production)

- Use synthetic test data (not real customer data)

- Credentials stored encrypted, never exposed to AI models

- Self-hosted deployment available for very large enterprises (much more expensive)

Compliance certifications:

- SOC 2 Type 2 in progress (currently under evaluation, nearing completion)

- GDPR compliant (data residency options)

- SSO/SAML integration for authentication

- Audit logs for all test activity

Enterprise options:

- VPN and VPC-peering available

- AWS Marketplace deployment (in progress, contact us for updates)

For very large enterprises, we offer self-hosted solutions. Contact us to discuss your specific compliance requirements.

Objection 4: "This will eliminate QA jobs"

The concern: "Autonomous testing means firing our QA team. That's not our culture."

The honest answer: This is about people, so let's be direct.

Yes, the 10% workforce reduction at the Latin American fintech involved QA layoffs. That happened. But that's up to the company. It has nothing to do with the technology itself.

Look at the other real example: the financial services company behind the $2M result repurposed its 60 manual testers to strategic work rather than firing them. They moved to exploratory testing, security validation, and UX research.

The pattern we see in successful transitions:

Short-term: Pause QA hiring. Transition manual testers to exploratory testing. Train automation engineers on strategic initiatives.

Long-term: Small, elite QA team focused on strategy. Autonomous testing handles execution. Better quality plus lower cost.

This is workforce optimization. Companies that do this well treat it as career development: "Your role is evolving from test execution to quality strategy." Companies that handle it poorly use it as justification for layoffs.

The technology enables efficiency. How companies use that efficiency is their choice.

Objection 5: "We've tried test automation before—it failed"

The concern: "We invested in Selenium 5 years ago. Tests broke constantly. Engineers hated maintaining them. We eventually abandoned it. Why would this be different?"

The honest answer: Because autonomous testing is fundamentally different from automated testing.

Your Selenium failure wasn't your fault. Automated testing is inherently brittle.

Why automated testing failed: Selector-based tests broke every UI change. Engineers spent 30% of time maintaining tests. Flaky tests created false positives. Tests became technical debt.

Why autonomous testing is different: Intent-based tests survive UI changes (self-healing). Zero maintenance required. AI filters out flaky tests. Tests become more accurate over time (learning).

Also consider: AI has advanced at supersonic speed. Five years ago, LLMs and code generators were barely usable. Try it again.

It's free to try on Vercel, and we're happy to help. Reach out on X: @TomasPiaggio or @autonomaai.

If you tried automated testing and failed, you're the ideal customer. You know exactly what problems need solving. Tools like Cursor and Claude Code make automation easier, but you still need to provide infrastructure and maintenance. Autonomous testing eliminates both.

The Cost of Inaction

Let me share why established enterprises need to move now, not "eventually."

Wait one year to evaluate autonomous testing, and you'll spend $1.2M on QA that could be saved, waste 2,000 engineer-hours on test maintenance, and delay 12 major releases while your competitor ships 50 features.

Your best engineers leave for companies with modern tooling. Your competitor captures market share. You fall 12 months behind, with compounding disadvantages.

The talent dimension: Top engineers want to build products, not maintain tests. Companies with autonomous testing have a recruiting advantage.

Industry tipping points: Fintechs, health tech startups, and InsurTech companies already use autonomous testing. Once 30-40% of an industry adopts, the rest face existential pressure to follow.

Start now: Month 1, pilot with 10-15 critical tests. Quarter 1, expand to 50-100 tests. Quarter 2-4, full migration.

Your competitors are already evaluating this. The question isn't whether to adopt autonomous testing. It's whether you'll lead or follow.

Autonomous Testing Is Not Optional

Testing economics have fundamentally changed. The cost difference between traditional approaches and autonomous testing is an order of magnitude, but the real advantage is velocity. Companies using autonomous testing ship 4-10x faster; the financial services company above moved from monthly to weekly deployments.

The Real Stories

Kavak: Transitioned from reactive (customers report bugs) to proactive (AI catches bugs first). SWAT team repurposed to improving transactions.

Solo CTO: Achieved full E2E coverage alone in a couple of days. Normally that takes an entire team.

Latin American fintech: 10% workforce reduction, many from QA. Quality improved.

$2M financial services: 60 manual testers repurposed to strategic work. Deployment frequency 4x faster. Quality improved.

These aren't vendor promises. These are real results.

What Autonoma Does

Self-healing tests. Visual validation. Unlimited scaling. Cross-platform (web, iOS, Android). Intent-based recording (2-5 minutes per test). Zero maintenance.

- Try it: Get started free

- Calculate ROI: See your savings

- Read docs: Implementation guide

Frequently Asked Questions

What is autonomous testing?

Autonomous testing is the third generation of software testing, using AI agents to create, execute, maintain, and optimize tests without human intervention. Unlike automated testing (which requires humans to write and maintain test scripts), autonomous testing uses AI for self-generation, self-healing, self-execution, self-analysis, and self-optimization. The system adapts to UI changes automatically, eliminating test maintenance entirely. Examples of autonomous testing platforms include Autonoma AI, which offers comprehensive self-healing capabilities and visual validation.

How is autonomous testing different from automated testing?

Automated testing replaced manual execution (scripts run tests instead of humans clicking). Autonomous testing replaces manual maintenance (AI maintains tests instead of engineers updating selectors). Automated tests break when UI changes and require developer time to fix. Autonomous tests self-heal when UI changes and require zero maintenance. The economic difference: automated testing saves 40-50% vs manual, autonomous testing saves 80-90%.

How much does autonomous testing cost?

Pricing varies. Tools like Autonoma offer a free tier, with Pro and Enterprise plans depending on the customer's needs. In terms of infrastructure, autonomous testing usually costs slightly more than running tests on GitHub CI or equivalent CI/CD platforms, but the productivity gains and human resource savings far outweigh the difference.

Total cost of ownership for a mid-size enterprise (50 engineers, a 10-person QA team) is typically $300-500K annually (platform + small strategic QA team), compared to $1.2M for automated testing or $1.6M+ for manual testing. Real enterprise examples: $2M saved by repurposing 60 manual testers, 10% workforce reduction without quality loss.

What ROI can I expect from autonomous testing?

Most enterprises see 650-2,200% first-year ROI from autonomous testing. Direct savings come from eliminating test maintenance labor (worth $24K+ per engineer annually at 30% time spent on maintenance). Indirect value comes from faster deployment cycles (4-10x faster time-to-market), fewer production defects (comprehensive AI testing), and better talent retention (engineers prefer modern tooling). Real example: Financial services company saved $2M annually by repurposing 60 manual testers to strategic work.
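The article doesn't show how its ROI range is derived, but the conventional definition is ROI = (benefit − cost) / cost. This Python sketch applies it to hypothetical inputs, and also shows that the $24K maintenance figure corresponds to an assumed $80K annual salary at a 30% maintenance share ($24K / 0.30 = $80K).

```python
from __future__ import annotations


def maintenance_value(annual_salary: int, maintenance_pct: int) -> float:
    """Yearly cost of the engineer time spent maintaining tests."""
    return annual_salary * maintenance_pct / 100


def roi_pct(annual_benefit: float, annual_cost: float) -> float:
    """Conventional ROI: net gain divided by cost, as a percentage."""
    return 100 * (annual_benefit - annual_cost) / annual_cost


print(maintenance_value(80_000, 30))  # 24000.0
# Hypothetical inputs, not figures from the article:
print(roi_pct(annual_benefit=500_000, annual_cost=50_000))  # 900.0
```

Which benefits you count (avoided hires, maintenance hours, faster releases) drives the result far more than the formula does, so it's worth writing those inputs down explicitly.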

Does autonomous testing eliminate QA jobs?

No. Autonomous testing transforms QA roles from repetitive execution to strategic quality leadership. Real enterprise examples: 60 manual testers at a financial services company were repurposed to exploratory testing, security validation, and UX research rather than fired. One subscriber, a CTO, achieved full E2E coverage alone, something that normally requires an entire team. The pattern: companies pause QA hiring, transition existing QA staff to higher-value work, and shrink teams through natural attrition, not layoffs.

Can autonomous testing handle complex enterprise applications?

Yes, for UI/UX testing. Autonomous testing excels at testing user-facing behavior (clicks, navigation, form submissions, visual validation) across complex multi-step workflows, legacy systems, and modern web/mobile apps. It struggles with business logic validation requiring deep domain expertise (complex actuarial calculations, compliance rules, clinical algorithms). Best practice: use autonomous testing for 80% of UI testing, keep traditional unit/integration tests for complex business logic. This hybrid approach provides comprehensive coverage.
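In the hybrid approach, deterministic business rules stay with conventional unit tests. As a minimal sketch, here is a hypothetical late-fee rule (5% of the balance, capped at $40, waived under $10) with pytest-style tests. The rule and the `late_fee` name are invented for illustration. The point is that exact boundary and rounding behavior is easiest to pin down in code rather than through the UI.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def late_fee(balance: Decimal) -> Decimal:
    """Hypothetical rule: 5% of the balance, rounded half-up to the cent,
    capped at $40.00, and waived for balances under $10.00."""
    if balance < Decimal("10.00"):
        return Decimal("0.00")
    fee = (balance * Decimal("0.05")).quantize(CENT, rounding=ROUND_HALF_UP)
    return min(fee, Decimal("40.00"))


# pytest-style tests: plain functions with bare asserts, collected by `pytest`.
def test_waived_below_threshold():
    assert late_fee(Decimal("9.99")) == Decimal("0.00")


def test_percentage_in_normal_range():
    assert late_fee(Decimal("200.00")) == Decimal("10.00")


def test_cap_applies():
    assert late_fee(Decimal("5000.00")) == Decimal("40.00")


def test_rounds_half_up_to_the_cent():
    # 5% of 10.10 is exactly 0.505, which rounds half-up to 0.51.
    assert late_fee(Decimal("10.10")) == Decimal("0.51")
```

The sketch uses `Decimal` rather than `float` so that money calculations avoid binary rounding surprises, which is exactly the kind of detail a UI-level test is unlikely to catch.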

How long does it take to implement autonomous testing?

Typical timeline:
- Week 1 (pilot): 4-6 hours to record 10-15 critical tests and validate accuracy.
- Month 1 (staged rollout): 20-30 hours to expand to 50-100 tests and integrate with CI/CD.
- Quarter 1 (full migration): 40-80 hours to migrate 80%+ of the test suite.

Each test takes 2-5 minutes to create by recording, versus 30-60 minutes to code a traditional test. Ongoing maintenance drops to zero, versus 30-40% of engineering time for automated tests. Most teams prove ROI within the first 30 days.
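The authoring-time difference compounds with suite size. This Python sketch converts the per-test ranges quoted above into total hours for a 100-test suite. It covers authoring only, not validation or CI/CD integration, and the helper `authoring_hours` is a hypothetical name.

```python
from typing import Tuple


def authoring_hours(num_tests: int, minutes_range: Tuple[float, float]) -> Tuple[float, float]:
    """Convert a per-test minutes range into a total-hours range for a suite."""
    low, high = minutes_range
    return num_tests * low / 60, num_tests * high / 60


# Per-test ranges quoted above; 100 tests is the upper end of the Month 1 target.
recorded = authoring_hours(100, (2, 5))
coded = authoring_hours(100, (30, 60))

print(f"Recording: {recorded[0]:.1f}-{recorded[1]:.1f} hours")
print(f"Coding:    {coded[0]:.1f}-{coded[1]:.1f} hours")
```

For 100 tests, that works out to roughly 3.3-8.3 hours of recording versus 50-100 hours of hand-coding.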

What about security and compliance for autonomous testing?

Autonomous testing platforms address security and compliance through: (1) testing in staging environments with synthetic data rather than in production with real customer data, (2) encrypted credential storage, (3) on-premise deployment options for regulated industries, (4) SOC 2 and HIPAA compliance, (5) SSO/SAML integration, and (6) full audit logs. For highly regulated industries such as healthcare and financial services, on-premise deployment removes data exposure concerns while preserving the benefits of autonomous testing.
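Point (1) is straightforward to put into practice. Below is a minimal Python sketch of a reproducible synthetic-customer generator for a staging environment. The field names, name lists, and value ranges are hypothetical. A fixed seed makes test runs repeatable. Every email uses example.com, a domain reserved for documentation and testing by RFC 2606, so test messages never target a real customer's address.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SyntheticCustomer:
    customer_id: str
    name: str
    email: str
    balance_cents: int


# Hypothetical name pools; any non-sensitive values work.
FIRST_NAMES = ["Ana", "Ben", "Chen", "Dara", "Eli", "Fatima"]
LAST_NAMES = ["Okafor", "Lindqvist", "Tanaka", "Moreau", "Silva", "Novak"]


def synthetic_customers(count: int, seed: int = 42) -> List[SyntheticCustomer]:
    """Generate reproducible fake customers; the same seed yields the same data."""
    rng = random.Random(seed)  # isolated generator, unaffected by global random state
    customers = []
    for i in range(count):
        first = rng.choice(FIRST_NAMES)
        last = rng.choice(LAST_NAMES)
        customers.append(
            SyntheticCustomer(
                customer_id=f"TEST-{i:05d}",  # prefix makes test records easy to spot
                name=f"{first} {last}",
                # The index keeps emails unique even when names repeat.
                email=f"{first.lower()}.{last.lower()}.{i}@example.com",
                balance_cents=rng.randint(0, 500_000),
            )
        )
    return customers


for customer in synthetic_customers(3):
    print(customer)
```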

Which industries benefit most from autonomous testing?

Traditional enterprises in regulated industries see the highest ROI: financial services (banks, insurance, trading platforms), healthcare (EHR systems, telehealth), government (high compliance requirements), and large retail/e-commerce (complex checkout flows). These industries share four traits: (1) large manual QA teams (50-100+ testers, costing $4-8M annually), (2) complex compliance requirements that demand extensive test coverage, (3) legacy systems integrated with modern apps, which are difficult to test, and (4) slow release cycles in which testing is the bottleneck. Autonomous testing addresses all four pain points simultaneously.

The Bottom Line

Autonomous testing represents a fundamental shift in software quality economics: from labor-intensive to capital-intensive, from bottleneck to enabler, from cost center to competitive advantage.

The companies that adopt it early will compound their advantages: lower costs, faster shipping, better quality, stronger talent retention, superior competitive positioning.

The companies that wait will find themselves fighting uphill battles against competitors who move 4-10x faster at 80-90% lower testing cost.

The decision is yours. The window is now.

Start Your Autonomous Testing Journey:

Try Autonoma Free (Record your first test in 2 minutes)

Calculate Your Savings (See your specific ROI)

Read Implementation Guide (Technical details and best practices)

Schedule Executive Briefing (For VPs/C-level decision-makers)

Related Reading:

- Kavak Proactive AI Incident Detection (Case Study): Real enterprise autonomous testing implementation

- QA Automation Services: Agency vs In-House vs AI Cost Comparison: Compare traditional QA costs vs intelligent test automation

- State of QA 2025: How Engineering Teams Test Today: Industry trends in autonomous software testing

- Real Device Testing Strategy: Why Most Companies Waste Money: Optimize your test infrastructure

- BrowserStack Alternatives: 8 Better Testing Platforms: Modern autonomous testing platforms compared