The Language of Testing: Essential Terms Every QA Should Know

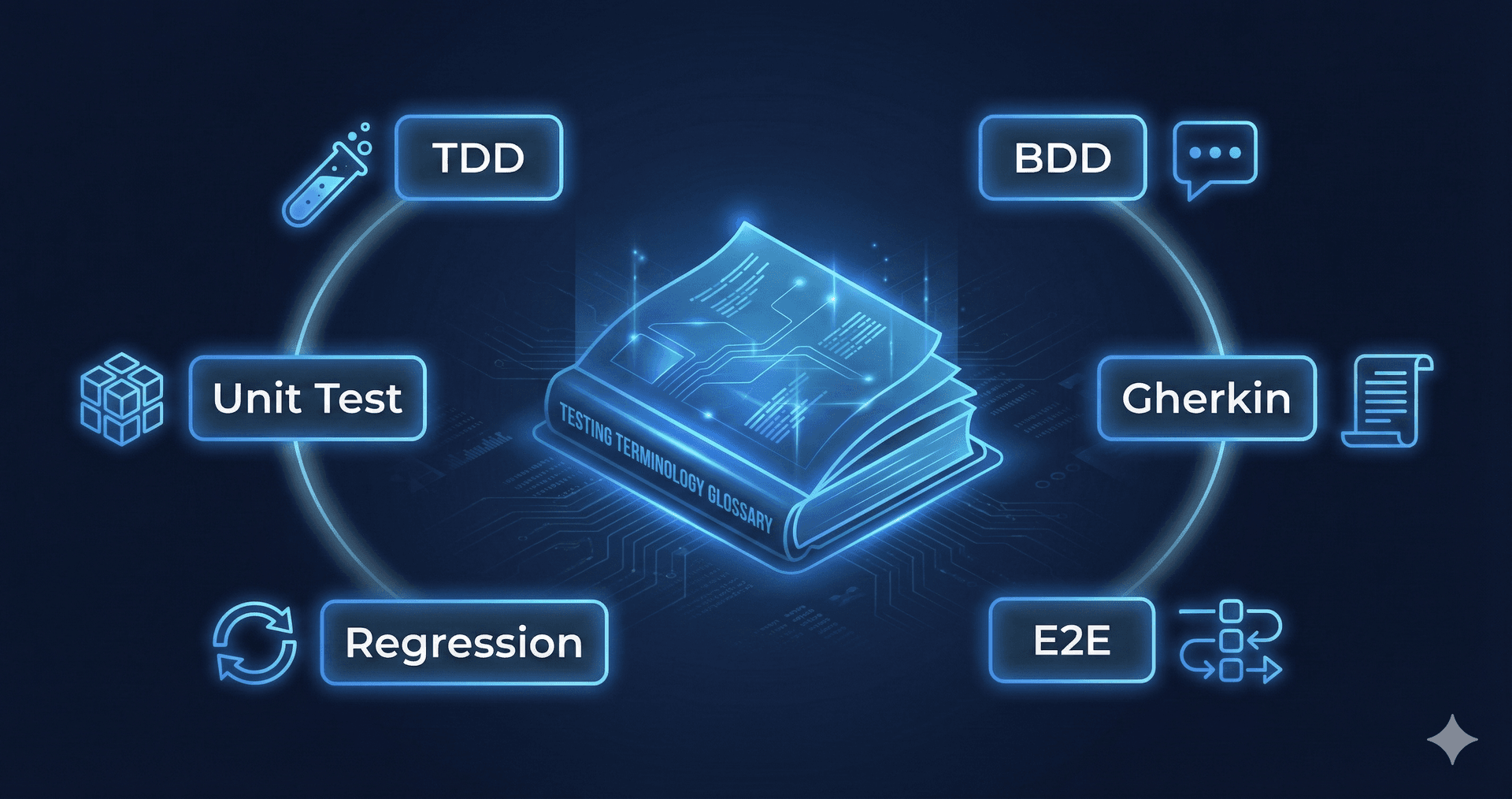

Quick summary: Test organization uses test cases, suites, and scenarios as building blocks. Methodologies like TDD, BDD, and ATDD represent different approaches (unit-focused design, behavior-focused communication, business-focused acceptance). Testing types (regression, smoke, sanity, integration, E2E, unit) each serve distinct purposes. Gherkin syntax uses Given-When-Then format to create executable specifications bridging technical and business communication.

Introduction

You're three weeks into your first QA job. Your manager asks, "Can you write some BDD scenarios for the regression suite?" You nod confidently. You have no idea what that means.

Later, a developer asks if you've tried TDD. Your team lead wants smoke tests before the sprint demo. Someone mentions Gherkin, and you're not sure if it's a testing framework or a lunch order.

Understanding software testing terminology is critical for any QA professional. Testing has its own language. Master it, and you'll communicate clearly with developers, product managers, and other QA engineers. Ignore it, and you'll spend half your time asking "What does that mean?"

This chapter decodes the essential terminology every QA professional needs to know.

Building Blocks: Tests, Cases, and Suites

Before diving into methodologies, let's clarify the fundamental units of testing.

A test case is a single scenario with specific steps, expected results, and pass/fail criteria. "User logs in with valid credentials" is a test case.

A test suite is a collection of related test cases grouped by feature, priority, or purpose. Your login test suite might include valid credentials, invalid passwords, locked accounts, and password reset flows.

A test scenario describes a user goal or workflow at a high level. "User completes checkout process" is a scenario that might contain dozens of test cases covering payment methods, shipping options, discount codes, and error handling.

Think of it like writing: test cases are sentences, test suites are chapters, and test scenarios are story arcs.
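Here is a minimal sketch of the first two terms using Python's built-in `unittest` module. Each test method is one test case, and `login_suite()` gathers them into a test suite. The `login` function and its hard-coded account are hypothetical stand-ins for a real system under test.

```python
import unittest


def login(email: str, password: str) -> bool:
    """Hypothetical system under test: accepts one hard-coded account."""
    return email == "user@example.com" and password == "correct-password"


class LoginTests(unittest.TestCase):
    # Each test method is one test case: specific steps, one expected result.
    def test_valid_credentials(self):
        self.assertTrue(login("user@example.com", "correct-password"))

    def test_invalid_password(self):
        self.assertFalse(login("user@example.com", "wrong-password"))

    def test_unknown_email(self):
        self.assertFalse(login("nobody@example.com", "correct-password"))


def login_suite() -> unittest.TestSuite:
    # A test suite groups related test cases so they can run together.
    return unittest.TestLoader().loadTestsFromTestCase(LoginTests)


if __name__ == "__main__":
    unittest.TextTestRunner(verbosity=2).run(login_suite())
```

A larger project would typically have one such suite per feature (login, checkout, search) and combine them for a full run.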

The Three Methodologies: TDD, BDD, and ATDD

Three letters appear constantly in testing conversations: TDD, BDD, and ATDD. They're not competing standards but different lenses on the same goal: building software that works.

Test-Driven Development (TDD)

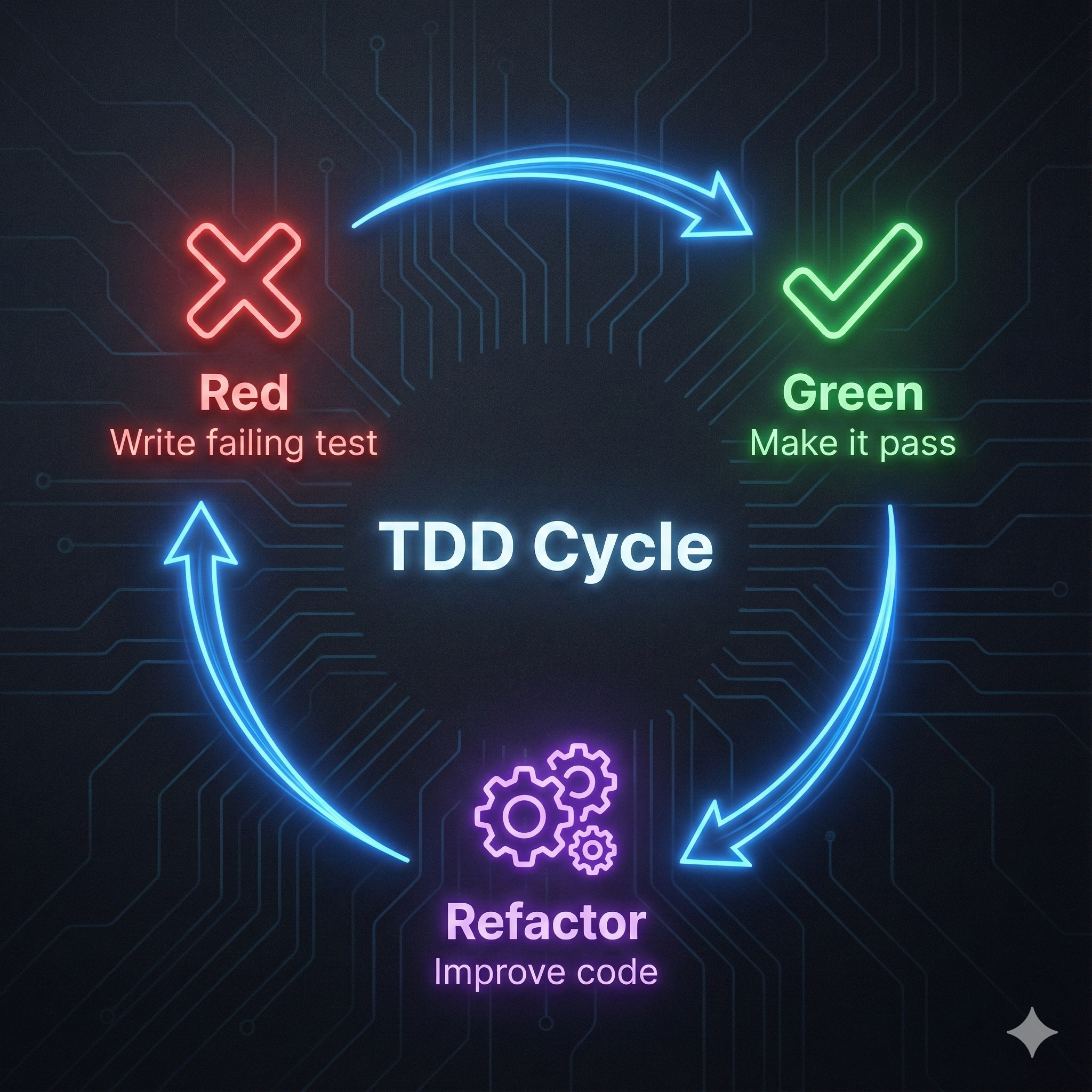

TDD focuses on unit tests and code design. Write a failing test first. Write the minimum code to make it pass. Refactor for clarity. Repeat.

This red-green-refactor cycle forces you to think about how your code will be used before you write it. The result? Modular, testable functions with clear interfaces.

Example: TDD in Python with pytest

```python
# Step 1: Write a failing test (RED)
def test_calculate_discount():
    assert calculate_discount(100, 0.2) == 80.0
    assert calculate_discount(50, 0.1) == 45.0


# Step 2: Write the minimal code to pass (GREEN)
def calculate_discount(price, discount_rate):
    return price * (1 - discount_rate)


# Step 3: Refactor if needed (BLUE)
# In a real module, keep only one definition: this one replaces the GREEN version.
def calculate_discount(price, discount_rate):
    if not 0 <= discount_rate <= 1:
        raise ValueError("Discount rate must be between 0 and 1")
    return price * (1 - discount_rate)
```

Example: TDD in JavaScript with Jest

```javascript
// Step 1: Write a failing test (RED)
test('calculateDiscount applies percentage correctly', () => {
  expect(calculateDiscount(100, 0.2)).toBe(80.0);
  expect(calculateDiscount(50, 0.1)).toBe(45.0);
});

// Step 2: Write the minimal code to pass (GREEN)
function calculateDiscount(price, discountRate) {
  return price * (1 - discountRate);
}

// Step 3: Refactor if needed (BLUE)
// In a real file, keep only one definition: this one replaces the GREEN version.
function calculateDiscount(price, discountRate) {
  if (discountRate < 0 || discountRate > 1) {
    throw new Error('Discount rate must be between 0 and 1');
  }
  return price * (1 - discountRate);
}
```

TDD works best for developers writing libraries, APIs, and pure functions. It's less useful for UI testing or complex integrations, where end-to-end behavior matters more than the design of individual units.

What TDD Really Means in Practice

TDD (Test-Driven Development) is about writing tests before code, forcing you to think from the user's perspective:

- Red: Write a failing test describing the desired behavior. This makes you clarify how your code should be used, often surfacing missing relationships or design flaws early. For example, you want to calculate a child's age, but writing the test reveals that your product page has no link to the person record, so you fix your data model first.

- Green: Write just enough code to pass the test. Don't worry about polish yet; just focus on correctness.

- Refactor: Clean up the code, relying on your tests to catch mistakes.
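Applied to the discount example, a second red-green-refactor cycle might look like the sketch below. Strictly speaking, adding input validation changes behavior. Under TDD it therefore starts with its own failing test rather than being slipped in during refactoring. The test name `test_rejects_out_of_range_rate` is illustrative. Plain `try`/`except` keeps the file runnable without pytest; with pytest you would typically write `with pytest.raises(ValueError):` instead.

```python
# RED: a new test for invalid rates. It fails against the GREEN version,
# which returns more than the original price for -0.1 and a negative
# price for 1.5.
def test_rejects_out_of_range_rate():
    for bad_rate in (-0.1, 1.5):
        try:
            calculate_discount(100, bad_rate)
        except ValueError:
            continue  # expected: the invalid rate was rejected
        raise AssertionError(f"rate {bad_rate} was accepted")


# The original test stays in place so the new change cannot break it.
def test_calculate_discount():
    assert calculate_discount(100, 0.2) == 80.0
    assert calculate_discount(50, 0.1) == 45.0


# GREEN: the smallest change that makes both tests pass.
def calculate_discount(price, discount_rate):
    if not 0 <= discount_rate <= 1:
        raise ValueError("Discount rate must be between 0 and 1")
    return price * (1 - discount_rate)


# REFACTOR: with both tests green, rename, simplify, or remove duplication,
# rerunning the tests after each change.
if __name__ == "__main__":
    test_calculate_discount()
    test_rejects_out_of_range_rate()
    print("all tests passed")
```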

Benefits:

- Improves design by making you consider real use cases first

- Safer refactoring and fewer bugs

- Tests double as documentation of intent

Downsides:

- Slower for simple code

- Can produce too many trivial, low-value unit tests

- Not ideal for every situation (UI, experiments, etc.)

TDD’s main strength: it helps you discover problems and design needs before you waste time building the wrong thing.

Behavior-Driven Development (BDD)

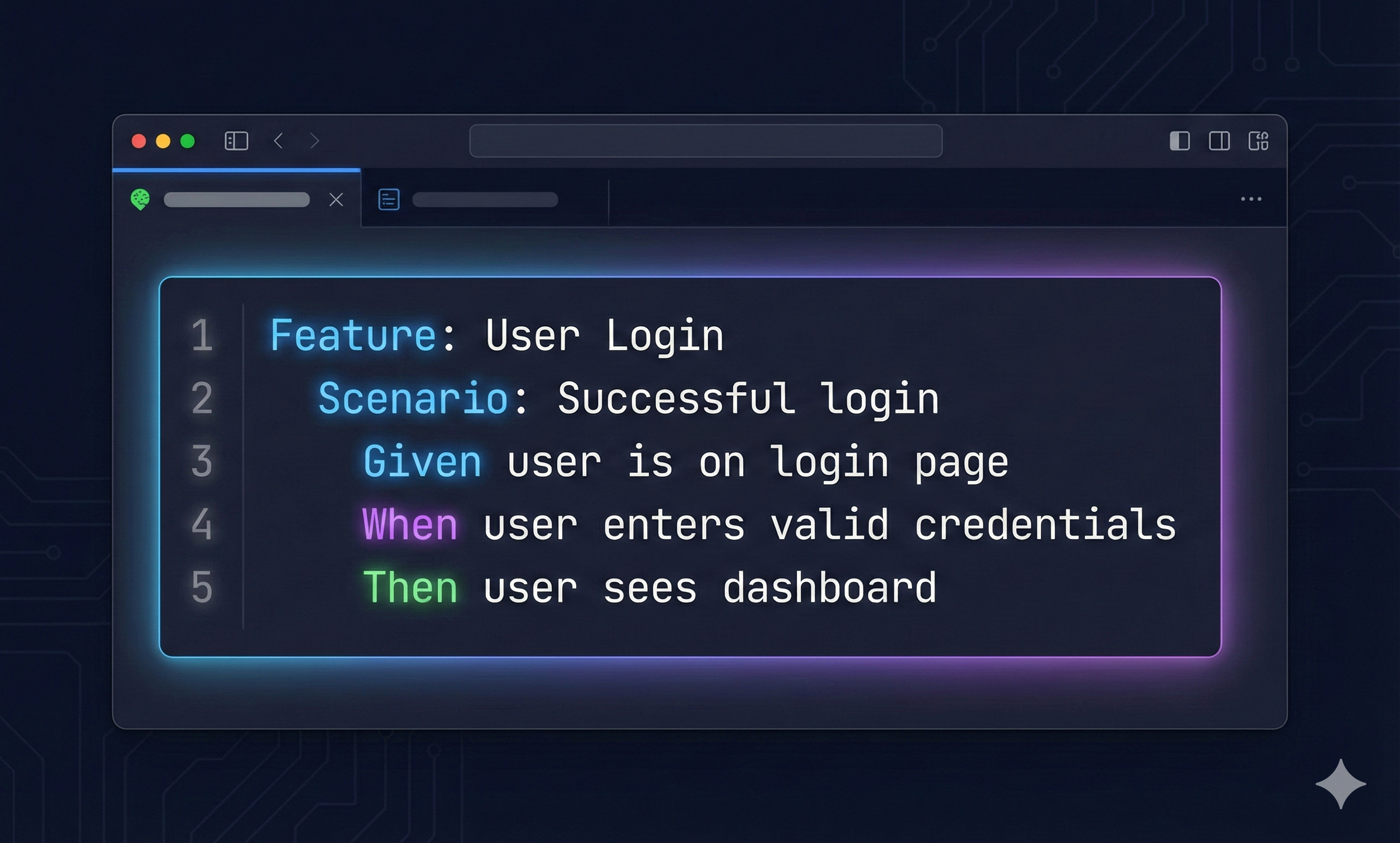

BDD shifts focus from units to behaviors. Instead of testing functions in isolation, you test how the system behaves from a user's perspective.

BDD uses natural language specifications that non-technical stakeholders can read. The most common format is Gherkin syntax: Given-When-Then.

Gherkin Feature File Example

```gherkin
Feature: User Login
  As a registered user
  I want to log in to my account
  So that I can access my dashboard

  Scenario: Successful login with valid credentials
    Given I am on the login page
    And I have a registered account with email "user@example.com"
    When I enter "user@example.com" in the email field
    And I enter my correct password
    And I click the "Login" button
    Then I should see the dashboard page
    And I should see "Welcome back, User" in the header

  Scenario: Failed login with incorrect password
    Given I am on the login page
    When I enter "user@example.com" in the email field
    And I enter an incorrect password
    And I click the "Login" button
    Then I should see an error message "Invalid email or password"
    And I should remain on the login page
```

The structure is simple:

- Given sets up the initial state (preconditions)

- When describes the action being tested

- Then defines the expected outcome
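Given-When-Then maps closely onto the arrange-act-assert shape of an ordinary automated test. That is one reason the format works outside BDD tools too. The sketch below writes the failed-login scenario as a plain Python test. `AuthService`, its methods, and the stored password are hypothetical in-memory stand-ins, not a real authentication API.

```python
class AuthService:
    """Hypothetical in-memory login service, used only for illustration."""

    def __init__(self):
        self._accounts = {}

    def register(self, email, password):
        self._accounts[email] = password

    def login(self, email, password):
        # Return a (success, message) pair, like a simple login form might.
        if self._accounts.get(email) == password:
            return True, "Welcome back"
        return False, "Invalid email or password"


def test_failed_login_with_incorrect_password():
    # Given a registered account
    auth = AuthService()
    auth.register("user@example.com", "s3cret")
    # When the user submits an incorrect password
    ok, message = auth.login("user@example.com", "wrong")
    # Then login fails with the generic error message
    assert not ok
    assert message == "Invalid email or password"
```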

BDD bridges the gap between business requirements and technical tests. Product managers write scenarios. Developers implement step definitions. QA engineers verify behavior matches expectations.
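A step definition is simply code bound to a step's text. Tools such as Cucumber, behave, and pytest-bdd each provide their own decorators and matching rules for this. The dependency-free sketch below imitates the idea with a hypothetical `step` decorator and `run_scenario` runner; it is not any tool's actual API. Quoted values in a step become arguments to the matching function, and a step with no definition fails loudly.

```python
import re

STEPS = []  # (compiled regex, step function) pairs, in registration order


def step(pattern):
    """Hypothetical decorator: bind a step function to a regex over step text."""
    def decorator(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return decorator


@step(r'I have a registered account with email "([^"]*)"')
def given_registered_account(ctx, email):
    ctx["accounts"] = {email: "s3cret"}  # hypothetical stored password


@step(r'I log in as "([^"]*)" with password "([^"]*)"')
def when_log_in(ctx, email, password):
    ok = ctx["accounts"].get(email) == password
    ctx["page"] = "dashboard" if ok else "login"


@step(r"I should see the (\w+) page")
def then_see_page(ctx, page):
    assert ctx["page"] == page, f"expected {page} page, got {ctx['page']}"


def run_scenario(text):
    """Run each Given/When/Then/And line through the first matching step."""
    ctx = {}
    for raw in text.strip().splitlines():
        keyword, _, body = raw.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And"}:
            continue  # skip Feature:, Scenario:, and blank lines
        for pattern, func in STEPS:
            match = pattern.fullmatch(body)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"No step definition for: {raw.strip()}")
    return ctx
```

If a scenario's password does not match, the `Then` step raises `AssertionError`. That is essentially how a BDD runner reports a failed scenario.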

Acceptance Test-Driven Development (ATDD)

ATDD sits between TDD and BDD. Like BDD, it focuses on acceptance criteria. Like TDD, it writes tests before implementation. But ATDD emphasizes collaboration among developers, testers, and business stakeholders before any code is written.

The team defines acceptance criteria together. Those criteria become automated tests. Development isn't complete until all acceptance tests pass.

ATDD works well for regulated industries where compliance and documentation matter. The acceptance tests become living specifications of what the system must do.
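As a minimal sketch, assume the team has agreed on two hypothetical acceptance criteria for shipping fees. Written as concrete examples before any code exists, they double as the automated acceptance tests. The feature is done only when all of them pass. The threshold, fee, and function names are invented for illustration.

```python
from decimal import Decimal

# Acceptance criteria agreed by the team before coding (hypothetical):
#   AC1: Orders of $50.00 or more ship free.
#   AC2: Orders under $50.00 pay a flat $5.99 shipping fee.
ACCEPTANCE_EXAMPLES = [
    # (order total, expected shipping, criterion)
    (Decimal("49.99"), Decimal("5.99"), "AC2"),
    (Decimal("50.00"), Decimal("0.00"), "AC1"),
    (Decimal("120.00"), Decimal("0.00"), "AC1"),
]

FREE_SHIPPING_THRESHOLD = Decimal("50.00")
FLAT_FEE = Decimal("5.99")


def shipping_cost(order_total: Decimal) -> Decimal:
    """Implementation written after the examples above were agreed."""
    if order_total >= FREE_SHIPPING_THRESHOLD:
        return Decimal("0.00")
    return FLAT_FEE


def run_acceptance_tests():
    """Return failing examples; an empty list means the feature is done."""
    return [
        (total, expected, criterion)
        for total, expected, criterion in ACCEPTANCE_EXAMPLES
        if shipping_cost(total) != expected
    ]
```

Boundary examples such as exactly $50.00 are where the team conversation usually pays off: they force everyone to agree on "or more" versus "more than" before development starts.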

Testing Types: When to Use Each

Testing types describe what you're testing and when. Each serves a specific purpose in your quality strategy.

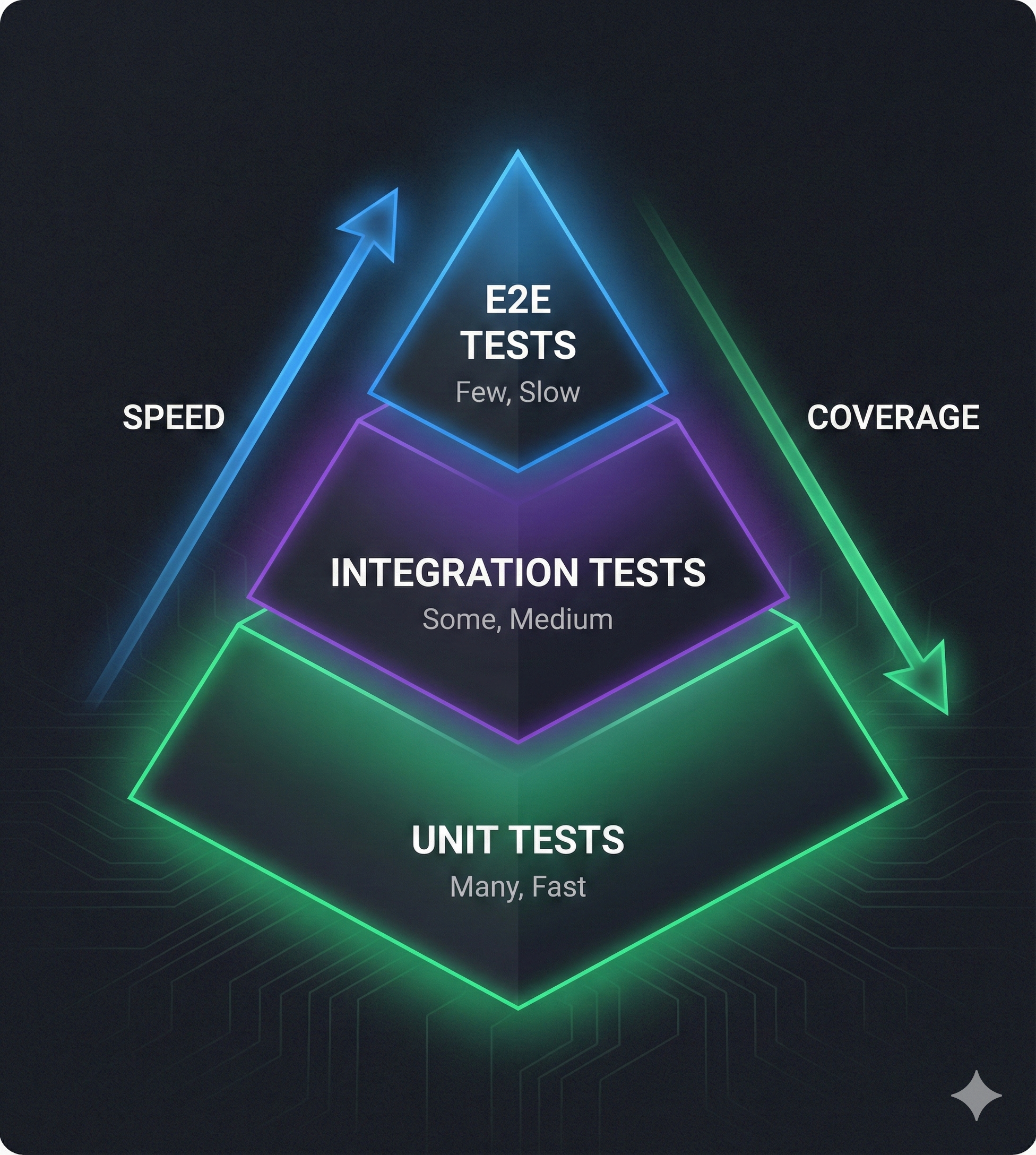

Unit tests verify individual functions or methods in isolation. Fast, focused, and plentiful, they form the base of the testing pyramid. Run them on every code change.

Integration tests check how components work together. Does your API correctly save data to the database? Do microservices communicate properly? Slower than unit tests but essential for catching interface mismatches.
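A small integration test might check that data-access code and a real database agree on SQL, schema, and constraints. The sketch below uses Python's built-in `sqlite3` with an in-memory database; `UserRepository` and its methods are hypothetical. A unit test with a mocked database would miss a typo in a column name or a missing `UNIQUE` constraint. This test would catch both.

```python
import sqlite3


class UserRepository:
    """Hypothetical data-access layer that saves users to SQLite."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users ("
            "id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
        )

    def add(self, email: str) -> int:
        cur = self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        self.conn.commit()
        return cur.lastrowid

    def find_email(self, user_id: int):
        row = self.conn.execute(
            "SELECT email FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


def test_saved_user_can_be_read_back():
    # A real (in-memory) database, not a mock: SQL, schema, and driver
    # are all exercised together.
    conn = sqlite3.connect(":memory:")
    try:
        repo = UserRepository(conn)
        user_id = repo.add("user@example.com")
        assert repo.find_email(user_id) == "user@example.com"
    finally:
        conn.close()


def test_duplicate_email_is_rejected_by_the_database():
    conn = sqlite3.connect(":memory:")
    try:
        repo = UserRepository(conn)
        repo.add("user@example.com")
        try:
            repo.add("user@example.com")
        except sqlite3.IntegrityError:
            pass  # the UNIQUE constraint did its job
        else:
            raise AssertionError("duplicate email was accepted")
    finally:
        conn.close()
```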

End-to-end (E2E) tests simulate real user workflows from start to finish. Login, search, add to cart, checkout. E2E tests are slow and brittle but catch issues no other test type can find. Use them sparingly for critical paths.

Regression tests ensure new changes don't break existing functionality. After fixing a bug or adding a feature, run your regression suite to verify everything still works. Strictly speaking, regression testing is a strategy rather than a separate type: you're re-running existing tests to catch regressions.

Smoke tests are quick sanity checks after deployment. Can users log in? Does the homepage load? Are critical APIs responding? Smoke tests answer "Is the system stable enough to test further?" Think of them as a health check.

Sanity tests are narrower than smoke tests. After a bug fix, you run sanity tests on just that feature to verify the fix works. You're not testing everything, just enough to confirm you haven't made things worse.

The difference? Smoke tests are broad and shallow. Sanity tests are narrow and deep. Regression tests are comprehensive.
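In practice, smoke, sanity, and regression runs are often subsets of one test code base, selected by tags. pytest, for instance, supports custom markers such as `@pytest.mark.smoke`, which you select with `pytest -m smoke`. The dependency-free sketch below imitates that idea with a hypothetical `tagged` decorator. The test names are illustrative and their bodies are placeholders.

```python
REGISTRY = []  # list of (tags, test function) pairs


def tagged(*tags):
    """Hypothetical decorator that records a test under one or more tags."""
    def decorator(func):
        REGISTRY.append((frozenset(tags), func))
        return func
    return decorator


# Test bodies are placeholders; a real suite would check the live system.
@tagged("smoke", "regression")
def test_homepage_loads():
    pass


@tagged("smoke", "regression")
def test_user_can_log_in():
    pass


@tagged("regression")
def test_discount_codes_stack_correctly():
    pass


@tagged("sanity", "regression")
def test_password_reset_bug_is_fixed():
    pass


def select(tag):
    """Names of the tests carrying `tag`, in registration order."""
    return [func.__name__ for tags, func in REGISTRY if tag in tags]


def run_tagged(tag):
    """Run every test carrying `tag` and return how many ran."""
    selected = [func for tags, func in REGISTRY if tag in tags]
    for func in selected:
        func()
    return len(selected)
```

The smoke run picks up only the two critical-path checks and the sanity run only the bug-fix check, while the regression run includes everything.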

Testing Levels: From Units to Systems

Testing levels describe the scope of what you're testing.

Component testing (unit level) isolates individual pieces. Test a function. Test a class. Test a module. No external dependencies.
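To keep a component test free of external dependencies, collaborators such as a database are usually replaced with test doubles. This sketch uses `unittest.mock.Mock` from Python's standard library. `greeting_for` and the `user_store` it depends on are hypothetical.

```python
from unittest.mock import Mock


def greeting_for(user_id, user_store):
    """Hypothetical component: builds a greeting from a user record."""
    user = user_store.get(user_id)
    if user is None:
        return "Welcome, guest"
    return f"Welcome back, {user['name']}"


def test_greeting_uses_stored_name():
    # The real user store (database, API, ...) is replaced by a Mock,
    # so only greeting_for's own logic is under test.
    store = Mock()
    store.get.return_value = {"name": "User"}
    assert greeting_for(42, store) == "Welcome back, User"
    store.get.assert_called_once_with(42)


def test_greeting_for_unknown_user():
    store = Mock()
    store.get.return_value = None
    assert greeting_for(7, store) == "Welcome, guest"
```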

Integration testing combines components. Test how your authentication service talks to the database. Test how the payment gateway integrates with your order processing system.

System testing treats the application as a complete black box. You don't care about internal structure; you're testing user-facing functionality. Login, search, reports, admin panels. System testing happens after integration testing.

Acceptance testing validates the system meets business requirements. Product owners, stakeholders, and sometimes real users participate. Does the software solve the problem it was built to solve? Acceptance testing determines if the system is ready for production.

Testing Approaches: Black Box, White Box, Gray Box

These approaches describe how much you know about the system's internals.

Black box testing treats the system as opaque. You know inputs and expected outputs. You don't know (or care) about internal logic, code structure, or algorithms. Most manual testing is black box.

White box testing requires full knowledge of the code. You test specific functions, branches, and logic paths. You write unit tests that achieve high code coverage. Developers typically perform white box testing.

Gray box testing combines both. You understand some internals (database schema, API contracts, architecture) but test from an external perspective. You leverage internal knowledge to design better tests without directly accessing the code.

Most QA engineers operate in the gray box. You understand the system well enough to create informed tests but verify behavior through user-facing interfaces.

Test Coverage and Why It Matters (and Doesn't)

Test coverage measures how much of your code is executed during testing. Common metrics include:

- Line coverage: Percentage of code lines executed

- Branch coverage: Percentage of decision branches tested

- Function coverage: Percentage of functions called
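The gap between line and branch coverage shows up even in a two-line function. In the hypothetical sketch below, the first test alone executes every line but never takes the `False` path of the `if`. Tools such as coverage.py report this only when branch measurement is enabled; for coverage.py, that means `coverage run --branch`.

```python
def apply_member_discount(price, is_member):
    """Hypothetical pricing rule: members get 10 off."""
    if is_member:
        price = price - 10
    return price


def test_member_price():
    # Alone, this gives 100% line coverage but only 50% branch coverage:
    # the path where `is_member` is False is never taken.
    assert apply_member_discount(100, True) == 90


def test_non_member_price():
    # Adding this test covers the remaining branch.
    assert apply_member_discount(100, False) == 100
```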

High coverage sounds good: 90% line coverage means your tests execute 90% of the lines in your codebase. The problem? Coverage measures execution, not quality.

You can have 100% coverage with terrible tests that assert nothing. You can have 60% coverage with excellent tests targeting critical paths and edge cases.
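Here is a sketch of that contrast, using a hypothetical bill-splitting function. Both tests execute every line, so a coverage report cannot tell them apart. Only the second would fail if the arithmetic were wrong.

```python
def split_bill(total_cents, people):
    """Hypothetical function: split a bill, giving leftover cents to the first payers."""
    if people <= 0:
        raise ValueError("people must be positive")
    share, remainder = divmod(total_cents, people)
    return [share + 1 if i < remainder else share for i in range(people)]


def test_split_bill_weak():
    # Runs every line (100% line coverage) yet would pass even if the
    # arithmetic were wrong, because nothing is checked.
    split_bill(1000, 3)
    try:
        split_bill(1000, 0)
    except ValueError:
        pass


def test_split_bill_strong():
    shares = split_bill(1000, 3)
    assert shares == [334, 333, 333]
    assert sum(shares) == 1000  # no cent lost or invented
    try:
        split_bill(1000, 0)
    except ValueError:
        pass
    else:
        raise AssertionError("zero people should be rejected")
```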

Coverage is a tool, not a goal. Use it to find untested code. Don't chase meaningless percentages.

Putting It All Together

You now speak the language of testing. When your manager asks for BDD scenarios, you know they want Given-When-Then specifications describing user behaviors. When someone mentions TDD, you understand they're writing tests before implementation to drive design.

You know the difference between smoke tests (quick critical path checks) and regression tests (comprehensive re-testing after changes). You understand why E2E tests sit at the top of the pyramid (valuable but expensive) while unit tests form the foundation.

Most importantly, you can communicate clearly with your team. You can discuss trade-offs between black box and white box approaches. You can explain why 100% test coverage isn't the goal.

In Chapter 1, we covered why testing matters and what QA engineers do. Now you have the vocabulary to talk about how. Next, we'll explore testing strategies and when to apply each approach.

FAQ

Q: Should I use TDD, BDD, or ATDD?

A: It depends on your role and project. Developers often prefer TDD for unit-level design. Teams with non-technical stakeholders benefit from BDD's natural language scenarios. Regulated industries or teams emphasizing collaboration lean toward ATDD. Many teams combine approaches: TDD for backend logic, BDD for user-facing features.

Q: What's the difference between integration testing and E2E testing?

A: Integration tests verify how components connect (API to database, service to service). E2E tests simulate complete user workflows across the entire system (login through checkout). Integration tests are narrower and faster. E2E tests are broader and slower.

Q: How do I know if I have enough test coverage?

A: Coverage metrics alone can't answer this. Instead, ask: Are critical paths tested? Are edge cases covered? Do tests catch bugs before production? If bugs reach production frequently, you need better tests, not necessarily more coverage.

Q: When should I write automated tests vs manual tests?

A: Automate tests that run frequently (regression, smoke) or require precision (data validation, calculations). Manual testing works better for exploratory testing, usability evaluation, and one-time scenarios. Automation has upfront cost but saves time long-term.

Q: Is Gherkin only for BDD?

A: Gherkin is most commonly associated with BDD frameworks like Cucumber, but you can use Given-When-Then format anywhere you need clear, structured test scenarios. It's a communication tool, not just a technical requirement.

Q: What should I test first on a new project?

A: Start with smoke tests covering critical user paths: can users log in, access core features, and complete key workflows? Then build out unit tests for business logic and integration tests for component connections. E2E tests come last, once the system stabilizes.

Course Navigation

Chapter 2 of 8: The Language of Testing

Next chapter: Chapter 3: Test Planning and Organization

Master the complete testing workflow from receiving requirements to reporting results. Learn how to write effective test cases, prioritize bugs, and manage test data with real-world examples.

Previous chapter: Chapter 1: Introduction to Software Testing. Learn what testing is and why it matters.