How to Plan and Organize Tests: A Step-by-Step Workflow

Quick summary: Learn how to transform vague requirements into comprehensive test scenarios. Master the complete workflow from receiving a feature to reporting results. Write effective test cases that catch edge cases and integration issues. Apply bug reporting best practices that get issues fixed faster.

Introduction

A developer sends you a Slack message: "New login feature ready for testing. It has social auth now. Can you test it?" You open the staging environment. You click around. It works. You report back: "Looks good!"

Three days later, production breaks. Users can't log in with Google on mobile Safari. The CEO is on the call. "Why didn't QA catch this?"

Proper test planning and organization would have prevented this. The difference between chaos and confidence isn't testing more; it's testing with a system. A clear workflow transforms ad-hoc clicking into structured validation that catches bugs before they reach customers.

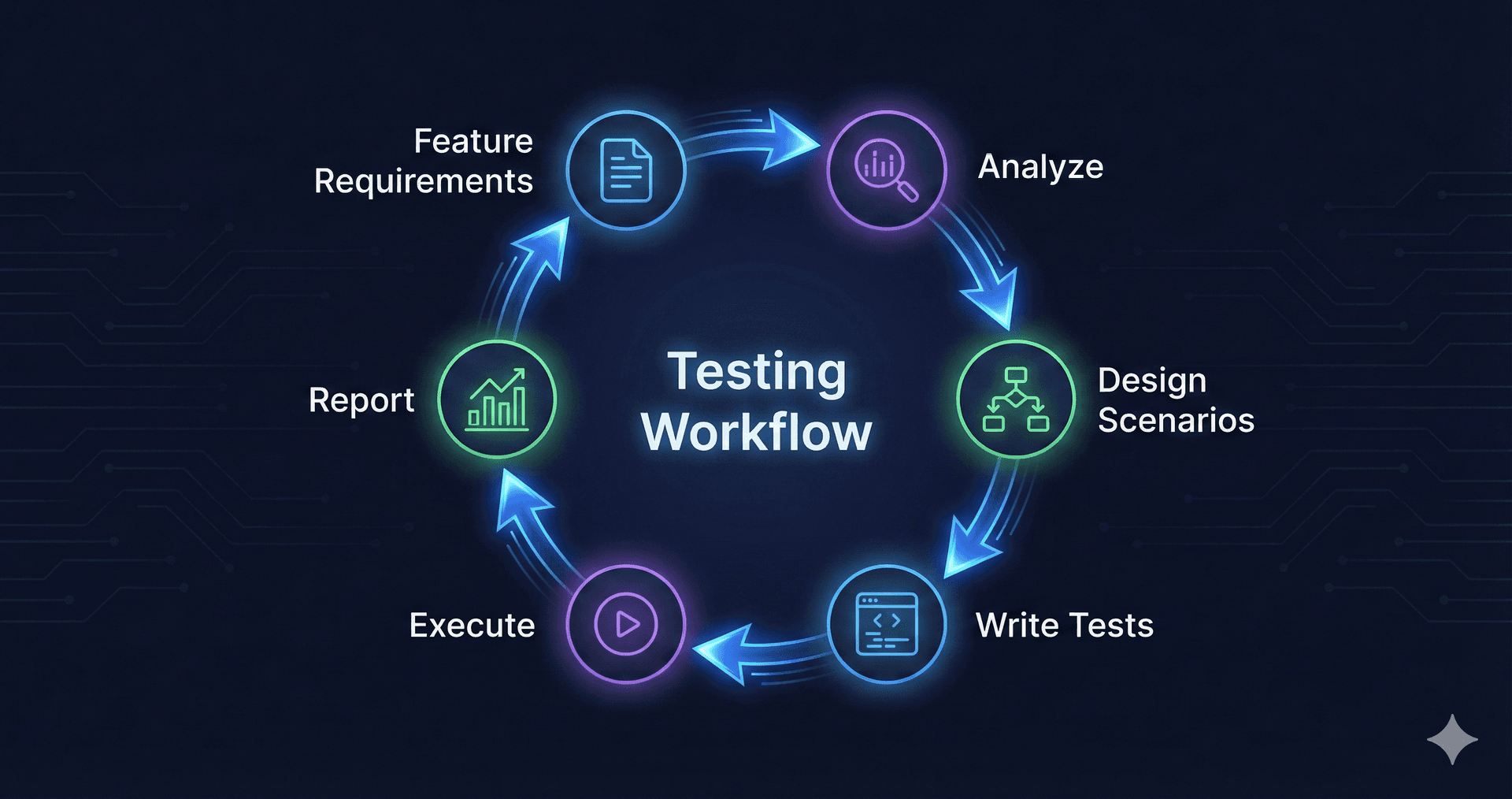

The Testing Workflow: From Requirements to Results

Let's walk through testing that same login feature, but this time, with structure.

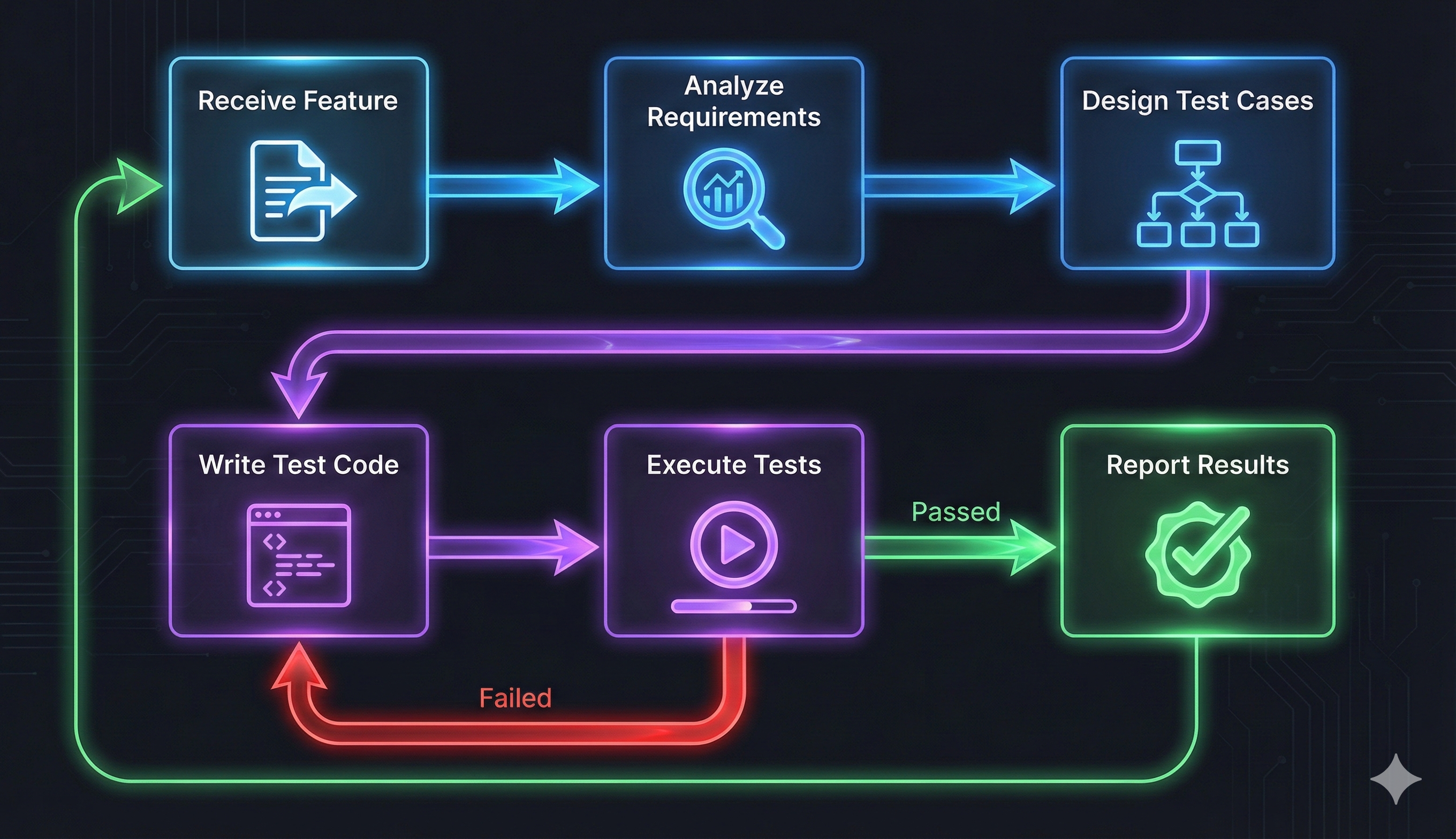

Receive and Analyze Requirements

The developer says "social auth." That's not a requirement; it's a feature name. You dig deeper:

What you need to know:

- Which social providers? (Google, Facebook, GitHub?)

- What happens to existing users who sign in via social auth for the first time?

- Does it work on mobile and desktop?

- What about users who already have accounts with the same email?

- Are there rate limits or error states to handle?

The developer clarifies: "Google OAuth only. New users create accounts automatically. Existing users with matching emails should merge accounts. Desktop and mobile web, iOS and Android apps."

Now you have something to work with. The difference between good QA and great QA starts here, before you write a single test case. Many critical bugs trace back to incomplete requirements analysis.
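One way to keep the clarified scope from getting lost is to expand it into an explicit coverage matrix. The sketch below is only an illustration: the platform and account-state labels are assumptions paraphrased from the developer's answer, and `coverage_matrix` is a hypothetical helper, not part of any tool.

```python
from itertools import product

# Hypothetical labels paraphrased from the developer's clarification.
PLATFORMS = ["desktop web", "mobile web", "iOS app", "Android app"]
ACCOUNT_STATES = ["new user", "existing user, matching email"]


def coverage_matrix(platforms, account_states):
    """Return every (platform, account_state) pair that needs at least one test."""
    return list(product(platforms, account_states))


matrix = coverage_matrix(PLATFORMS, ACCOUNT_STATES)
for platform, state in matrix:
    print(f"Google OAuth | {platform} | {state}")
```

One sentence of clarification expands into eight combinations. The mobile web rows are where a failure like the mobile Safari incident from the introduction would surface.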

Design Test Scenarios

Test scenarios are the "what" you're testing. Test cases are the "how." Start broad, then drill down.

Happy path scenarios prove the feature works as intended:

- User with no account clicks "Sign in with Google" and creates a new account

- User with existing email clicks "Sign in with Google" and merges accounts seamlessly

- User signs in successfully on desktop Chrome, mobile Safari, and native apps

Edge cases test boundaries and unusual conditions:

- User cancels Google OAuth flow midway

- User's Google account has no email address (yes, this happens)

- User signs in with Google, then tries to set a password manually

- Session expires during the OAuth callback

Negative tests validate error handling:

- Google OAuth service is down (simulate by blocking requests to Google's OAuth endpoints; network throttling alone only slows them down)

- User denies email permission during Google consent

- Duplicate account merge fails due to conflicting user data

- Rate limiting kicks in after multiple failed attempts

Most testers stop after happy paths. The bugs live in the edges.
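A quick way to check that you have not stopped at happy paths is to tag each scenario with its category and look for empty categories. This is a minimal sketch; the `Scenario` class and `missing_categories` function are hypothetical names, and the list holds only a sample of the scenarios above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    category: str  # "happy", "edge", or "negative"
    description: str


SCENARIOS = [
    Scenario("happy", "New user signs in with Google and gets an account"),
    Scenario("happy", "Existing email signs in with Google and accounts merge"),
    Scenario("edge", "User cancels the Google OAuth flow midway"),
    Scenario("edge", "Session expires during the OAuth callback"),
    Scenario("negative", "User denies email permission during consent"),
    Scenario("negative", "Rate limiting kicks in after repeated failures"),
]

REQUIRED_CATEGORIES = ("happy", "edge", "negative")


def missing_categories(scenarios):
    """Return the required categories that have no scenario yet."""
    counts = Counter(s.category for s in scenarios)
    return [c for c in REQUIRED_CATEGORIES if counts[c] == 0]


happy_only = [s for s in SCENARIOS if s.category == "happy"]
print(missing_categories(happy_only))
```

A plan that contains only happy paths reports the edge and negative categories as missing, which is the gap this section warns about.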

Write Detailed Test Cases

A test case is a precise, repeatable instruction set. Anyone on your team should be able to execute it and get the same result.

Bad test case:

- Title: Test Google login

- Steps: Try to log in with Google

- Expected: It works

Good test case:

    {
      "id": "TC-AUTH-001",
      "title": "New user account creation via Google OAuth",
      "priority": "P0",
      "prerequisites": [
        "User is logged out",
        "No existing account with test email exists",
        "Google OAuth is enabled in environment config"
      ],
      "test_data": {
        "google_account": "testuser@gmail.com",
        "expected_user_id_format": "google_[hash]"
      },
      "steps": [
        {
          "step": 1,
          "action": "Navigate to /login",
          "expected": "Login page displays with 'Sign in with Google' button"
        },
        {
          "step": 2,
          "action": "Click 'Sign in with Google' button",
          "expected": "Google OAuth consent screen opens in new window"
        },
        {
          "step": 3,
          "action": "Select testuser@gmail.com and approve permissions",
          "expected": "Redirect to /dashboard with success message"
        },
        {
          "step": 4,
          "action": "Verify user profile shows Google avatar and email",
          "expected": "Profile displays: testuser@gmail.com, Google avatar image"
        },
        {
          "step": 5,
          "action": "Check database for new user record",
          "expected": "User record created with auth_provider='google'"
        }
      ],
      "actual_result": "",
      "status": "Not Executed",
      "notes": ""
    }

Notice what makes this effective:

- Clear prerequisites set up the test environment

- Test data is specified, not generic

- Each step has an explicit expected result

- Verification happens at multiple layers (UI, backend, database)

The fifth step is what separates thorough testing from surface-level clicking. You're not just checking whether it "looks like it works"; you're verifying that the system state changed correctly.
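The qualities listed above can be checked mechanically before a test case is accepted into the suite. The following is a rough sketch, assuming test cases are stored as dictionaries with the same field names as the JSON example; `lint_test_case` is a hypothetical helper.

```python
def lint_test_case(case):
    """Return a list of problems found in a test-case dict; empty means it passes."""
    problems = []
    if not case.get("prerequisites"):
        problems.append("no prerequisites listed")
    if not case.get("test_data"):
        problems.append("no test data specified")
    steps = case.get("steps", [])
    if not steps:
        problems.append("no steps")
    for step in steps:
        # Every step needs its own explicit expected result.
        if not step.get("expected", "").strip():
            problems.append(f"step {step.get('step')} has no expected result")
    return problems


vague_case = {
    "title": "Test Google login",
    "steps": [{"step": 1, "action": "Try to log in with Google", "expected": ""}],
}
precise_case = {
    "title": "New user account creation via Google OAuth",
    "prerequisites": ["User is logged out"],
    "test_data": {"google_account": "testuser@gmail.com"},
    "steps": [
        {
            "step": 1,
            "action": "Navigate to /login",
            "expected": "Login page displays with 'Sign in with Google' button",
        },
    ],
}

print(lint_test_case(vague_case))
print(lint_test_case(precise_case))
```

A check like this cannot judge whether an expected result is correct, only whether one was written down. It still catches the "Expected: It works" style of test case early.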

Execute Tests Systematically

Execution isn't about speed. It's about observation.

When you execute TC-AUTH-001:

- Step 1 passes. Login page renders.

- Step 2 passes. OAuth window opens.

- Step 3... the redirect goes to /login instead of /dashboard.

Don't skip ahead. Document exactly what happened:

    {
      "actual_result": "After OAuth approval, redirected to /login with error toast: 'Email already in use'",
      "status": "Failed",
      "notes": "Bug: System doesn't recognize this is the same user. Account merge logic not working."
    }

You found the bug. It lives on the account merge path, not the new account path. If you had just clicked around randomly, you might have missed it, or found it without being able to explain how to reproduce it.
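The "don't skip ahead" rule can be expressed as a small loop that records every observation and stops at the first failing step. This is a sketch under stated assumptions: `execute` and `observe` are hypothetical names, and the `observe` callback stands in for whatever the tester actually sees at each step.

```python
def execute(steps, observe):
    """Run steps in order, record each observation, and stop at the first failure.

    `observe` is a hypothetical callback returning (passed, actual_text)
    for a step; in manual testing it represents the tester's observation.
    """
    log = []
    for step in steps:
        passed, actual = observe(step)
        log.append({"step": step["step"], "passed": passed, "actual": actual})
        if not passed:
            return {"status": "Failed", "failed_step": step["step"], "log": log}
    return {"status": "Passed", "failed_step": None, "log": log}


steps = [{"step": n} for n in range(1, 6)]


def observe(step):
    # Simulated observations matching the walkthrough: step 3 fails.
    if step["step"] == 3:
        return False, "Redirected to /login with error toast: 'Email already in use'"
    return True, "As expected"


result = execute(steps, observe)
print(result["status"], "at step", result["failed_step"])
```

Steps 4 and 5 never run, so the log contains exactly the three observations needed to reproduce the failure.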

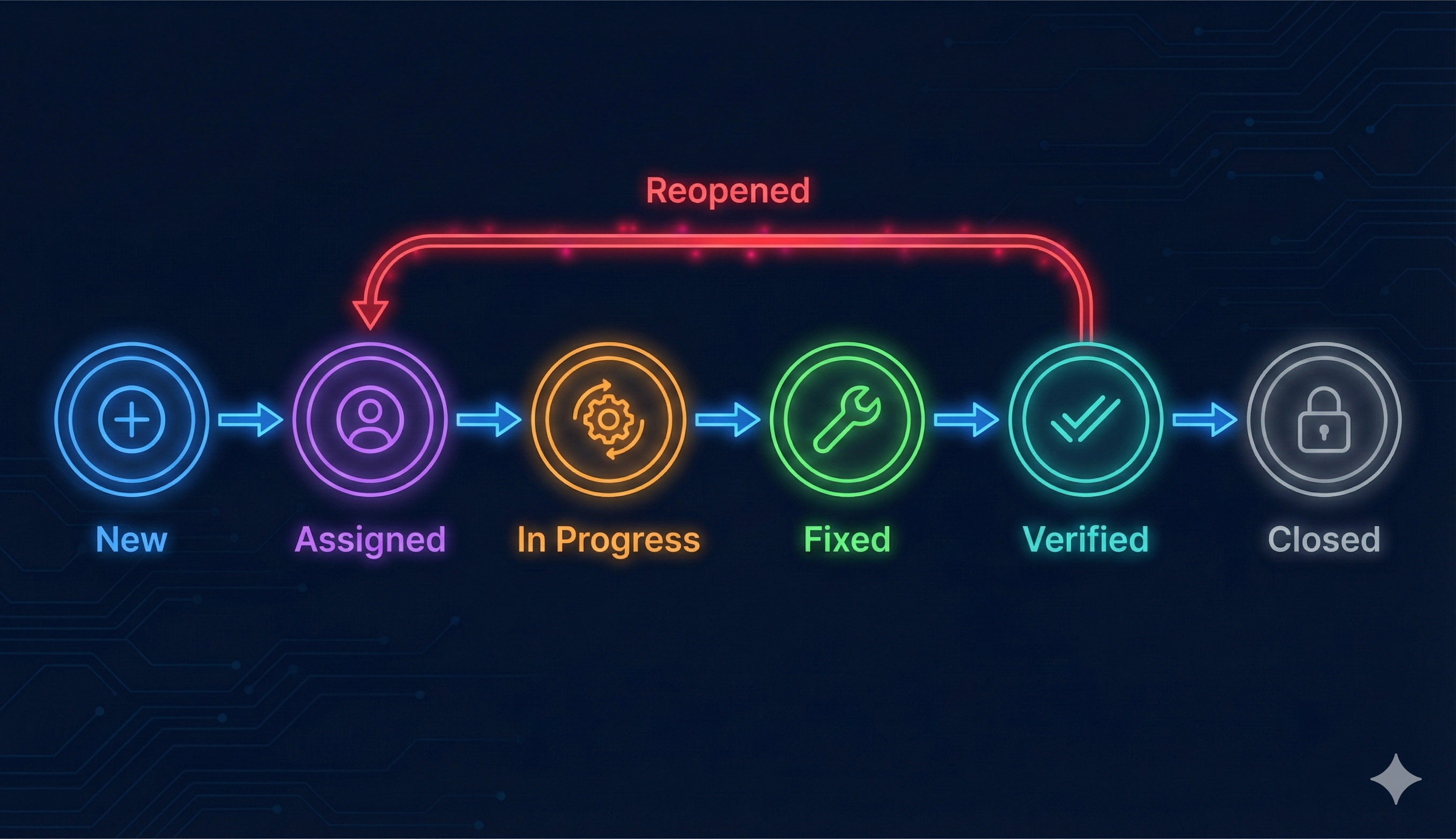

Report Results and Track Bugs

Bug reports are your professional reputation in document form. Vague reports waste developer time. Precise reports get bugs fixed.

Bad bug report:

- Title: Login broken

- Description: I tried to log in and it didn't work

Good bug report:

Bug ID: BUG-AUTH-042

Title: Google OAuth account merge fails for existing email addresses

Priority: P0 (Blocks production release)

Environment: Staging (v2.3.0-rc1)

Browser: Chrome 120.0 (also reproduces in Safari 17.1)

Steps to Reproduce:

1. Create account manually with email: testuser@gmail.com, password: Test123!

2. Log out

3. Click "Sign in with Google"

4. Authenticate with Google account: testuser@gmail.com

5. Observe redirect behavior

Expected Result:

- User is redirected to /dashboard

- Existing account is merged with Google OAuth

- User can now log in with either password or Google

Actual Result:

- User is redirected back to /login

- Error toast displays: "Email already in use"

- No account merge occurs

- User is stuck - cannot use Google auth or password auth

Impact:

- Blocks all existing users from using Google OAuth

- Affects ~40% of user base (users who signed up via email)

- Production blocker for v2.3.0 release

Additional Context:

- Database shows two user records with same email after reproduction

- Console error: "Unique constraint violation on users.email"

- Related test case: TC-AUTH-001 (steps 1-3)

Attachments:

- Screenshot: error-toast.png

- Console logs: console-output.txt

- Network HAR file: oauth-callback.har

The developer reads this and knows exactly what to fix. No back-and-forth. No "I can't reproduce it." No wasted time.
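The gap between the two reports can be made concrete with a completeness check run before a report is filed. This is a minimal sketch; the field names and the `missing_fields` helper are assumptions for illustration and do not correspond to any particular bug tracker's schema.

```python
REQUIRED_FIELDS = (
    "title",
    "priority",
    "environment",
    "steps_to_reproduce",
    "expected_result",
    "actual_result",
)


def missing_fields(report):
    """Return required fields that are absent or empty in a bug-report dict."""
    return [field for field in REQUIRED_FIELDS if not report.get(field)]


bad_report = {
    "title": "Login broken",
    "description": "I tried to log in and it didn't work",
}
good_report = {
    "title": "Google OAuth account merge fails for existing email addresses",
    "priority": "P0",
    "environment": "Staging (v2.3.0-rc1)",
    "steps_to_reproduce": [
        "Create account manually with email testuser@gmail.com",
        "Log out",
        "Click 'Sign in with Google'",
        "Authenticate with Google account testuser@gmail.com",
        "Observe redirect behavior",
    ],
    "expected_result": "User is redirected to /dashboard",
    "actual_result": "User is redirected back to /login",
}

print(missing_fields(bad_report))
print(missing_fields(good_report))
```

The bad report is missing five of the six required fields, which is why it triggers the back-and-forth that the good report avoids.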

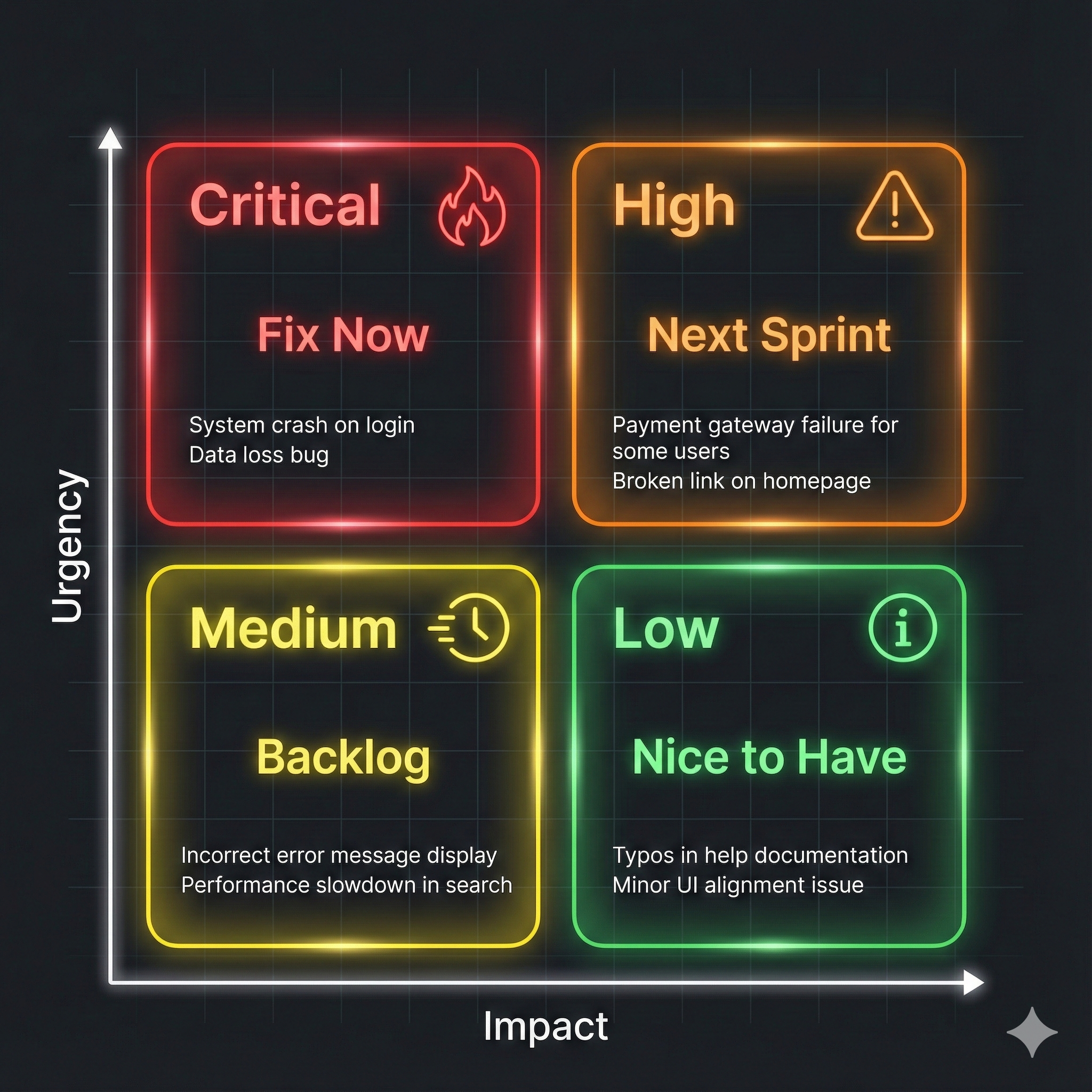

Prioritization: Not All Bugs Are Equal

You've found twelve bugs. The release is in three days. Which ones must be fixed, and which can wait?

P0 (Critical) - High severity, high frequency:

- Authentication completely broken for 40% of users

- Payment processing fails on checkout

- Data loss or corruption bugs

These block releases. Drop everything.

P1 (High) - High severity, low frequency OR low severity, high frequency:

- Rare crash on specific Android devices

- Confusing error message that affects every user

- Performance degradation under load

Fix before release if possible. Document workarounds if not.

P2 (Medium) - Low severity, low frequency:

- Visual misalignment on small screens

- Tooltip text has typo

- Edge case that requires 8 specific conditions

Ship with these. Fix in the next sprint.

The account merge bug? That's P0. The Google consent screen has a slightly outdated logo? That's P2.
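The three levels above reduce to a simple rule: count how many of severity and frequency are high. The sketch below encodes that rule; the `priority` function is a hypothetical helper, and real triage usually involves more judgment than two binary inputs.

```python
def priority(severity, frequency):
    """Map severity and frequency ("high" or "low") to P0, P1, or P2."""
    levels = {"high", "low"}
    if severity not in levels or frequency not in levels:
        raise ValueError("severity and frequency must be 'high' or 'low'")
    high_count = [severity, frequency].count("high")
    # Both high: P0. Exactly one high: P1. Neither: P2.
    return {2: "P0", 1: "P1", 0: "P2"}[high_count]


# Account merge failure: blocks login, hits every affected existing user.
print(priority("high", "high"))
# Outdated logo on the consent screen: cosmetic, treated here as rarely noticed.
print(priority("low", "low"))
```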

Test Data Management: The Invisible Foundation

Your test cases are only as good as your test data. The Google OAuth bug? It only appeared because you had an existing user with that email address.

Test data strategy:

- Fresh environment for happy path tests (clean slate, no conflicts)

- Pre-seeded data for edge cases (existing users, filled databases, conflicting records)

- Boundary values for validation tests (empty strings, max lengths, special characters)

For the login feature, you need:

- A Google account that's never been used in the system

- A Google account that has been used in the system

- An existing email/password user with the same email

- An account with maximum profile data (long names, special characters)

Without test data, you're testing in a vacuum. With the right test data, you're testing reality.
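The data needs above can be written down as seed records plus a boundary-value generator. This is a sketch only: the email addresses are placeholders, the 50-character limit is an assumed value, and `account_state` and `boundary_strings` are hypothetical helpers.

```python
# Hypothetical seed records; the addresses are placeholders.
SEED_USERS = [
    {"email": "existing.user@example.com", "auth_provider": "password"},
    {"email": "returning.google@example.com", "auth_provider": "google"},
]


def account_state(email, users):
    """Classify an email against seeded users: 'unused', 'google', or 'password'."""
    for user in users:
        if user["email"] == email:
            return user["auth_provider"]
    return "unused"


def boundary_strings(max_length):
    """Return boundary-value inputs for a text field with the given max length."""
    return {
        "empty": "",
        "single_char": "a",
        "at_max": "a" * max_length,
        "over_max": "a" * (max_length + 1),
        "special_chars": "Zoë O'Brien-Núñez",
    }


# The account conditions the login feature needs are all representable.
for email in (
    "never.seen@example.com",
    "returning.google@example.com",
    "existing.user@example.com",
):
    print(email, "->", account_state(email, SEED_USERS))

# Assumed limit of 50 characters for a profile name field.
name_inputs = boundary_strings(50)
```

Keeping seed data in one place makes it obvious when a condition, such as an existing password user with a matching email, has no record behind it.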

Common Pitfalls and How to Avoid Them

Pitfall 1: Testing only on your machine

Your MacBook Pro with 32GB RAM and fiber internet is not your user's 2019 Android phone on 3G. Test on real devices, real networks, real constraints.

Pitfall 2: Assuming the developer tested it

They tested the happy path. On their machine. With the debugger attached. Your job is to test everything else.

Pitfall 3: Not retesting after bug fixes

The developer fixed the account merge bug. Does Google OAuth still work for new users? Regression testing catches when fixing one thing breaks another.
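One lightweight way to decide what to rerun is to tag each test case with the areas it touches and select every case that overlaps with the fix. In this sketch the area tags, the IDs TC-AUTH-002 and TC-AUTH-003, and the `regression_set` helper are all hypothetical.

```python
# Hypothetical mapping of test case IDs to the areas they exercise.
TEST_CASES = {
    "TC-AUTH-001": {"new-account", "oauth-callback"},
    "TC-AUTH-002": {"account-merge", "oauth-callback"},
    "TC-AUTH-003": {"password-login"},
}


def regression_set(changed_areas, test_cases):
    """Return IDs of test cases that touch any changed area, sorted for stable output."""
    changed = set(changed_areas)
    return sorted(tc for tc, areas in test_cases.items() if areas & changed)


# Assume the merge fix touched the merge logic and the shared OAuth callback.
print(regression_set(["account-merge", "oauth-callback"], TEST_CASES))
```

Because the new-account test shares the OAuth callback with the merge path, it lands in the rerun set even though the bug was reported against merging.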

Pitfall 4: Writing test cases during execution

You're clicking through the app, finding bugs, but you have no idea what you've already tested. Write test cases first. Execute them second. Document everything.

Pitfall 5: Treating test cases as checklists

Test cases are starting points, not prisons. If you notice something odd during execution, even if it's not in the test case, investigate it. Scripted testing finds expected bugs. Exploratory testing finds surprises.

Bringing It All Together

Let's revisit that opening scenario. The developer says: "New login feature ready. Social auth. Can you test it?"

Without a workflow:

- Click around for 10 minutes

- Report "looks good"

- Production breaks

- Blame game begins

With a workflow:

- Clarify requirements (5 minutes)

- Design 8 test scenarios covering happy paths, edges, and negatives (15 minutes)

- Write 12 detailed test cases (30 minutes)

- Execute systematically on 5 devices/browsers (2 hours)

- Find the account merge bug at step 3 of TC-AUTH-001

- Report bug with full reproduction steps (10 minutes)

- Bug gets fixed before release

- Users never experience the issue

The workflow took about three hours instead of ten minutes. It also prevented a production incident, emergency fixes, and angry customers.

What's Next

You now understand the complete testing workflow, from vague requirements to precise bug reports. But workflow is just the foundation. Next, you'll learn about test automation frameworks and which tools to choose for your testing needs.

Course Navigation

Chapter 3 of 8: Test Planning and Organization ✓
