Test Automation Frameworks: Playwright, Selenium, Cypress, and Appium Compared

Introduction

You run 500 test cases manually every sprint. Each takes 8 minutes. That's nearly 67 hours of clicking buttons, filling forms, and checking outputs. Your team decides to adopt a test automation framework. Now the suite runs in 20 minutes.

Except a significant portion of tests fail randomly. You spend considerable time debugging why a test that passed yesterday fails today. The button selector changed. The API response is 50ms slower. A modal animation interferes with a click. You're maintaining tests instead of writing them.

This is the automation paradox. Tests save time when they work. According to our State of QA 2025 report, most teams cite "flaky tests and maintenance burden" as their biggest automation challenge. The promise was less work. The reality is different work.

The question isn't whether to automate. It's what to automate, which framework to use, and how to avoid the maintenance trap.

The real challenge isn't picking a framework. It's writing tests that don't break every sprint. This guide shows you how.

You can also follow along with the code in the accompanying GitHub repository.

When to Automate vs When to Test Manually

You can't automate everything. Trying to automate everything guarantees failure.

Manual testing excels at exploration. When you don't know what you're looking for (edge cases, usability issues, visual inconsistencies), human intuition outperforms scripts. Manual testing is also fast for one-off scenarios: testing a hotfix, exploring a prototype, or validating something that happens once.

Automation excels at repetition. Run the same 100 scenarios every deployment? Automate them. Regression tests that verify existing functionality? Automate. Tests that run in CI/CD pipelines? Must automate. Tests that require precise timing or complex data setups? Automation handles this better than humans.

The mistake is automating tests that change frequently. If your UI redesigns every quarter, automating pixel-perfect visual tests creates maintenance hell. If your API contracts shift weekly, brittle integration tests break constantly. Automate the stable parts first. Leave the volatile parts manual until they stabilize.
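One rough way to reason about "stable enough to automate" is a break-even estimate. The sketch below is an illustration only: the function name, the cost model, and the minute figures are assumptions made up for this example, not numbers from the report.

```python
def automation_break_even_runs(manual_minutes: float,
                               build_minutes: float,
                               maintenance_minutes_per_run: float) -> float:
    """Return how many runs it takes before automating a test pays off.

    All inputs are estimates you supply. The model is deliberately simple:
    each automated run saves `manual_minutes` but costs some maintenance.
    """
    saving_per_run = manual_minutes - maintenance_minutes_per_run
    if saving_per_run <= 0:
        # Maintenance eats the whole saving: automation never pays off.
        return float("inf")
    return build_minutes / saving_per_run


# A stable test: 8 minutes by hand, 120 minutes to automate, 1 minute of upkeep per run.
stable = automation_break_even_runs(8, 120, 1)
# A volatile test: same effort, but 9 minutes of upkeep per run.
volatile = automation_break_even_runs(8, 120, 9)
print(stable, volatile)
```

Under these assumed numbers the stable test pays for itself after about 17 runs, while the volatile one never does. That is the argument for leaving volatile areas manual until they settle.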

Web Testing Frameworks: Playwright, Selenium, and Cypress

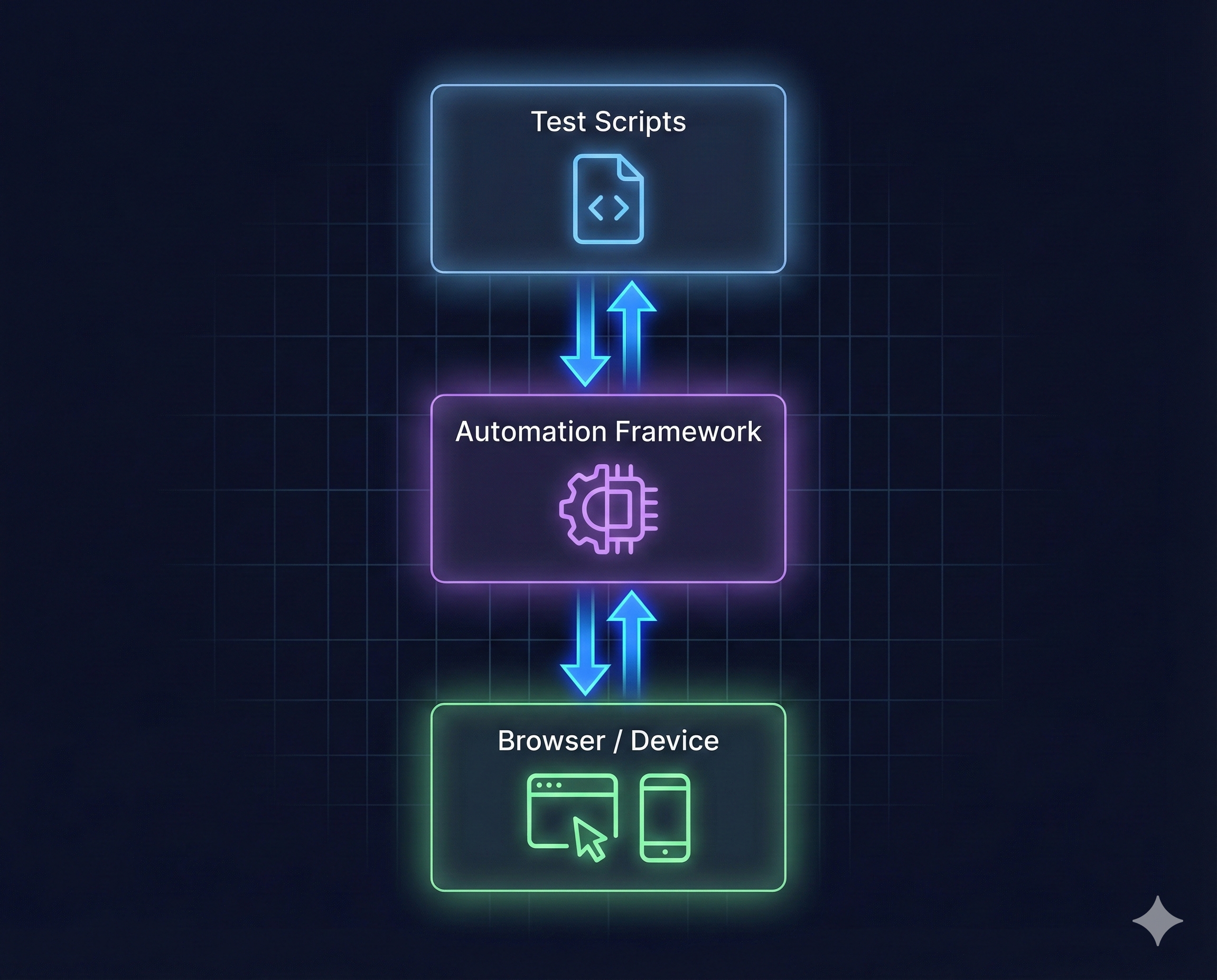

Three frameworks dominate web testing. They take different approaches to the same problem: controlling a browser programmatically.

Selenium emerged in 2004. It's mature, supports every major language (Python, Java, JavaScript, C#, Ruby), and works with Chrome, Firefox, Safari, and Edge. Its architecture uses the WebDriver protocol, a W3C standard for browser automation. This means broad compatibility. It also means overhead. Every action requires JSON serialization, an HTTP request, and browser-side deserialization. As a result, Selenium tests tend to be slower and more prone to timing issues.
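To make that overhead concrete, here is a minimal sketch of what a single "find element" command looks like on the wire under the W3C WebDriver protocol. It only builds the HTTP request and never sends it. The server address and session id are placeholder assumptions, and the helper name is hypothetical.

```python
import json
from urllib.request import Request


def build_find_element_request(server_url: str, session_id: str, css: str) -> Request:
    """Build (but do not send) the HTTP request for a W3C 'Find Element' command."""
    payload = {"using": "css selector", "value": css}
    return Request(
        url=f"{server_url}/session/{session_id}/element",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_find_element_request(
    "http://localhost:4444",   # assumed address of a local WebDriver server
    "example-session-id",      # hypothetical session id
    '[data-testid="email"]',
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Every click, keystroke, and lookup in a Selenium test becomes a round trip like this one, which is where the extra latency comes from.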

Cypress arrived in 2017 with a different architecture. Instead of external control via WebDriver, Cypress runs inside the browser alongside your application. This makes tests faster and more reliable because there is no network hop between test and app. The developer experience is exceptional: automatic waiting, time-travel debugging, and intuitive APIs. The limitation? Cypress only works for web apps. No mobile testing. No multi-tab testing. No cross-domain testing (mostly). If your testing stays within web boundaries, Cypress is compelling.

Playwright launched in 2020 from Microsoft. It learned from Selenium's slowness and Cypress's limitations. Playwright uses modern browser automation protocols (Chrome DevTools Protocol for Chromium, and comparable protocols for Firefox and WebKit). It's faster than Selenium and more capable than Cypress. Playwright handles multi-tab scenarios, cross-domain testing, and mobile browser emulation. It waits automatically for elements to be actionable, which reduces flakiness from timing issues. Tests written in Playwright tend to be more stable.

Which should you use? Playwright is the best default for new projects: it is faster, more reliable, and actively developed. Selenium makes sense if you need a language Playwright does not officially support, such as Ruby, or if you maintain existing Selenium suites. Cypress wins for pure web testing with an emphasis on developer experience.

Mobile Testing: Appium

Web frameworks don't work for mobile apps. Mobile apps don't run in browsers. They run natively on iOS and Android. Appium bridges this gap.

Appium uses the WebDriver protocol (like Selenium) to control mobile devices and simulators. Write tests once and run them on both iOS and Android, in theory. In practice, iOS and Android differ enough that tests require platform-specific adjustments. Appium tests use element locators (accessibility IDs, XPath) just like web tests. They interact with native components: tap buttons, swipe screens, verify text.

The setup is complex. You need Xcode for iOS simulators, Android Studio for Android emulators, plus the Appium server and device drivers. Debugging mobile tests is harder than debugging web tests: emulators crash, timing issues are worse, and element inspection tools are less mature.

Despite the complexity, Appium dominates mobile automation because the alternative is worse: maintaining separate test suites for iOS and Android in native test frameworks (XCUITest and Espresso). If you test mobile apps, Appium is the standard.

API Testing: Postman and REST Assured

Before testing UIs, test APIs. API tests are faster and more stable.

Postman provides visual API testing, useful for non-programmers. REST Assured (Java), Requests (Python), and SuperTest (JavaScript) offer code-based API testing with version control and complex logic support. Choose based on team preference: visual tools for accessibility, code-based for power users.
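As a rough illustration of a code-based API test, the sketch below uses only the Python standard library. It starts a tiny fake service on a free local port, calls it, and asserts on the status code and JSON body. The `/api/health` route, the handler, and the response shape are all hypothetical stand-ins for your real API. In practice you would likely use Requests or a similar client against a real environment.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import ProxyHandler, build_opener


class FakeApiHandler(BaseHTTPRequestHandler):
    """Stand-in for a real service; the /api/health route is hypothetical."""

    def do_GET(self):
        if self.path == "/api/health":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def check_health_endpoint() -> dict:
    """Start the fake API on a free port, call it, and return the parsed JSON."""
    server = HTTPServer(("127.0.0.1", 0), FakeApiHandler)  # port 0 = pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    opener = build_opener(ProxyHandler({}))  # bypass any configured HTTP proxy
    try:
        url = f"http://127.0.0.1:{server.server_port}/api/health"
        with opener.open(url, timeout=5) as response:
            assert response.status == 200
            assert response.headers["Content-Type"] == "application/json"
            return json.loads(response.read())
    finally:
        server.shutdown()
        server.server_close()


result = check_health_endpoint()
assert result == {"status": "ok"}
```

No browser starts and nothing renders, which is why tests at this level run in milliseconds and rarely flake.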

Setting Up Your First Automation Project

Let's build a real test. We'll create a login test in Playwright (TypeScript) and Selenium (Python) to show the differences.

Playwright Setup:

    npm init playwright@latest

This creates a complete project structure with config files and example tests. Now write a login test:

    import { test, expect } from '@playwright/test';

    test('user can log in successfully', async ({ page }) => {
      // Navigate to login page
      await page.goto('https://example.com/login');

      // Fill credentials
      await page.fill('[data-testid="email"]', 'user@example.com');
      await page.fill('[data-testid="password"]', 'SecurePass123');

      // Click login button
      await page.click('[data-testid="login-button"]');

      // Verify successful login
      await expect(page).toHaveURL(/.*dashboard/);
      await expect(page.locator('[data-testid="welcome-message"]'))
        .toContainText('Welcome back');
    });

Run it with npx playwright test. Playwright automatically waits for elements to be ready before interacting. No manual waits needed.

Selenium Setup (Python):

    pip install selenium

Recent Selenium releases (4.6 and later) ship with Selenium Manager, which resolves the browser driver automatically. On older versions, download ChromeDriver or use webdriver-manager to handle drivers:

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC


    def test_user_login():
        # Initialize driver
        driver = webdriver.Chrome()
        try:
            # Navigate to login page
            driver.get('https://example.com/login')

            # Wait for and fill credentials
            email_field = WebDriverWait(driver, 10).until(
                EC.presence_of_element_located((By.CSS_SELECTOR, '[data-testid="email"]'))
            )
            email_field.send_keys('user@example.com')

            password_field = driver.find_element(By.CSS_SELECTOR, '[data-testid="password"]')
            password_field.send_keys('SecurePass123')

            # Click login button
            login_button = driver.find_element(By.CSS_SELECTOR, '[data-testid="login-button"]')
            login_button.click()

            # Verify successful login
            WebDriverWait(driver, 10).until(
                EC.url_contains('dashboard')
            )
            welcome_message = driver.find_element(By.CSS_SELECTOR, '[data-testid="welcome-message"]')
            assert 'Welcome back' in welcome_message.text
        finally:
            # Always close the browser, even if an assertion fails
            driver.quit()

Notice the difference? Selenium requires explicit waits (WebDriverWait) and try-finally blocks to ensure cleanup. Playwright's test runner handles waiting and browser cleanup for you.

Appium Mobile Test:

For mobile, the setup is more involved:

    const assert = require('assert');
    const { remote } = require('webdriverio');

    const capabilities = {
      platformName: 'iOS',
      'appium:deviceName': 'iPhone 14',
      'appium:platformVersion': '16.0',
      'appium:app': '/path/to/YourApp.app',
      'appium:automationName': 'XCUITest'
    };

    async function testMobileLogin() {
      // Connect to a locally running Appium server
      const driver = await remote({
        hostname: 'localhost',
        port: 4723,
        capabilities
      });

      try {
        // Find and interact with mobile elements
        const emailField = await driver.$('~email-input'); // accessibility ID
        await emailField.setValue('user@example.com');

        const passwordField = await driver.$('~password-input');
        await passwordField.setValue('SecurePass123');

        const loginButton = await driver.$('~login-button');
        await loginButton.click();

        // Verify login success
        const welcomeText = await driver.$('~welcome-message');
        const text = await welcomeText.getText();
        assert(text.includes('Welcome back'));
      } finally {
        // Always end the session so the simulator is released
        await driver.deleteSession();
      }
    }

    testMobileLogin().catch(console.error);

Mobile tests use accessibility IDs (~element-id) instead of CSS selectors. This requires developers to add accessibility labels to UI components.

Common Automation Challenges and Solutions

Real automation fails for predictable reasons. Here's how to avoid them.

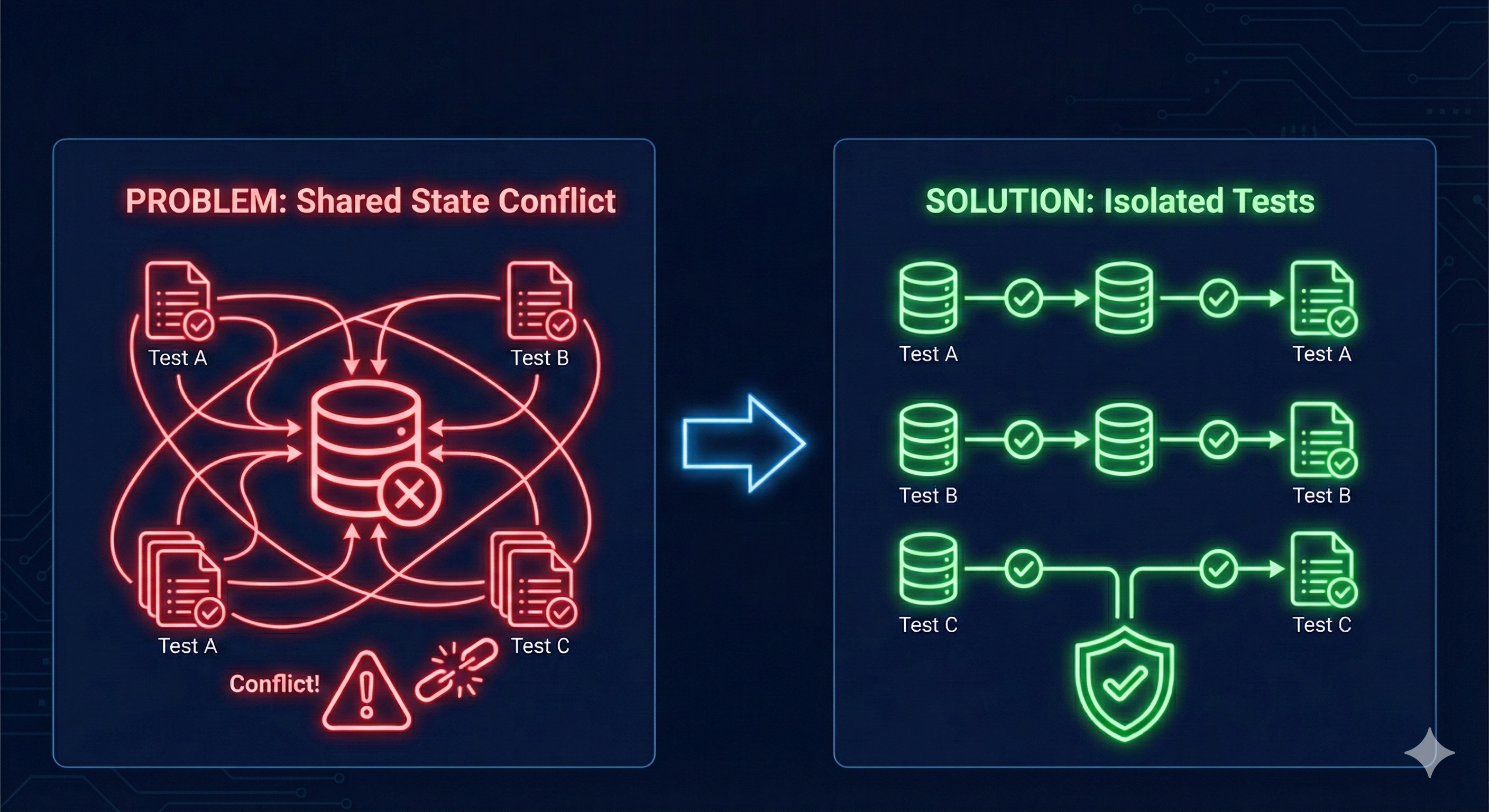

Idempotency: Tests That Don't Interfere

Test A creates a user. Test B expects that user doesn't exist. Test A runs first. Test B fails.

The solution: each test creates its own data and cleans up afterward. Use unique identifiers (timestamps, UUIDs) for test data, for example "testuser-1733317890123@example.com" instead of "testuser@example.com". This allows parallel test execution without conflicts.

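    import { test } from '@playwright/test';

    let testUser;

    test.beforeEach(async () => {
      // Create unique test data
      testUser = {
        email: `test-${Date.now()}@example.com`,
        password: 'TestPass123'
      };
    });

    test.afterEach(async ({ page }) => {
      // Clean up after test (relative URL assumes baseURL is set in the Playwright config)
      await page.request.delete(`/api/users/${encodeURIComponent(testUser.email)}`);
    });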
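The same create-then-always-clean-up pattern can be sketched in Python with a context manager. This is a minimal illustration, not a drop-in fixture: the in-memory `USERS` dictionary stands in for your application's user store, and `temporary_user` is a hypothetical helper name. It uses a UUID rather than a timestamp, since two parallel workers can generate the same millisecond timestamp.

```python
import uuid
from contextlib import contextmanager

# In-memory stand-in for the application's user store (an assumption for this sketch).
USERS: dict[str, dict] = {}


@contextmanager
def temporary_user(password: str = "TestPass123"):
    """Create a uniquely named user, hand it to the test, then always delete it."""
    email = f"test-{uuid.uuid4().hex}@example.com"
    USERS[email] = {"email": email, "password": password}
    try:
        yield USERS[email]
    finally:
        USERS.pop(email, None)  # cleanup runs even if the test body raises


with temporary_user() as first, temporary_user() as second:
    # Two tests running side by side never share an email address.
    assert first["email"] != second["email"]
    assert len(USERS) == 2

assert USERS == {}  # nothing leaks into the next test
```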

Test Data Management

Hard-coded test data makes tests brittle. Centralize it:

    export const TEST_USERS = {
      validUser: { email: 'valid@example.com', password: 'ValidPass123!' },
      adminUser: { email: 'admin@example.com', password: 'AdminPass123!', role: 'admin' }
    };

Or generate programmatically:

    function createTestUser(overrides = {}) {
      return {
        email: `test-${Date.now()}@example.com`,
        password: 'DefaultPass123!',
        ...overrides
      };
    }

Timing and Synchronization Issues

The browser renders asynchronously. Your test runs synchronously. This mismatch causes flaky tests.
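The usual remedy is to poll for a condition instead of assuming it already holds. The sketch below shows that idea in plain Python, independent of any framework. `wait_until` and `find_button` are hypothetical names, and the 0.3-second delay simulates a button that renders late. Real frameworks do something similar, with more care, behind their wait APIs.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0,
               poll_interval: float = 0.1) -> T:
    """Call `condition` repeatedly until it returns a truthy value or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Simulate a button that only becomes available 0.3 seconds after "page load".
ready_at = time.monotonic() + 0.3


def find_button():
    return "submit-button" if time.monotonic() >= ready_at else None


button = wait_until(find_button, timeout=2.0, poll_interval=0.05)
print(button)
```

The test proceeds as soon as the condition is true and fails with a clear timeout otherwise. A fixed sleep can do neither.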

Bad approach:

    # Selenium - BAD
    driver.get('https://example.com')
    button = driver.find_element(By.ID, 'submit')
    button.click()  # Fails if the element has not rendered yet

Better approach, explicit waits:

    # Selenium - BETTER
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC

    driver.get('https://example.com')
    button = WebDriverWait(driver, 10).until(
        EC.element_to_be_clickable((By.ID, 'submit'))
    )
    button.click()

Playwright handles this automatically:

    // Playwright - automatic waiting
    await page.goto('https://example.com');
    await page.click('#submit'); // Automatically waits for element to be ready

When automatic waiting isn't enough, wait for specific conditions:

// Wait for network request to complete

await page.waitForResponse(response =>

response.url().includes('/api/data') && response.status() === 200

);

// Wait for element state

await page.waitForSelector('[data-testid="results"]', { state: 'visible' });

// Wait for custom condition

await page.waitForFunction(() => {

return document.querySelectorAll('.item').length > 0;

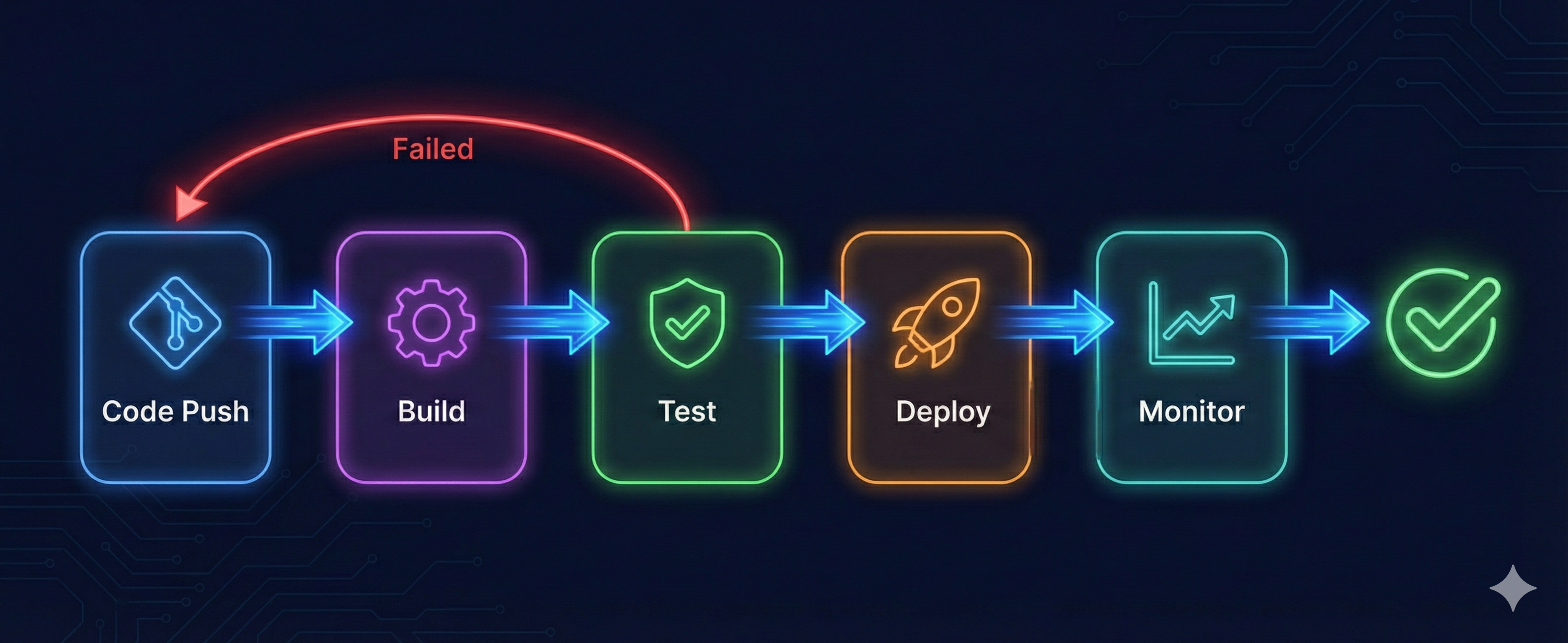

});Integrating Tests Into CI/CD Pipelines

Tests must run automatically in CI/CD. A typical pipeline runs unit tests first (fastest), then integration, then E2E (slowest).
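The ordering matters because a failure in a fast stage should stop the pipeline before the slow stages start. A minimal sketch of that fail-fast logic follows. The stage names and the lambda stand-ins are assumptions for illustration. In a real pipeline each stage would invoke your actual test command.

```python
from typing import Callable, Sequence

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: Sequence[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that actually ran.
    """
    executed = []
    for name, run in stages:
        executed.append(name)
        if not run():
            break  # a failed fast stage saves the cost of the slow ones
    return executed


stages = [
    ("unit", lambda: True),
    ("integration", lambda: False),  # simulated failure
    ("e2e", lambda: True),
]
executed = run_pipeline(stages)
print(executed)  # ['unit', 'integration']
```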

GitHub Actions example:

    name: E2E Tests
    on: [push, pull_request]

    jobs:
      test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - uses: actions/setup-node@v4
            with:
              node-version: lts/*
          - run: npm ci
          - run: npx playwright install --with-deps
          - run: npx playwright test
          - uses: actions/upload-artifact@v4
            if: always()
            with:
              name: playwright-report
              path: playwright-report/

Use if: always() to upload reports even when tests fail.

What We Didn't Cover: The Maintenance Problem

These frameworks let you write tests. The hard part is keeping them working.

According to our State of QA 2025 report, teams spend 30-40% of QA time maintaining test suites. Selectors break when UIs change. APIs evolve. Tests that passed last month fail this month.

The traditional solution is discipline: use stable selectors, write atomic tests, maintain test data carefully. This helps. It doesn't solve the problem.

There's a different approach. Instead of scripts that break when your app changes, use systems that adapt. We'll explore this in Chapter 8 when we discuss AI-powered testing. For now, understand that choosing a framework is the easy part. Making automation sustainable is the real challenge.

For complete working examples of all frameworks discussed in this chapter, visit our GitHub repository.

Course Navigation

Chapter 4 of 8: Test Automation Frameworks Guide ✓
