Test Automation with Python and JavaScript: Complete Setup Guide

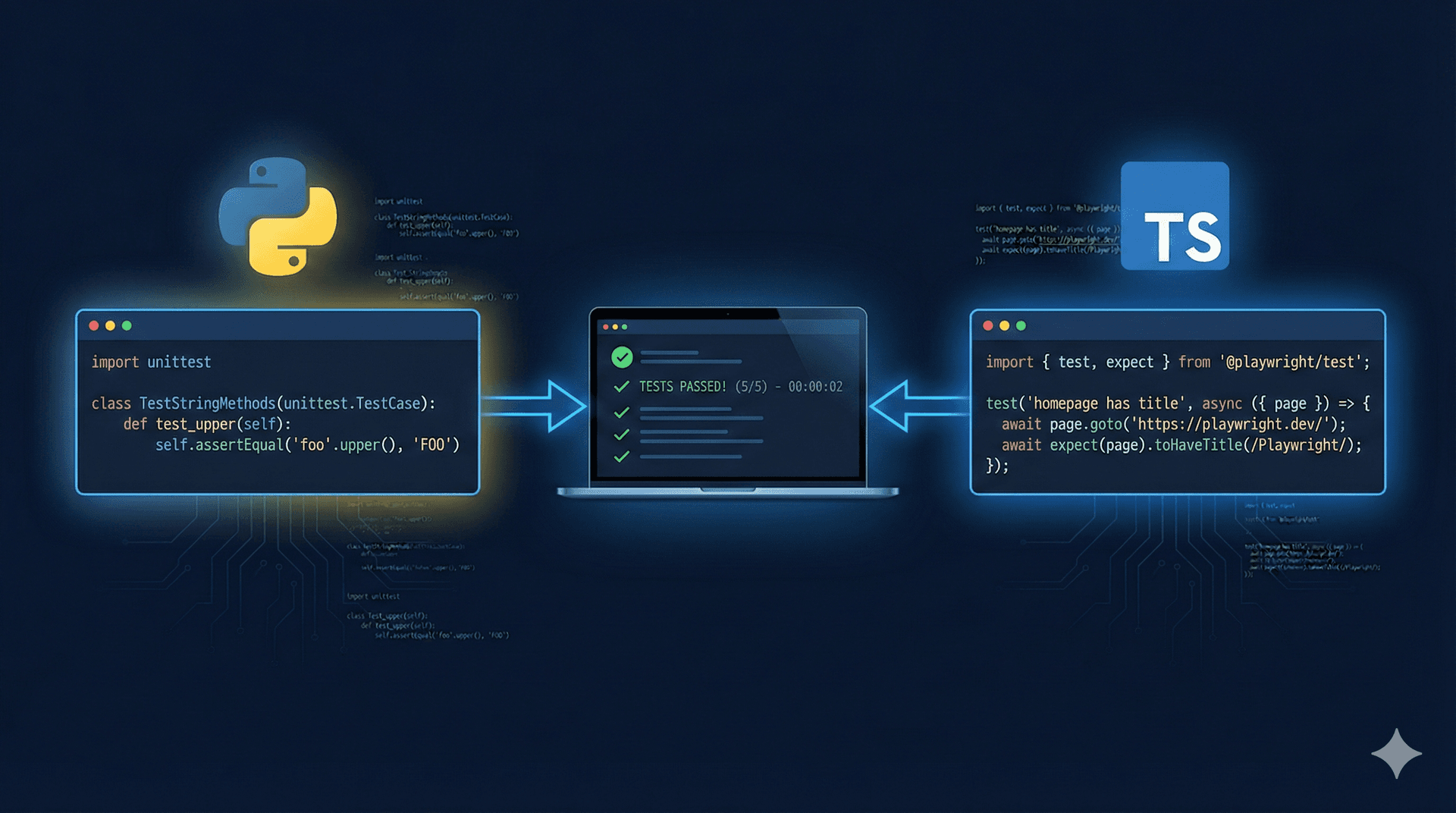

Quick summary: This chapter provides complete setup guides for Python + pytest + Playwright and TypeScript + Playwright test automation. Python has simpler syntax and is faster to start (ideal for QA teams), while TypeScript offers better IDE support and type safety (preferred by JavaScript teams). Both include CI/CD configuration with GitHub Actions, parallel execution, cross-browser testing, and debugging tools like trace viewer and HTML reports.

Introduction

You've learned the concepts. You understand what tests are, why automation matters, and which tools exist. Now let's build something real.

This chapter covers complete test automation with Python and JavaScript, giving you hands-on implementation guides for Playwright. I'm going to show you how to set up test automation from scratch. Not theory: actual working projects you can run today. We'll build the same login test in both Python and TypeScript. You'll see the differences, the trade-offs, and which one fits your team.

By the end, you'll have complete projects running locally and in CI/CD.

You can follow along with the code examples in this GitHub repository.

Choosing Your Stack: Python vs TypeScript

You need to pick a language. Both work brilliantly with Playwright. The choice comes down to your team.

Python has simpler syntax. You write less code to achieve the same result. If your QA team isn't deeply technical or comes from a manual testing background, Python removes friction. The learning curve is gentler.

TypeScript offers type safety. Your IDE catches errors before you run tests. If your team already writes JavaScript for the product, TypeScript keeps everyone in the same ecosystem. No context switching.

We'll build both. You decide which fits.

Python Test Automation Setup

Environment Setup

First, create a virtual environment. This isolates your dependencies from other Python projects.

```bash
# Create project directory
mkdir test-automation-python
cd test-automation-python

# Create virtual environment
python -m venv venv

# Activate it (Mac/Linux)
source venv/bin/activate

# Activate it (Windows)
venv\Scripts\activate

# Install dependencies (pytest-xdist provides the -n option used in pytest.ini)
pip install pytest playwright pytest-playwright pytest-xdist

# Install browser binaries
playwright install
```

Your virtual environment keeps projects separate. When it's active, pip install only affects this project.
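To confirm the environment is actually active before installing anything, you can ask Python itself. This is a minimal standard-library sketch: inside a venv, sys.prefix points at the venv directory while sys.base_prefix still points at the base installation. The function name in_virtualenv is our own, not part of any tool.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when running inside a venv (sys.prefix differs from sys.base_prefix)."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    # Run this with the same python you'll use for pytest.
    print("venv active:", in_virtualenv())
    print("interpreter:", sys.executable)
```

If it prints False after you ran the activate command, the activation didn't take effect in that shell.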

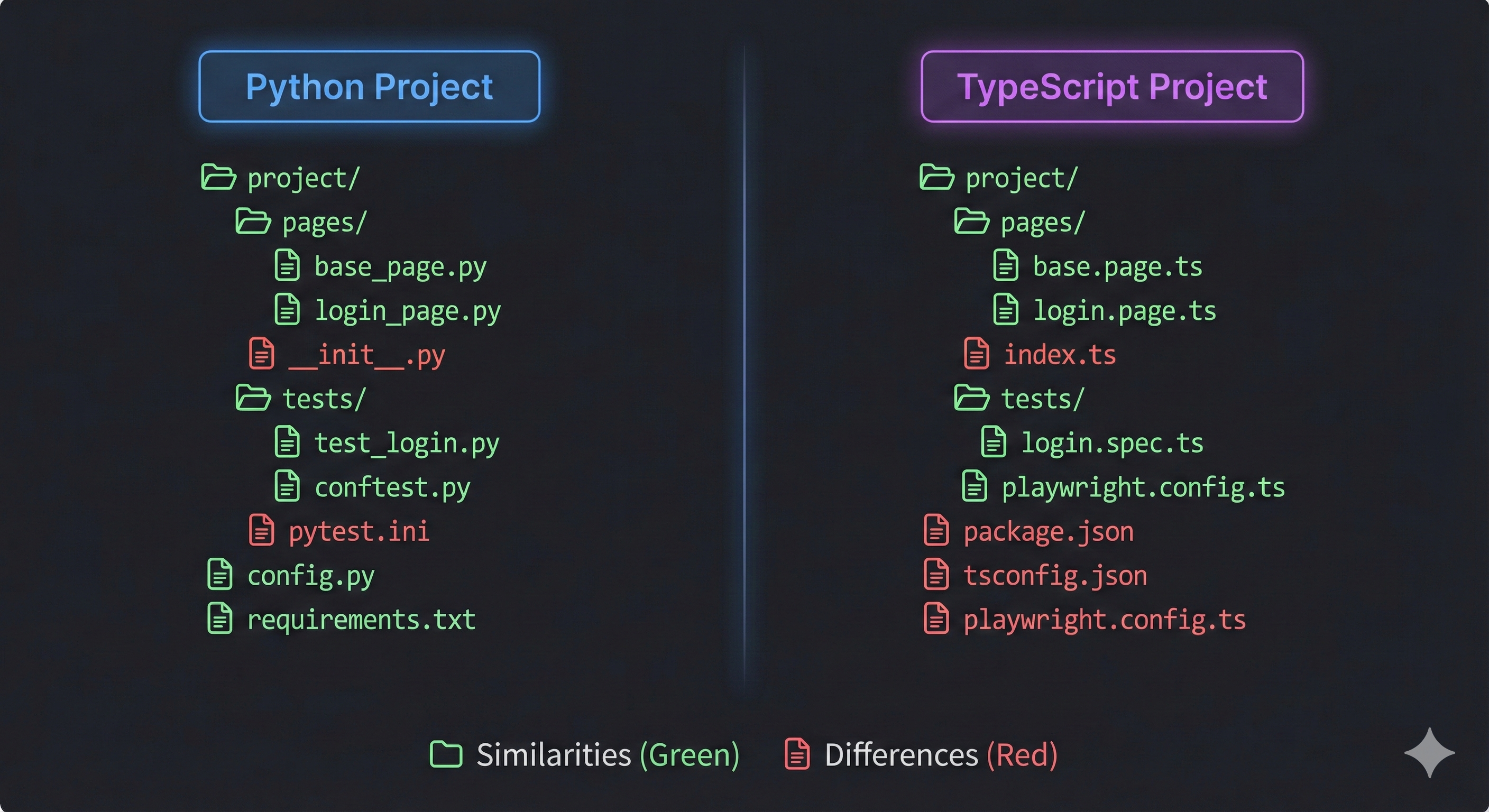

Project Structure

Create this structure:

```text
test-automation-python/
├── tests/
│   ├── __init__.py
│   └── test_login.py
├── pytest.ini
├── requirements.txt
└── .github/
    └── workflows/
        └── tests.yml
```

The tests/ directory holds your test files. By default, pytest collects files named test_*.py (or *_test.py) and, inside them, functions whose names start with test.
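pytest's default collection rules are simple enough to sketch. The helpers below are a simplified stand-in, not pytest's own implementation. They assume the defaults python_files = test_*.py *_test.py and python_functions = test, both of which you can change in pytest.ini.

```python
from fnmatch import fnmatch

# pytest's default python_files patterns (configurable in pytest.ini)
DEFAULT_FILE_PATTERNS = ("test_*.py", "*_test.py")


def is_test_file(filename: str) -> bool:
    """Would pytest collect this file under the default patterns?"""
    return any(fnmatch(filename, pattern) for pattern in DEFAULT_FILE_PATTERNS)


def is_test_function(name: str) -> bool:
    """The default python_functions prefix is 'test'."""
    return name.startswith("test")


for name in ["test_login.py", "__init__.py", "conftest.py", "login_test.py"]:
    print(name, is_test_file(name))
```

This is why tests/__init__.py and a conftest.py are never collected as test modules, while test_login.py is.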

Configuration Files

Create pytest.ini:

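```ini
[pytest]
# Run tests in parallel (pytest-xdist) and keep Playwright traces for failed tests.
# Browsers run headless by default; pass --headed on the command line to watch them.
addopts = -n auto --tracing=retain-on-failure

# Where to find tests
testpaths = tests

# Markers for organizing tests
markers =
    smoke: Quick smoke tests
    regression: Full regression suite
```

Create requirements.txt: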

```text
pytest==8.0.0
playwright==1.40.0
pytest-playwright==0.4.4
pytest-xdist==3.5.0
```

Now anyone can install your exact dependencies:

```bash
pip install -r requirements.txt
```

Writing Your First Test

Create tests/test_login.py:

```python
import pytest
from playwright.sync_api import Page, expect


def test_successful_login(page: Page):
    """Test user can log in with valid credentials"""
    # Navigate to login page
    page.goto("https://bank-demo-web-app.vercel.app/")

    # Fill in credentials
    page.get_by_label("Username").fill("testuser")
    page.get_by_label("Password").fill("password123")

    # Click login button
    page.get_by_role("button", name="Sign in").click()

    # Verify successful login
    expect(page.get_by_text("Welcome back")).to_be_visible()
    expect(page).to_have_url("https://bank-demo-web-app.vercel.app/dashboard")


@pytest.mark.smoke
def test_login_validation(page: Page):
    """Test login shows an error when credentials are missing"""
    page.goto("https://bank-demo-web-app.vercel.app/")

    # Try logging in without credentials
    page.get_by_role("button", name="Sign in").click()

    # Verify error message appears
    expect(page.get_by_text("Username is required")).to_be_visible()
```

Run it:

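```bash
pytest tests/test_login.py -v
```

You should see both tests pass. pytest-playwright appends the browser name to each test ID, and with pytest-xdist enabled each line also carries a worker ID, so the exact layout varies:

```text
tests/test_login.py::test_successful_login[chromium] PASSED
tests/test_login.py::test_login_validation[chromium] PASSED
```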

What Just Happened?

The page parameter is a pytest fixture provided by pytest-playwright. It launches the browser (once per test session), gives each test a fresh browser context and page, and cleans up afterwards.
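Fixtures like page follow a setup, yield, teardown pattern. Everything before the yield runs before the test, and everything after it runs once the test finishes, pass or fail. The sketch below mimics that lifecycle with a plain context manager so you can see the order of events. fake_page and run_test are hypothetical stand-ins: they launch no browser, and pytest's real fixture machinery does considerably more.

```python
from contextlib import contextmanager


@contextmanager
def fake_page(log):
    """Hypothetical stand-in for a yield-style fixture such as pytest-playwright's page."""
    log.append("setup: open page")
    try:
        yield "page"  # the test receives this value
    finally:
        log.append("teardown: close page")  # runs even if the test raises


def run_test(test_fn, log):
    """Hand the fixture value to the test, the way pytest injects `page`."""
    with fake_page(log) as page:
        test_fn(page)


def failing_test(page):
    raise AssertionError("element not found")


log = []
try:
    run_test(failing_test, log)
except AssertionError:
    pass
print(log)  # ['setup: open page', 'teardown: close page']
```

Even though the test failed, the teardown still ran. That guarantee is what lets the real fixture close pages reliably.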

The test uses Playwright's locator strategy. get_by_label finds inputs by their label text. get_by_role finds elements by their ARIA role. These locators are resilient: they don't break when you change CSS classes.

The @pytest.mark.smoke decorator lets you run subsets:

```bash
# Run only smoke tests
pytest -m smoke

# Run everything except smoke tests
pytest -m "not smoke"
```

TypeScript Test Automation Setup

Environment Setup

TypeScript requires Node.js. Install it from nodejs.org if you haven't already.

```bash
# Create project directory
mkdir test-automation-typescript
cd test-automation-typescript

# Initialize npm project
npm init -y

# Install Playwright
npm init playwright@latest
```

The Playwright installer asks questions:

- Choose TypeScript
- Use tests for the test directory
- Add a GitHub Actions workflow (we'll customize it later)

This creates everything you need.

Project Structure

```text
test-automation-typescript/
├── tests/
│   └── login.spec.ts
├── playwright.config.ts
├── package.json
├── tsconfig.json
└── .github/
    └── workflows/
        └── playwright.yml
```

Configuration Files

The installer generates playwright.config.ts. Edit it so the key settings look like this:

```ts
import { defineConfig, devices } from '@playwright/test';

export default defineConfig({
  testDir: './tests',
  fullyParallel: true,
  forbidOnly: !!process.env.CI,
  retries: process.env.CI ? 2 : 0,
  workers: process.env.CI ? 1 : undefined,
  reporter: 'html',
  use: {
    baseURL: 'https://bank-demo-web-app.vercel.app',
    trace: 'retain-on-failure',
    screenshot: 'only-on-failure',
  },
  projects: [
    {
      name: 'chromium',
      use: { ...devices['Desktop Chrome'] },
    },
    {
      name: 'firefox',
      use: { ...devices['Desktop Firefox'] },
    },
    {
      name: 'webkit',
      use: { ...devices['Desktop Safari'] },
    },
  ],
});
```

This runs every test on Chromium, Firefox, and WebKit (the engine behind Safari). Locally, tests run in parallel automatically. In CI, workers: 1 runs them one at a time, with up to two retries per failing test.
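The process.env.CI ternaries switch behavior between your laptop and the pipeline (GitHub Actions sets CI=true). Below is a minimal Python sketch of the same decision logic, for example if you want matching behavior in the Python project. resolve_run_settings is a hypothetical helper. The local worker count assumes Playwright's documented default of half the logical CPU cores.

```python
import os


def resolve_run_settings(env=None, cpu_count=None):
    """Mirror the CI-dependent settings from playwright.config.ts (hypothetical helper)."""
    env = os.environ if env is None else env
    cpu_count = cpu_count or os.cpu_count() or 1
    on_ci = bool(env.get("CI"))  # like !!process.env.CI: unset or empty means local
    return {
        "forbid_only": on_ci,          # fail the run if a test.only slipped in
        "retries": 2 if on_ci else 0,  # retry failures only in CI
        # workers: undefined locally means Playwright's default, about half the logical cores
        "workers": 1 if on_ci else max(1, cpu_count // 2),
    }


print(resolve_run_settings({"CI": "true"}, cpu_count=8))
# {'forbid_only': True, 'retries': 2, 'workers': 1}
print(resolve_run_settings({}, cpu_count=8))
# {'forbid_only': False, 'retries': 0, 'workers': 4}
```

Passing env and cpu_count explicitly keeps the function easy to test. Calling it with no arguments reads the real environment.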

Writing Your First Test

Create tests/login.spec.ts:

```ts
import { test, expect } from '@playwright/test';

test('successful login', async ({ page }) => {
  // Navigate to login page
  await page.goto('/login');

  // Fill in credentials
  await page.getByLabel('Username').fill('testuser');
  await page.getByLabel('Password').fill('password123');

  // Click login button
  await page.getByRole('button', { name: 'Sign in' }).click();

  // Verify successful login
  await expect(page.getByText('Welcome back')).toBeVisible();
  await expect(page).toHaveURL('/dashboard');
});

test('login validation', async ({ page }) => {
  await page.goto('/login');

  // Try logging in without credentials
  await page.getByRole('button', { name: 'Sign in' }).click();

  // Verify error message appears
  await expect(page.getByText('Username is required')).toBeVisible();
});
```

Run it:

```bash
npx playwright test
```

You'll see tests run across all three browsers:

```text
Running 6 tests using 3 workers
  6 passed (12.3s)

To open last HTML report run:

  npx playwright show-report
```
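Why 6 tests when you wrote 2? Each test runs once per project, so the run is the cross product of tests and browsers. Here is a quick sketch of that arithmetic. The names are illustrative.

```python
from itertools import product

tests = ["successful login", "login validation"]
projects = ["chromium", "firefox", "webkit"]

# One run per (project, test) pair, mirroring how Playwright multiplies tests by projects.
runs = list(product(projects, tests))
print(len(runs))  # 6
for project, test in runs:
    print(f"[{project}] {test}")
```

Adding a fourth project, such as a mobile viewport, would turn those 2 tests into 8 runs.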

The TypeScript Advantage

TypeScript caught an error I almost made. I typed page.getByLable (swapping the 'l' and the 'e'). My IDE showed a red squiggle immediately. Plain Python wouldn't catch this until the line runs, unless you add a type checker such as mypy or Pyright.

The trade-off? More setup: a Node.js toolchain, a tsconfig.json, and type definitions to manage. (Playwright Test transpiles TypeScript itself, so there's no separate compile step.) Python just runs.
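Here is a small Python sketch of the runtime-only failure. A hypothetical FakePage class stands in for Playwright's Page. Defining a function that contains the typo raises nothing. Python only complains when that line executes. A type checker such as mypy, run over this file, would flag the misspelled attribute before any test runs.

```python
class FakePage:
    """Hypothetical stand-in for Playwright's Page with a single method."""

    def get_by_label(self, text: str) -> str:
        return f"locator(label={text!r})"


def fill_username(page: FakePage) -> str:
    # Typo on purpose: defining this function raises nothing.
    return page.get_by_lable("Username")


try:
    fill_username(FakePage())
except AttributeError as err:
    # Only now, at call time, does Python notice the typo.
    print("runtime error:", err)
```

In a real suite, the equivalent failure would surface only when the test reaches that line, possibly minutes into a CI run.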

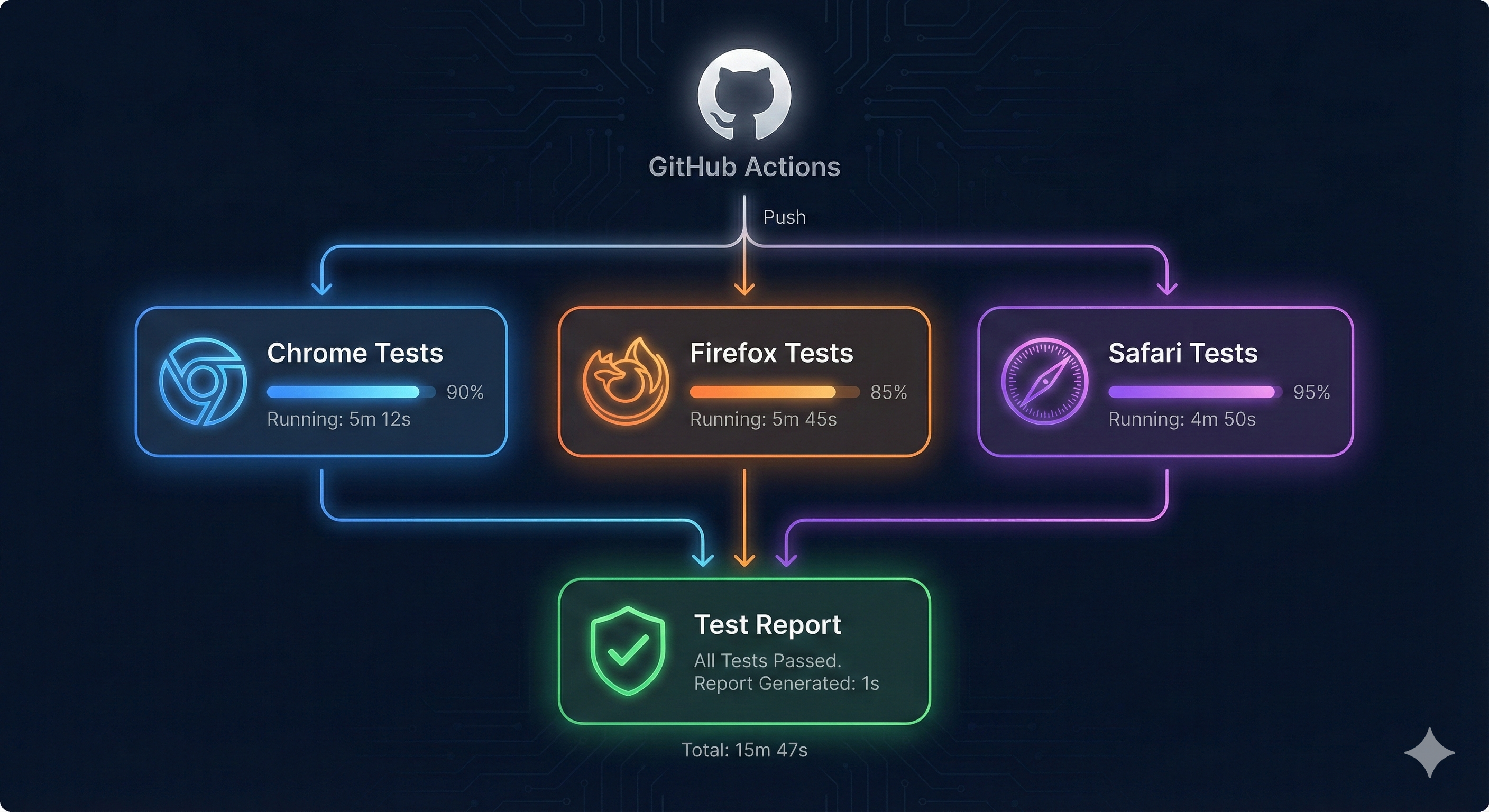

Running Tests in CI/CD

Tests that only run on your laptop only protect you when someone remembers to run them. CI/CD runs them on every code change.

GitHub Actions Configuration

Each project needs a workflow file in .github/workflows/: tests.yml for Python, and the playwright.yml the installer generated for TypeScript.

For Python:

```yaml
name: Playwright Tests

on:
  push:
    branches: [ main, master ]
  pull_request:
    branches: [ main, master ]

jobs:
  test:
    timeout-minutes: 60
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.11'
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt
      - name: Install Playwright browsers
        run: playwright install --with-deps
      - name: Run tests
        run: pytest tests/
      - uses: actions/upload-artifact@v4
        if: always()
        with:
          name: playwright-report
          path: test-results/
          retention-days: 30
```

For TypeScript:

```yaml
name: Playwright Tests

on:
  push:
    branches: [ main, master ]
  pull_request:
    branches: [ main, master ]

jobs:
  test:
    timeout-minutes: 60
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: lts/*
      - name: Install dependencies
        run: npm ci
      - name: Install Playwright Browsers
        run: npx playwright install --with-deps
      - name: Run Playwright tests
        run: npx playwright test
      - uses: actions/upload-artifact@v4
        if: always()
        with:
          name: playwright-report
          path: playwright-report/
          retention-days: 30
```

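Push your code. GitHub Actions runs your tests automatically. If they fail, the check on your pull request fails, and with a branch protection rule that requires this check, the pull request can't be merged.

This keeps the regressions your tests catch from reaching production.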

Test Reports and Debugging

When tests fail, you need context. Screenshots, videos, traces.

HTML Reports

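Playwright Test (TypeScript) generates HTML reports automatically. In Python, the pytest-html plugin fills that role.

Python: Install the plugin (and add pytest-html to requirements.txt):

```bash
pip install pytest-html
```

Then add the report options to the existing addopts line in pytest.ini rather than creating a second one:

```ini
[pytest]
addopts = -n auto --tracing=retain-on-failure --html=report.html --self-contained-html
```

TypeScript: Already configured. Just run:

```bash
npx playwright show-report
```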

The Playwright Test HTML report shows:

- Which tests passed or failed

- Execution time per test

- Screenshots at failure point

- Full traces you can replay

Click a failed test. You see exactly what the browser saw when it failed.

Trace Viewer

Traces are time-travel debugging. Playwright records every action, along with DOM snapshots, screenshots, and network requests.

When a test fails in CI/CD, download the trace artifact and open it:

```bash
# TypeScript
npx playwright show-trace trace.zip

# Python
playwright show-trace trace.zip
```

You can step through the test action by action. See what the page looked like. Check network requests. Inspect the DOM at any point.

This is how you debug flaky tests.
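In the Python setup, --tracing=retain-on-failure keeps traces under pytest-playwright's output directory (test-results/ by default), in one subfolder per failed test. After you download and unzip the CI artifact, a small standard-library sketch like this can list the traces to open. find_traces is a hypothetical helper, and the trace.zip file name is an assumption based on pytest-playwright's defaults.

```python
from pathlib import Path


def find_traces(results_dir: str = "test-results") -> list[Path]:
    """List every trace.zip below results_dir (hypothetical helper)."""
    root = Path(results_dir)
    if not root.is_dir():
        return []  # no failures recorded, or the artifact wasn't unzipped here
    return sorted(root.rglob("trace.zip"))


if __name__ == "__main__":
    for trace in find_traces():
        # Open each one with: playwright show-trace <path>
        print(trace)
```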

Parallel Execution

Running tests one at a time is slow. Run them in parallel.

Python: pytest-xdist handles this:

```bash
# Auto-detect CPUs and start one worker per CPU
pytest -n auto

# Use a specific number of workers
pytest -n 4
```

TypeScript: Playwright runs test files in parallel by default, and fullyParallel: true also parallelizes the tests inside each file:

```ts
// In playwright.config.ts
export default defineConfig({
  // undefined = Playwright's default locally (half the logical CPU cores); 1 worker in CI
  workers: process.env.CI ? 1 : undefined,
  fullyParallel: true,
});
```

My laptop has 8 cores. Without parallelization, 24 tests take 3 minutes. With parallelization? 30 seconds.
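Those numbers are close to what simple arithmetic predicts. This is a back-of-the-envelope sketch. It assumes 24 tests of equal length (180 s / 24 = 7.5 s each), 8 workers, and greedy scheduling onto the least-busy worker, which simplifies how pytest-xdist and Playwright actually distribute work.

```python
import heapq


def estimate_wall_clock(durations, workers):
    """Greedy schedule: hand each test (longest first) to the least-busy worker."""
    loads = [0.0] * workers  # total busy time per worker
    heapq.heapify(loads)
    for duration in sorted(durations, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + duration)
    return max(loads)  # the run ends when the busiest worker finishes


tests = [7.5] * 24  # 24 tests, 7.5 s each
print(sum(tests))                     # 180.0 seconds one at a time
print(estimate_wall_clock(tests, 8))  # 22.5 seconds on 8 workers
```

The gap between the ideal 22.5 s and the observed 30 s is plausibly worker and browser startup overhead. A single slow test also sets a floor that no number of workers can beat.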

The catch: tests must be independent. If one test depends on another's data, parallel execution breaks them. Write tests that set up their own data.
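One common way to keep tests independent is to give every test its own freshly generated data instead of sharing a fixed account like testuser. This is a minimal sketch. make_test_user is hypothetical. In a real suite you would typically call something like it from a fixture that also creates the user through your app's API or database.

```python
import uuid


def make_test_user(prefix: str = "user") -> dict:
    """Generate credentials that no other test (or parallel worker) will reuse."""
    suffix = uuid.uuid4().hex[:12]  # random, so parallel workers don't collide
    return {"username": f"{prefix}-{suffix}", "password": f"pw-{uuid.uuid4().hex}"}


first, second = make_test_user(), make_test_user()
print(first["username"], second["username"])
```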

Cross-Browser Testing

Your users don't all use Chrome. Test Firefox and Safari too.

Python: Add the browser options to the existing addopts line in pytest.ini:

```ini
[pytest]
addopts = -n auto --tracing=retain-on-failure --browser chromium --browser firefox --browser webkit
```

TypeScript: Already configured in playwright.config.ts. The projects array defines browsers:

```ts
projects: [
  { name: 'chromium', use: { ...devices['Desktop Chrome'] } },
  { name: 'firefox', use: { ...devices['Desktop Firefox'] } },
  { name: 'webkit', use: { ...devices['Desktop Safari'] } },
],
```

Run tests:

```bash
# Python
pytest tests/

# TypeScript
npx playwright test
```

Playwright runs your tests on all three browsers. If a test passes in Chromium but fails in Firefox, you've most likely found a real browser compatibility bug.

Common Errors and Fixes

"Timeout waiting for element"

Your test looks for an element that never appears. Either the selector is wrong or the page is slow.
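A timeout error means a wait gave up. Playwright's auto-waiting is far more involved, but the core idea is polling a condition until a deadline. This is a standard-library sketch of that idea. wait_until is a hypothetical helper, not a Playwright API.

```python
import time


def wait_until(condition, timeout: float = 5.0, interval: float = 0.1) -> None:
    """Poll condition() until it returns True or the timeout (seconds) expires."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Simulate an element that "appears" on the third check.
checks = []
wait_until(lambda: checks.append(1) or len(checks) >= 3, timeout=1.0, interval=0.01)
print(len(checks))  # 3
```

This is also why a longer timeout helps with a slow page but not with a wrong selector: a condition that can never become true just fails later.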

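Fix: Use better selectors, or give slow steps a longer timeout. For example, wait up to 10 seconds for a loading indicator to disappear:

```python
# Python
page.get_by_text("Loading...").wait_for(state="hidden", timeout=10000)
```

```ts
// TypeScript
await page.getByText('Loading...').waitFor({ state: 'hidden', timeout: 10000 });
```

"Browser executable not found"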

You forgot to install browser binaries.

Fix:

```bash
# Python
playwright install

# TypeScript
npx playwright install
```

"Tests pass locally but fail in CI"

Usually timing issues. CI is slower than your laptop.
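Retries can hide these timing problems. With retries: 2 in the TypeScript config, a test that fails and then passes on a retry is reported as flaky rather than failed. Here is a small sketch of that bookkeeping. run_with_retries is hypothetical and simplifies what Playwright Test does.

```python
def run_with_retries(test_fn, retries: int):
    """Return (outcome, attempts); outcome is 'passed', 'flaky' or 'failed'."""
    for attempt in range(1, retries + 2):  # first run plus `retries` retries
        try:
            test_fn()
        except AssertionError:
            continue  # failed this attempt; try again if retries remain
        return ("passed" if attempt == 1 else "flaky"), attempt
    return "failed", retries + 1


calls = []


def sometimes_slow():
    calls.append(1)
    assert len(calls) >= 2, "element not visible yet"  # fails on the first attempt only


print(run_with_retries(sometimes_slow, retries=2))  # ('flaky', 2)
```

A flaky result is a signal to fix the wait, not a pass to ignore.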

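Fix: Wait for the state your test actually depends on. Playwright's web-first assertions retry until they pass or time out. Waiting for network idle also works, but the Playwright docs discourage it for testing:

```python
# Prefer: an assertion that auto-waits for the element
expect(page.get_by_text("Welcome back")).to_be_visible()

# Last resort: wait for the network to go idle
page.wait_for_load_state("networkidle")
```

Or increase the global per-test timeout in CI:

```ts
// playwright.config.ts
export default defineConfig({
  timeout: process.env.CI ? 60000 : 30000,
});
```

Where to Go From Here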

You have working test automation. Tests run locally and in CI/CD. You can run them in parallel across multiple browsers.

For complete starter projects with more examples, see our GitHub repository (Python and TypeScript templates ready to clone).

But there's still friction. You write tests manually. When your UI changes, tests break. You spend time maintaining selectors and fixing flaky tests.

Chapter 8 explores how AI changes this. Autonomous testing generates, runs, and maintains tests without human intervention. You're about to see how teams ship faster without hiring dedicated QA engineers.

Course Navigation

Chapter 7 of 8: Test Automation with Python and JavaScript ✓

Next Chapter →

Chapter 8: AI-Powered Software Testing with Autonoma

See how AI transforms testing. Learn about self-healing tests, natural language test creation, and how companies ship 10x faster with autonomous testing that eliminates maintenance.

← Previous Chapter

Chapter 6: How to Reduce Test Flakiness - Fix unreliable tests