How to Reduce Test Flakiness: Best Practices and Solutions

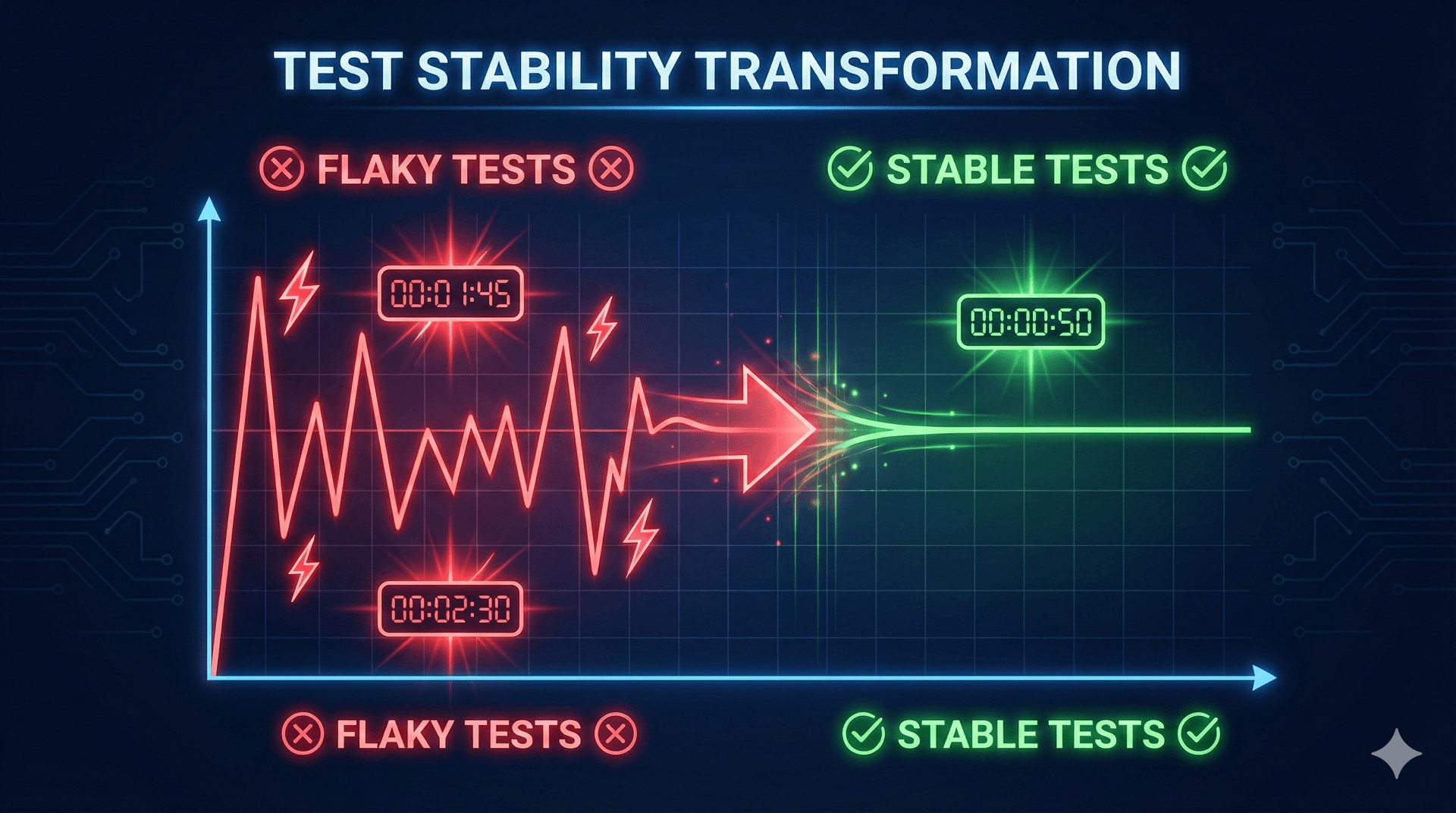

Quick summary: Flaky tests pass or fail unpredictably without code changes. Root causes include timing issues, poor selectors, external dependencies, and environment instability (90% of flakiness). Use dynamic waits instead of fixed sleeps, stable test IDs instead of brittle CSS selectors, and ensure each test sets up and tears down its own data without depending on other tests.

The "It Passed on My Machine" Problem

The test passed on your machine. You committed the code. CI failed. You re-ran it. It passed. You merged. Production deployment failed on the same test. You re-ran it again. It passed.

This is test flakiness, the silent killer of automation credibility.

I've watched engineering teams lose faith in their entire test suite because 5% of tests fail randomly. "Just re-run it" becomes the team's default response. When tests can't be trusted, they become noise instead of signal. Bugs slip through because nobody believes the failures anymore.

The worst part? Flaky tests aren't always obvious. They pass 95% of the time: often enough that you think they're reliable, yet they fail just often enough to wreck your deployment pipeline when you're on a deadline.

What Is Test Flakiness?

Test flakiness happens when a test produces inconsistent results without any changes to the code under test. The same test, the same code, different outcome.

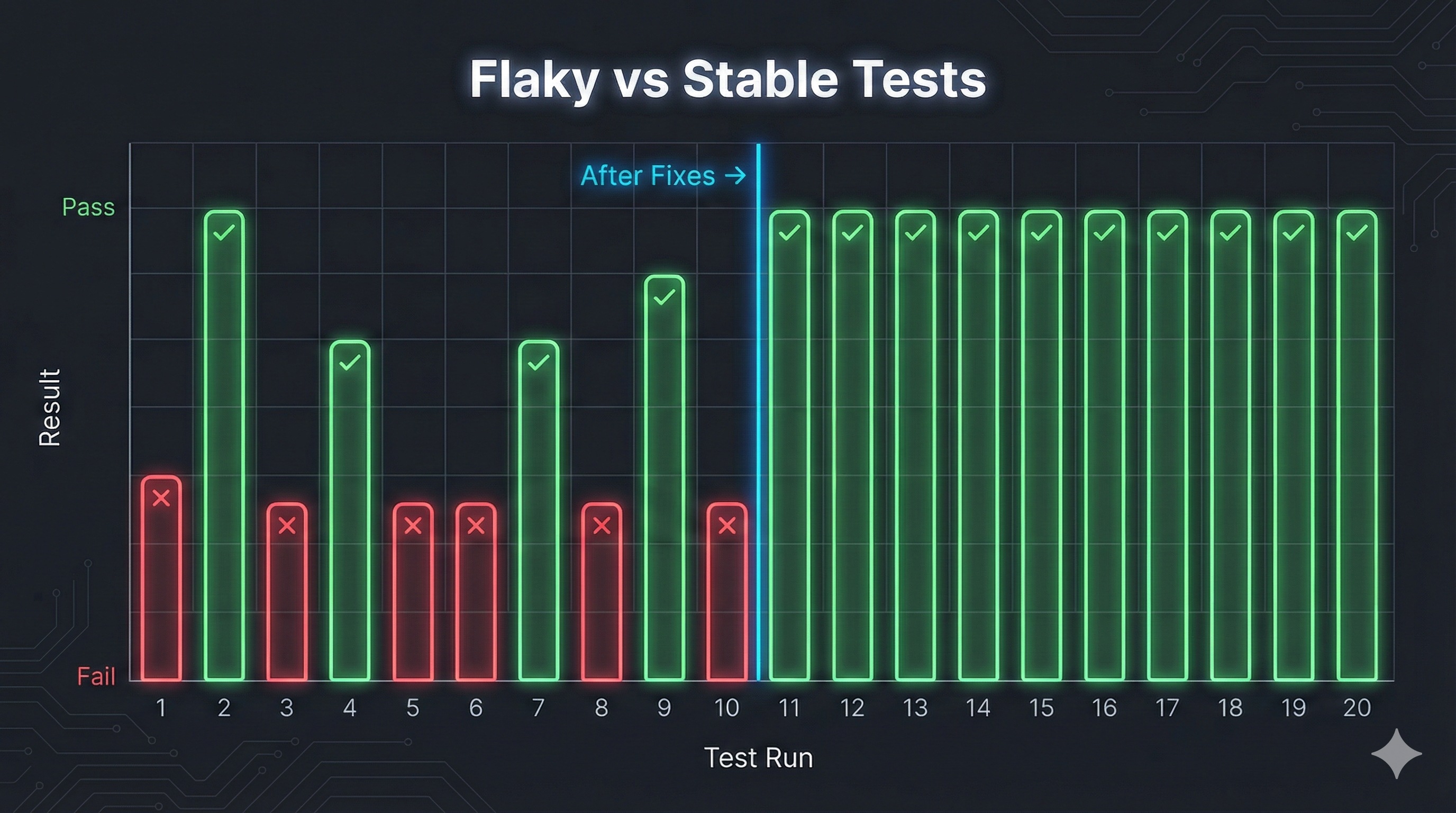

A flaky test might pass 18 out of 20 times. Those two failures aren't random; they're symptoms of an underlying problem. The test is racing against something: a loading spinner, a network request, a database query, an animation that hasn't finished.

According to our State of QA 2025 report, test maintenance, including fighting flakiness, consumes 40% of QA team time. That's hundreds of hours per year spent debugging tests instead of finding bugs.

The cost isn't just developer time. It's lost confidence. When developers stop trusting tests, they stop writing them.

Root Causes: Where Flakiness Comes From

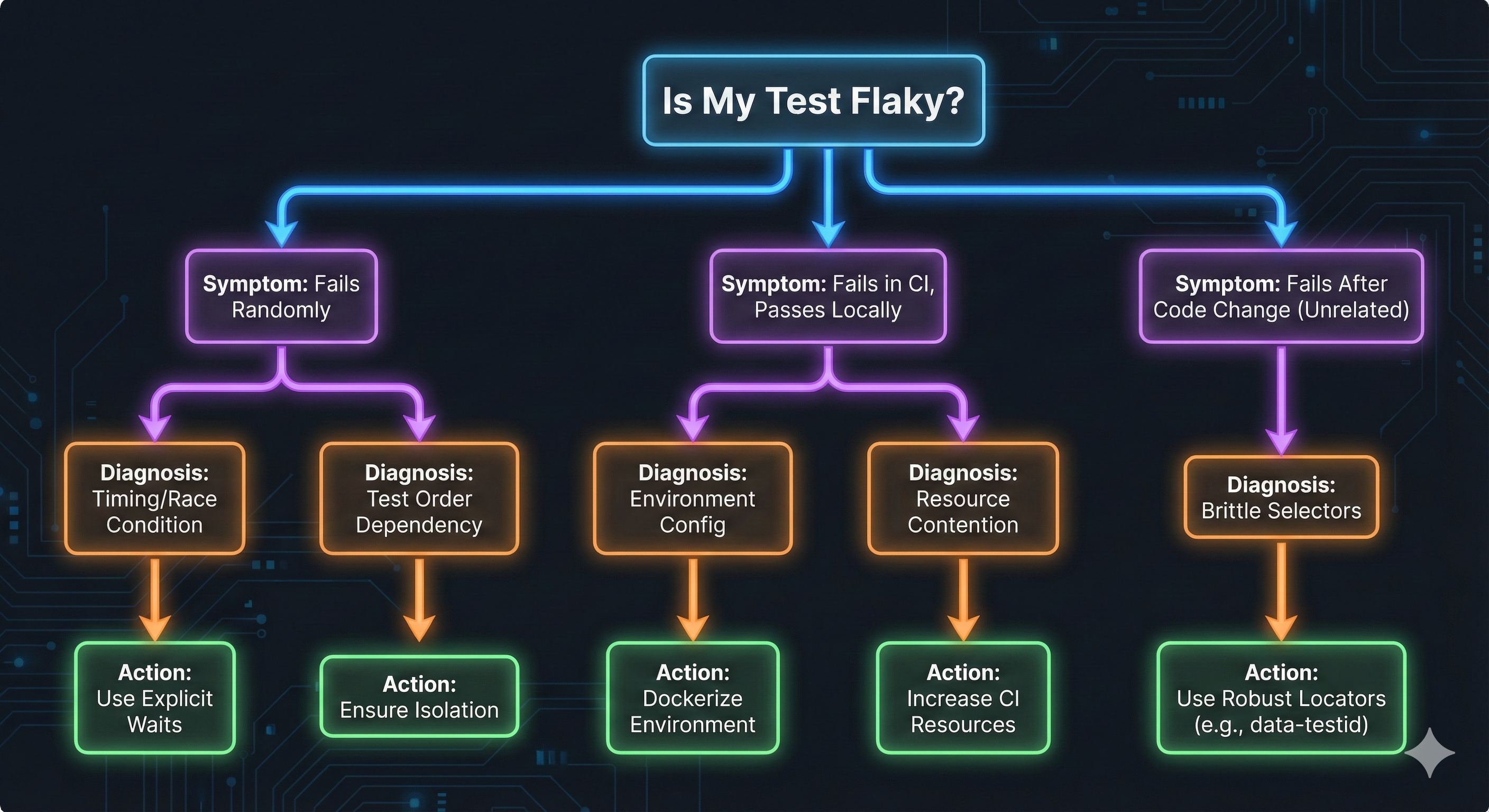

Flaky tests don't appear randomly. They emerge from predictable patterns.

Timing Issues and Race Conditions

Timing problems are the most common culprit. Your test clicks a button before the JavaScript has fully loaded. It checks for text before the API response has rendered. It interacts with an element before the animation completes.

Race conditions happen when tests assume things happen instantly. They don't. Networks have latency. Databases have query time. Browsers have render cycles.

Dynamic Content and Loading States

Modern web applications don't load all at once. They show spinners, skeleton screens, loading states, and progressive rendering. Your test needs to know when the actual content has loaded, not when the loading spinner appeared.

External Dependencies

Tests that call real APIs, connect to databases, or depend on third-party services inherit those systems' instability. The API times out occasionally. The database is under load. The third-party service rate limits you. Your test fails.
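One common way to keep an external service's instability out of a test is to hand the code under test a stub instead of the real client. The sketch below uses Python's standard `unittest.mock`; the `greeting` function and the `fetch_user` parameter are hypothetical names invented for illustration, not part of any real API.

```python
from unittest.mock import Mock


def greeting(user_id, fetch_user):
    """Hypothetical code under test: builds a greeting from whatever fetch_user returns."""
    user = fetch_user(user_id)
    return f"Hello, {user['name']}!"


def test_greeting_uses_stubbed_api():
    # The stub answers instantly and identically on every run,
    # so timeouts and rate limits in the real service cannot fail this test.
    fake_fetch = Mock(return_value={"name": "John Doe"})

    assert greeting(42, fetch_user=fake_fetch) == "Hello, John Doe!"
    fake_fetch.assert_called_once_with(42)
```

A stub like this only covers the logic around the call. A smaller set of tests against the real service is still needed to check the integration itself.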

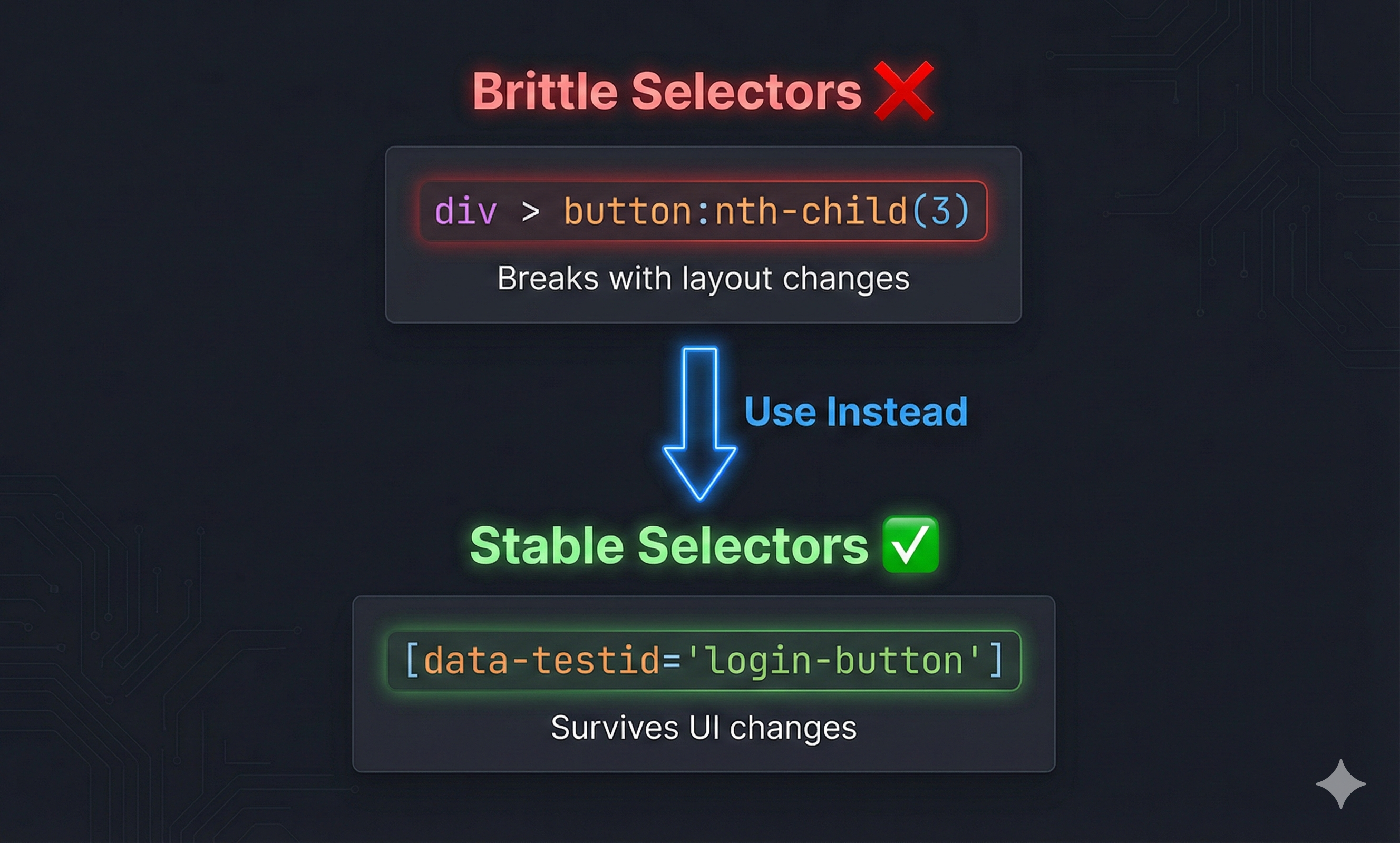

Poor Selectors

CSS selectors break. You target div > button:nth-child(3) and it works perfectly, until someone adds a button. Or changes the layout. Or uses a different CSS framework. The selector stops matching and the test fails.
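The failure mode can be reproduced without a browser. The sketch below uses Python's standard `xml.etree.ElementTree` as a stand-in for the DOM and XPath instead of CSS; the markup and the `data-testid="save"` attribute are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup before and after someone adds a "Help" button.
BEFORE = (
    "<div><button>Cancel</button><button>Back</button>"
    "<button data-testid='save'>Save</button></div>"
)
AFTER = (
    "<div><button>Help</button><button>Cancel</button><button>Back</button>"
    "<button data-testid='save'>Save</button></div>"
)


def by_position(markup):
    """Positional lookup: the third button child, whatever it happens to be."""
    root = ET.fromstring(markup)
    return root.find("button[3]").text


def by_test_id(markup):
    """Attribute lookup: the element tagged for testing, wherever it sits."""
    root = ET.fromstring(markup)
    return root.find(".//*[@data-testid='save']").text


print(by_position(BEFORE), by_position(AFTER))  # the positional result changes
print(by_test_id(BEFORE), by_test_id(AFTER))    # the test-ID result does not
```

After the extra button is added, the positional lookup silently returns a different element, while the attribute lookup still finds the one the test meant.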

These causes aren't independent. A test might use a brittle selector that works fine when the page loads quickly, but fails when there's network lag because it targets the wrong element during a loading state.

Flakiness Reduction Techniques: A Debugging Story

Let me show you how to fix flaky tests by walking through a real debugging session.

The Problem: A Login Test That Fails Randomly

I had a test that logged in, navigated to the dashboard, and verified the user's name appeared. It passed most of the time. Every fifth run, it failed with "Element not found."

Bad approach (what I tried first):

    # BAD: Fixed sleep - the lazy solution
    import time

    from selenium.webdriver.common.by import By

    # `driver` is assumed to be a Selenium WebDriver created elsewhere (e.g. a pytest fixture)
    def test_login(driver):
        driver.get("https://app.example.com/login")
        driver.find_element(By.ID, "email").send_keys("user@example.com")
        driver.find_element(By.ID, "password").send_keys("password123")
        driver.find_element(By.CSS_SELECTOR, "button[type='submit']").click()

        time.sleep(5)  # "Just wait 5 seconds, that should be enough"

        username = driver.find_element(By.CSS_SELECTOR, "div.header > span:nth-child(2)").text
        assert username == "John Doe"

This "worked" for a while. Then it started failing again. Sometimes the page loaded in 3 seconds, sometimes in 7. The fixed sleep was a guess, not a solution.

Good approach (what actually fixed it):

    # GOOD: Dynamic wait + stable selector
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    # `driver` is assumed to be a Selenium WebDriver created elsewhere (e.g. a pytest fixture)
    def test_login(driver):
        driver.get("https://app.example.com/login")
        driver.find_element(By.ID, "email").send_keys("user@example.com")
        driver.find_element(By.ID, "password").send_keys("password123")
        driver.find_element(By.CSS_SELECTOR, "button[type='submit']").click()

        # Wait for the actual element we need, with a timeout
        wait = WebDriverWait(driver, 10)
        username_element = wait.until(
            EC.presence_of_element_located((By.CSS_SELECTOR, "[data-testid='user-name']"))
        )

        assert username_element.text == "John Doe"

This version waits intelligently. It polls for the element every 500ms (the WebDriverWait default), up to 10 seconds. The moment the element appears, it proceeds. If the page loads in 2 seconds, the test waits 2 seconds. If it takes 8 seconds, the test waits 8 seconds.
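Conceptually, a dynamic wait is a polling loop with a deadline. The helper below is a simplified sketch of that idea in plain Python, not Selenium's actual implementation; `wait_until` and its parameters are names chosen for illustration.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call condition() repeatedly until it returns something truthy.

    Returns that value as soon as it appears, or raises TimeoutError
    once `timeout` seconds have passed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

The test pays only for the time the condition actually takes, and the timeout bounds the worst case, which is the difference from a fixed `time.sleep(5)`.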

The Selector Problem

The CSS selector div.header > span:nth-child(2) was brittle. When the design team added an icon to the header, the span became the third child. The test broke.

The fix: Use data attributes specifically for testing.

Bad selector (brittle):

    // BAD: Position-based selector that breaks with layout changes
    const username = await page.locator('div.header > span:nth-child(2)').textContent();

Good selector (stable):

    // GOOD: Test ID that survives design changes
    const username = await page.locator('[data-testid="user-name"]').textContent();

The test ID doesn't care about position, styling, or class names. It targets the specific element you need.

Network and API Waits

The test was also racing against an API call. The page rendered the header, but the API call to fetch the user's name hadn't completed. The element existed, but was empty.

Playwright solution (network idle):

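    // Option 1: wait for the network to be idle during navigation
    // (Playwright's docs discourage relying on this; prefer waiting for something specific)
    await page.goto('https://app.example.com/dashboard', {
      waitUntil: 'networkidle'
    });

    // Option 2: wait for a specific API call to complete.
    // Start waiting BEFORE navigating, or the response can arrive before anyone is listening.
    const userResponse = page.waitForResponse(response =>
      response.url().includes('/api/user') && response.status() === 200
    );
    await page.goto('https://app.example.com/dashboard');
    await userResponse;

    const username = await page.locator('[data-testid="user-name"]').textContent();
    expect(username).toBe('John Doe');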
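In the Selenium version of the test, the "element exists but is still empty" case can be handled with a custom wait condition. `WebDriverWait.until` accepts any callable that takes the driver and returns a truthy value when the wait is over; the `ElementHasText` class below is a hypothetical helper written for this example, not part of Selenium.

```python
class ElementHasText:
    """Wait condition: the located element exists AND has non-empty text."""

    def __init__(self, locator):
        # locator is a (strategy, value) pair, e.g.
        # ("css selector", "[data-testid='user-name']");
        # "css selector" is the string value of Selenium's By.CSS_SELECTOR.
        self.locator = locator

    def __call__(self, driver):
        element = driver.find_element(*self.locator)
        # Return the element once it has text; False tells the wait to keep polling.
        return element if element.text.strip() else False


# Intended usage with a real driver (assumes Selenium is installed):
#   from selenium.webdriver.support.ui import WebDriverWait
#   locator = ("css selector", "[data-testid='user-name']")
#   element = WebDriverWait(driver, 10).until(ElementHasText(locator))
#   assert element.text == "John Doe"
```

This keeps the wait tied to what the assertion actually needs (rendered text) rather than to the mere presence of the element.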

Best Practices for Stable Tests

1. Test Isolation

Each test should be completely independent. It should create its own test data, run, and clean up after itself.

Bad: Tests depend on each other:

    # BAD: Test 2 depends on Test 1 creating the user
    def test_1_create_user():
        create_user("test@example.com")

    def test_2_login_user():
        login("test@example.com")  # Fails if test_1 didn't run first

Good: Each test is self-contained:

    # GOOD: Each test sets up and tears down its own data
    def test_create_user():
        user = create_user("create@example.com")
        assert user.email == "create@example.com"
        cleanup_user(user)

    def test_login_user():
        user = create_user("login@example.com")  # Create fresh data
        login_result = login(user.email)
        assert login_result.success
        cleanup_user(user)

Use setup and teardown hooks:

    import uuid

    import pytest

    @pytest.fixture
    def test_user():
        # Setup: Create a uniquely named user before the test
        user = create_user(f"test_{uuid.uuid4()}@example.com")
        yield user
        # Teardown: Delete the user after the test, even if the test failed
        cleanup_user(user)

    def test_login(test_user):
        result = login(test_user.email)
        assert result.success

2. Idempotent Test Design

Tests should produce the same result no matter how many times you run them. They shouldn't fail because previous runs left data behind.

Use unique identifiers for test data:

    // Generate unique test data each run
    import { randomUUID } from 'node:crypto';

    const testEmail = `test_${Date.now()}_${Math.random()}@example.com`;
    const testProduct = `Test Product ${randomUUID()}`;

3. Retry Mechanisms

Some flakiness is unavoidable: network blips, infrastructure hiccups, cosmic rays. Retry mechanisms catch these edge cases without hiding real bugs.

    # Retry flaky operations, not entire tests
    import requests
    from tenacity import retry, stop_after_attempt, wait_exponential

    # Up to 3 attempts, with exponential backoff between 1 and 10 seconds
    @retry(stop=stop_after_attempt(3), wait=wait_exponential(multiplier=1, min=1, max=10))
    def fetch_user_data(user_id):
        response = requests.get(f"https://api.example.com/users/{user_id}", timeout=5)
        response.raise_for_status()
        return response.json()

Important: Retry the flaky operation (like an API call), not the entire test. If you retry the whole test, you might be hiding bugs instead of handling instability.

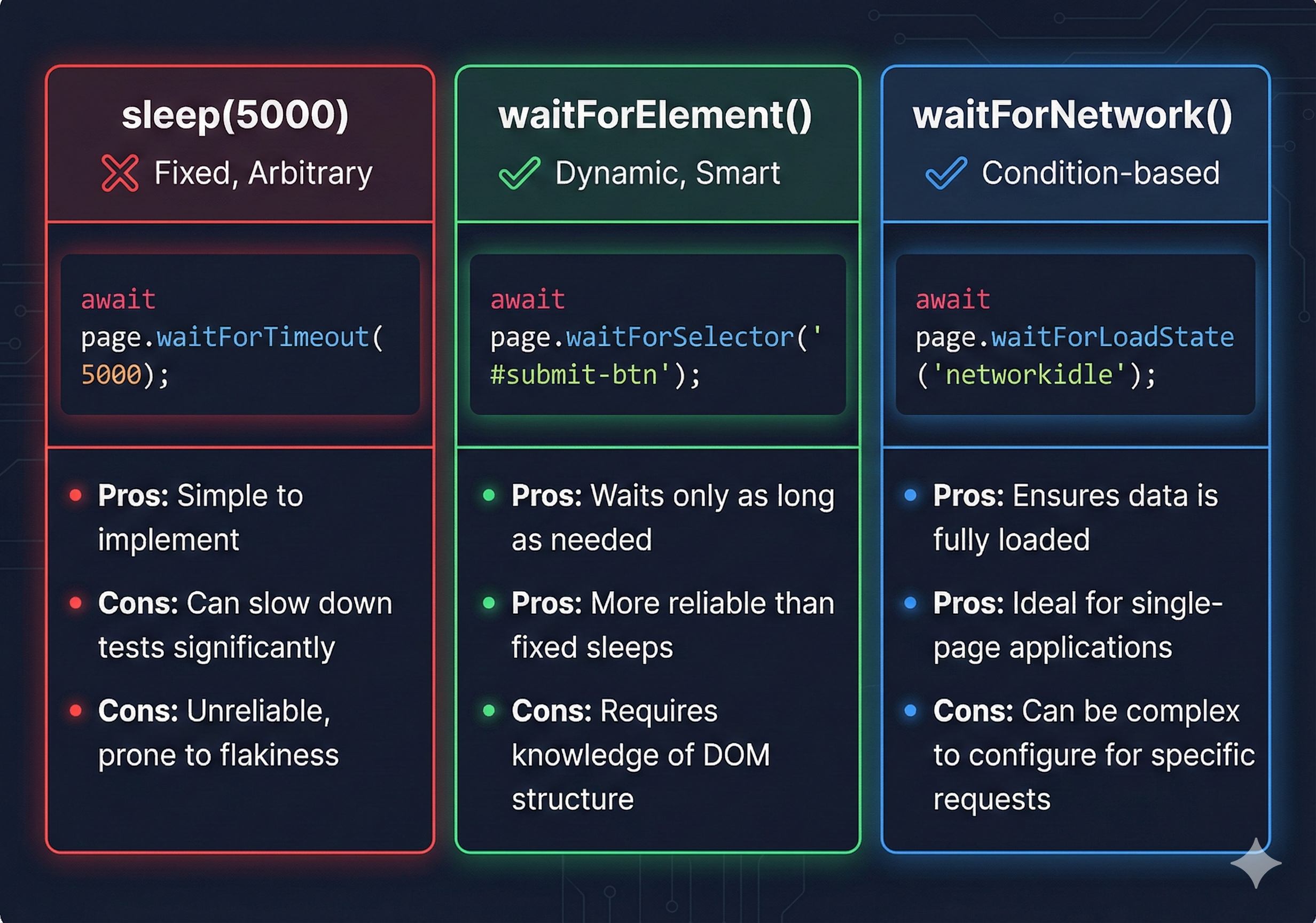

4. Explicit Waits Over Implicit Waits

Configure explicit waits for specific conditions, not global timeouts.

    // BAD: One blanket timeout applied to every action and wait
    page.setDefaultTimeout(30000);

    // GOOD: Explicit wait for a specific condition
    await page.waitForSelector('[data-testid="dashboard-loaded"]', {
      state: 'visible',
      timeout: 10000
    });

5. Component Reusability

Extract common flows into reusable functions. When you fix flakiness in the function, every test that uses it benefits.

    // Reusable login function with proper waits
    import { test, type Page } from '@playwright/test';

    async function login(page: Page, email: string, password: string) {
      // Relative URLs assume baseURL is set in the Playwright config
      await page.goto('/login');
      await page.fill('[data-testid="email"]', email);
      await page.fill('[data-testid="password"]', password);
      await page.click('[data-testid="login-button"]');

      // Wait for login to complete
      await page.waitForURL('/dashboard');
      await page.waitForSelector('[data-testid="user-menu"]', { state: 'visible' });
    }

    // Use it in every test that needs authentication
    test('view profile', async ({ page }) => {
      await login(page, 'user@example.com', 'password123');
      await page.click('[data-testid="user-menu"]');
      await page.click('[data-testid="profile-link"]');
      // ... rest of test
    });

When you discover a flakiness issue in login, you fix it once in the function, not in 47 different tests.

The Path Forward

Fighting flakiness is ongoing work. Every new feature, every UI change, every third-party integration introduces new opportunities for flakiness.

The techniques in this chapter (dynamic waits, stable selectors, test isolation, retry mechanisms) reduce flakiness from a constant problem to an occasional annoyance.

But there's another approach emerging. AI-powered testing tools can automatically detect and fix flakiness patterns. They learn which waits work, which selectors are stable, and how to handle loading states without manual configuration.

We'll explore this in Chapter 8: How AI is changing test automation, including how autonomous testing handles flakiness automatically.

For now, apply these practices to your existing tests. Replace fixed sleeps with dynamic waits. Add test IDs to your application. Make your tests independent. Your future self (and your team) will thank you.

Frequently Asked Questions

How do I know if my test is flaky?

Run it 20 times in a row. If it fails even once without code changes, it's flaky. Use your CI system to track pass/fail rates over time; any test with a pass rate below 95% needs investigation.
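As a rough sketch of the "run it 20 times" check, the helper below calls a test function repeatedly and reports its pass rate. `measure_pass_rate` and `make_flaky_test` are illustrative names, and the simulated test fails on every fifth call so that the result is deterministic; a real flaky test would fail less predictably.

```python
def measure_pass_rate(test_fn, runs=20):
    """Run test_fn `runs` times and return the fraction of runs that passed."""
    passes = 0
    for _ in range(runs):
        try:
            test_fn()
            passes += 1
        except Exception:
            # Any exception (assertion or otherwise) counts as a failed run.
            pass
    return passes / runs


def make_flaky_test(fail_every=5):
    """Build a stand-in test that fails on every `fail_every`-th call."""
    calls = {"n": 0}

    def flaky_test():
        calls["n"] += 1
        assert calls["n"] % fail_every != 0, "simulated intermittent failure"

    return flaky_test


rate = measure_pass_rate(make_flaky_test(fail_every=5), runs=20)
print(f"pass rate: {rate:.0%}")
```

With pytest, repeating the real test in a shell loop or with a repeat plugin gives the same information without a custom helper.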

Should I retry flaky tests automatically in CI?

Retry specific operations (API calls, waits) but not entire tests. If you automatically retry failed tests 3 times, you're hiding problems instead of fixing them. Your test suite will show green while bugs slip through.

What's the difference between implicit and explicit waits?

Implicit waits apply globally to all element lookups with a fixed timeout. Explicit waits target specific conditions with custom timeouts. Use explicit waits: they're more precise and don't slow down tests that don't need to wait.

How long should my waits be?

Long enough to catch slow cases, short enough to fail fast. For page loads, 10-15 seconds is reasonable. For element visibility, 5-10 seconds. For API calls, match your actual timeout configuration. Never use waits longer than 30 seconds; needing one is a symptom of a different problem.

Can I eliminate all test flakiness?

No. But you can reduce it to <1% failure rate. Some flakiness is environmental (cloud provider hiccups, network issues, CI runner problems). Focus on eliminating systematic flakiness (timing, selectors, dependencies) first.

What should I do about tests that fail in CI but pass locally?

This usually means the environments differ. CI might have a slower CPU or network, a different browser version, a different viewport size, or a different time zone. Run your tests locally under CI-like conditions (slow network simulation, small viewport) to reproduce the issue.

Are flaky tests worse than no tests?

Yes, in some ways. Flaky tests erode trust in your entire test suite. When developers learn to ignore failures, they stop investigating real bugs. Better to disable a flaky test temporarily while you fix it than to leave it failing randomly.

How do I convince my team to invest time fixing flaky tests?

Track the cost. Measure how much time your team spends re-running CI, investigating false failures, and debugging test issues. Compare that to the time it would take to fix the root causes. Most teams spend 10-20 hours per month on flakiness, enough to justify dedicated fix-it time.
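A back-of-the-envelope version of that calculation might look like the sketch below. Every input is a hypothetical placeholder to be replaced with numbers from your own CI history.

```python
def monthly_flakiness_hours(reruns_per_week, minutes_per_rerun,
                            investigation_hours_per_week, weeks_per_month=4):
    """Estimate hours per month lost to re-running CI and chasing false failures."""
    rerun_hours_per_week = reruns_per_week * minutes_per_rerun / 60
    return (rerun_hours_per_week + investigation_hours_per_week) * weeks_per_month


# Hypothetical team: 15 CI re-runs a week at 12 minutes each,
# plus 1 hour a week investigating failures that turn out to be false alarms.
hours = monthly_flakiness_hours(
    reruns_per_week=15,
    minutes_per_rerun=12,
    investigation_hours_per_week=1,
)
print(f"{hours:.0f} hours per month")
```

Putting the same inputs next to an estimate of the fix effort makes the trade-off concrete for whoever owns the schedule.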
