AI-Powered Software Testing: The Future is Here with Autonoma

Quick summary: AI-powered testing with Autonoma understands intent rather than recording actions, enabling self-healing tests that adapt automatically to UI changes. Tests are written as step-by-step natural language instructions (not code), eliminating brittle selectors and maintenance overhead. Real-world results show 10x faster delivery with proactive incident detection. Companies can create production-ready tests in under 10 minutes and scale across critical user journeys without hiring QA specialists.

Introduction

You've built tests. You've maintained them. You've watched them break.

You learned about test types and chose E2E testing. You picked Playwright over Cypress. You wrote your first automated test. It worked. Then the button moved. The test failed. You fixed it. A week later, the form changed. The test failed again. You fixed it again.

You added waits to handle flakiness. You refactored selectors for maintainability. You built a framework for reusability. Each solution created new problems. Your test suite grew. So did the maintenance burden.

There's a better way: AI-powered software testing.

The Evolution of Testing

Manual testing was slow and expensive. Automation made testing faster. It also made testing brittle.

Traditional automation scripts every interaction. Click this element. Type in that field. Assert this text appears. When the UI changes, and it always changes, the script breaks. You update selectors. You adjust waits. You fix flaky tests. The cycle repeats.

The problem isn't automation. The problem is how we automate. Traditional tools record actions. AI understands intent.

How AI Changes Testing

AI doesn't just speed up test creation. It fundamentally changes what testing can be.

Understanding Intent vs Recording Actions

Traditional automation records every detail. "Find the button with class 'btn-primary' at position [240, 180], wait 5 seconds, then click." When the button moves or the class name changes, the test fails.

AI captures intent instead. "Click the submit button." The AI understands what a submit button is, by label, position, context, or behavior. When the button moves, the AI finds it. When the class changes, the AI adapts. The test keeps working.

This isn't about smarter selectors. It's about not using selectors at all.

Self-Healing: Tests That Fix Themselves

Here's what a traditional Playwright test looks like:

    import { test, expect } from '@playwright/test';

    test('user can complete checkout', async ({ page }) => {
      await page.goto('https://shop.example.com');

      // Brittle selector - breaks when HTML changes
      await page.locator('#product-grid > div:nth-child(3) > button.add-to-cart').click();

      // Hard-coded wait - causes flakiness
      await page.waitForTimeout(2000);

      // Specific class name - breaks when CSS refactors
      await page.locator('.cart-icon.header-cart').click();

      // Exact text match - breaks with copy changes
      await page.locator('button:has-text("Proceed to Checkout")').click();

      await page.locator('#email').fill('test@example.com');
      await page.locator('#card-number').fill('4242424242424242');

      // Another brittle selector
      await page.locator('button[type="submit"].checkout-button').click();

      // Assertion breaks if wording changes
      await expect(page.locator('.success-message')).toHaveText('Order confirmed!');
    });

This test works. Until the designer moves the cart icon. Or marketing changes "Proceed to Checkout" to "Continue to Payment." Or engineering refactors the CSS classes. Each change breaks the test.

Now compare that to describing the same test in Autonoma:

Test: User can complete checkout

1. Go to the shop homepage

2. Add the third product to cart

3. Open the cart

4. Proceed to checkout

5. Fill in email as test@example.com

6. Fill in card number as 4242424242424242

7. Complete the purchase

8. Verify order confirmation appears

The difference is intent vs implementation. Autonoma's AI understands "add the third product to cart" regardless of selector structure. It knows "open the cart" whether the cart is an icon, a button, or a dropdown. It finds "proceed to checkout" whether the text says "Proceed," "Continue," or "Next."

When the UI changes, traditional tests break and wait for manual fixes. Autonoma tests adapt automatically.

Intelligent Flakiness Reduction

Remember fighting flaky tests with arbitrary waits? await page.waitForTimeout(5000) worked sometimes. Other times it didn't. You tried waitForSelector. You tried waitForLoadState. Each solution worked for some cases and failed for others.

AI-powered testing eliminates most flakiness by understanding context. Instead of waiting for an element to exist, the AI waits for the page to be ready for interaction. Instead of clicking immediately, it ensures the element is actually clickable: not just present, but loaded, visible, enabled, and stable.

Traditional tools check if an element exists. AI checks if an element is ready. That subtle shift eliminates the majority of flaky test failures.
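To make the exists-versus-ready distinction concrete, here is a minimal TypeScript sketch. It is not Autonoma's implementation. The `ElementSnapshot` shape and the `elementExists`, `isReady`, and `firstIndexWhere` helpers are hypothetical names invented for this illustration. The sketch models a button polled four times while it animates into place, then compares when a presence check and a readiness check would each let a click proceed.

```typescript
// Hypothetical snapshot of one element at one polling instant.
// Field names are assumptions for illustration, not a real tool's API.
interface ElementSnapshot {
  attached: boolean; // present in the DOM
  visible: boolean;  // rendered and not hidden
  enabled: boolean;  // not disabled
  box: { x: number; y: number; width: number; height: number } | null;
}

// True when both boxes are known and identical, i.e. the element stopped moving.
function sameBox(a: ElementSnapshot["box"], b: ElementSnapshot["box"]): boolean {
  if (a === null || b === null) return false;
  return a.x === b.x && a.y === b.y && a.width === b.width && a.height === b.height;
}

// Weak check: the element is merely present.
function elementExists(_previous: ElementSnapshot | undefined, current: ElementSnapshot): boolean {
  return current.attached;
}

// Strong check: attached, visible, enabled, and stable
// (same bounding box as in the previous poll).
function isReady(previous: ElementSnapshot | undefined, current: ElementSnapshot): boolean {
  if (previous === undefined) return false; // two polls are needed to judge stability
  return current.attached && current.visible && current.enabled && sameBox(previous.box, current.box);
}

type Check = (previous: ElementSnapshot | undefined, current: ElementSnapshot) => boolean;

// Index of the first poll at which the check passes, or -1 if it never does.
function firstIndexWhere(polls: ElementSnapshot[], check: Check): number {
  for (let i = 0; i < polls.length; i++) {
    const previous = i > 0 ? polls[i - 1] : undefined;
    if (check(previous, polls[i])) return i;
  }
  return -1;
}

// A button that is attached first, then shown, then enabled while still sliding, then at rest.
const polls: ElementSnapshot[] = [
  { attached: true, visible: false, enabled: false, box: null },
  { attached: true, visible: true, enabled: false, box: { x: 0, y: 100, width: 80, height: 30 } },
  { attached: true, visible: true, enabled: true, box: { x: 40, y: 100, width: 80, height: 30 } },
  { attached: true, visible: true, enabled: true, box: { x: 40, y: 100, width: 80, height: 30 } },
];

console.log(firstIndexWhere(polls, elementExists)); // 0: a presence check would click right away
console.log(firstIndexWhere(polls, isReady));       // 3: a readiness check waits until the button settles
```

In this model, the gap between poll 0 and poll 3 is where a click can land on a hidden, disabled, or moving element and fail intermittently.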

Our Approach: Testing Without Code

Of course, we had to include our own tool. We built Autonoma because we experienced every problem described in this course. We maintained brittle test suites. We debugged flaky tests at 2 AM. We watched productivity sink as test maintenance grew.

So we built something different.

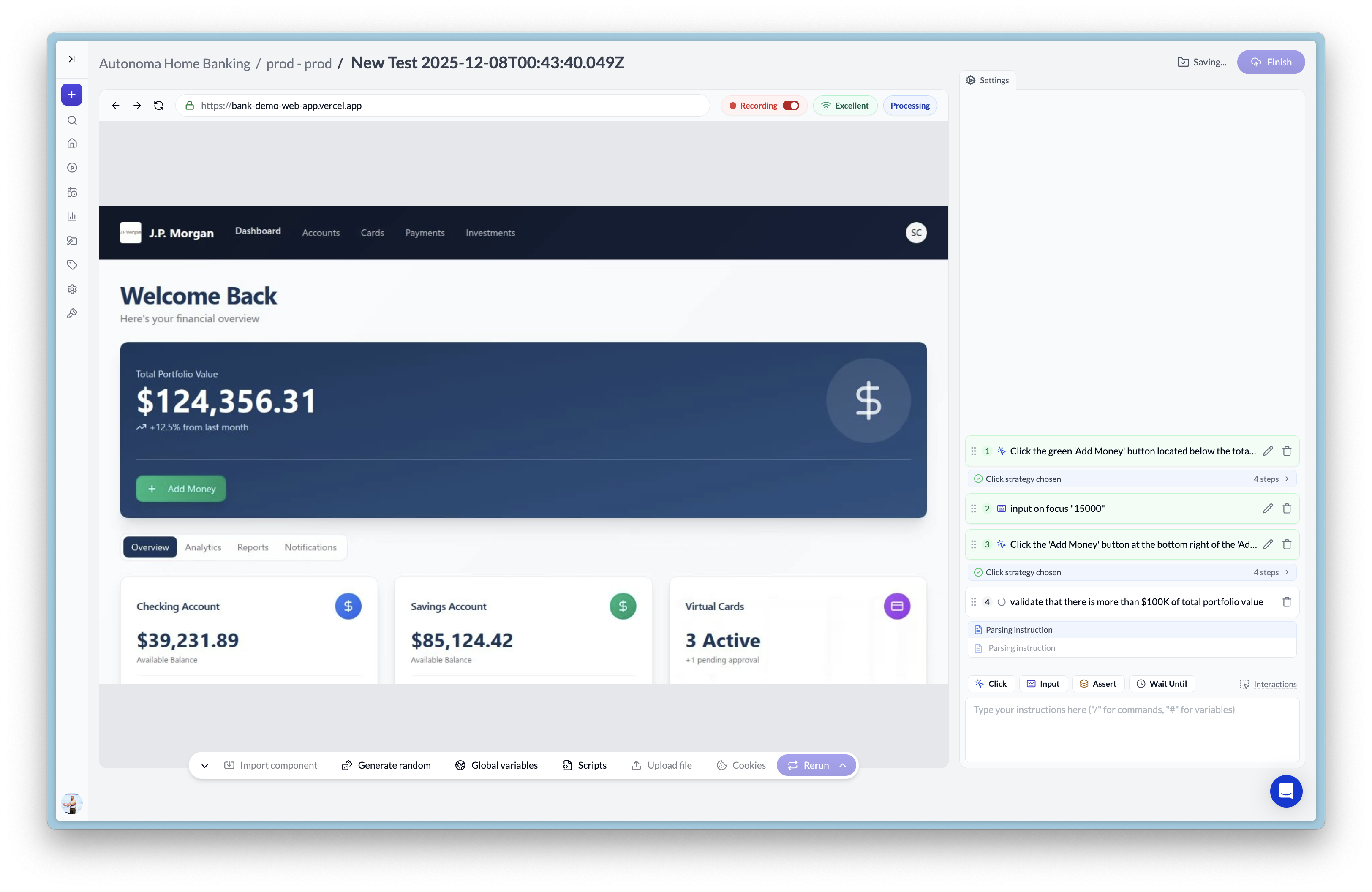

Create Tests by Showing, Not Coding

You don't write Playwright scripts. You don't configure Selenium. You show Autonoma your application and describe what to test in plain language.

"Go to the login page. Enter valid credentials. Verify the dashboard appears."

That's it. Autonoma records your interactions, understands the intent, and creates a test. The entire process takes under 10 minutes. That's not 10 minutes per test: it's 10 minutes to create your first production-ready test, including setup.

Natural Language Test Descriptions

Traditional automation requires understanding selectors, async/await, page objects, fixtures, and test framework APIs. Autonoma requires understanding what you want to test.

Instead of:

    await page.locator('[data-testid="search-input"]').fill('laptop');
    await page.locator('button[aria-label="Search"]').click();
    await expect(page.locator('.product-grid')).toBeVisible();

You write:

Search for "laptop" and verify results appear

The AI handles finding elements, waiting appropriately, and verifying outcomes. When the search input's data attribute changes from data-testid="search-input" to data-testid="search-field", traditional tests fail. Autonoma tests keep working.

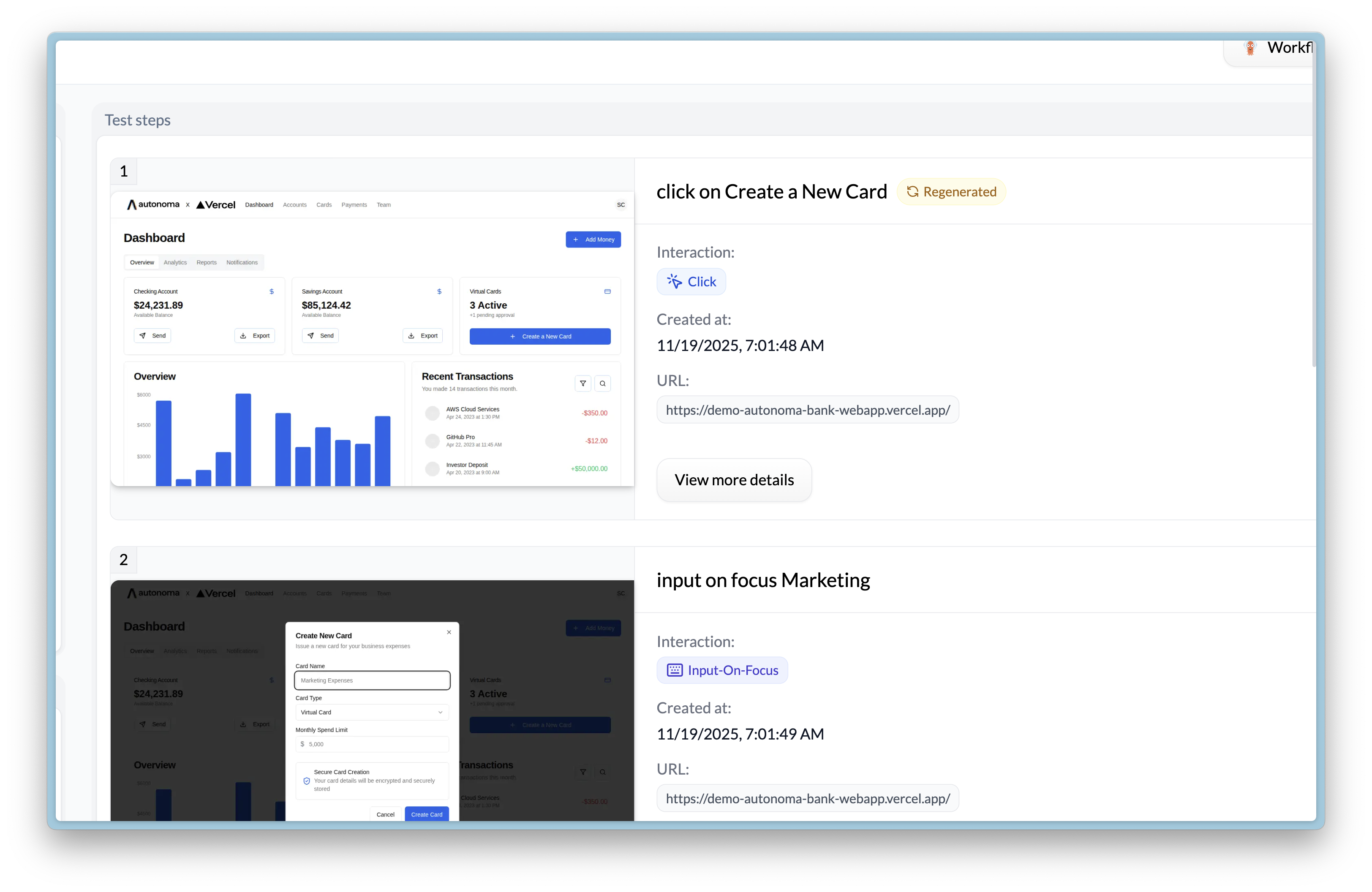

Self-Healing in Action

Self-healing isn't magic. It's intelligence. When Autonoma encounters a UI change, it uses multiple strategies to adapt:

- Semantic understanding: The AI knows a "submit button" can be labeled "Submit," "Send," "Continue," or "Confirm"

- Visual context: If a button moves, the AI finds it by understanding surrounding elements and layout

- Behavioral patterns: The AI recognizes standard UI patterns like dropdowns, modals, and navigation menus

- Intent preservation: The AI focuses on what you want to accomplish, not the specific path to accomplish it

This is why traditional automation requires constant maintenance while Autonoma tests remain stable across UI changes.
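One way to picture how several of these strategies could combine is a scoring function over candidate elements. The TypeScript sketch below is an illustration only, not how Autonoma works internally. The `Candidate` and `Intent` types, the `resolveIntent` helper, the weights, and the threshold are all hypothetical. Role acts as a hard filter (a stand-in for behavioral patterns), a synonym match stands in for semantic understanding, and distance from the last known position stands in for visual context.

```typescript
// All types, weights, and thresholds here are hypothetical, chosen for illustration.
interface Candidate {
  id: string;
  role: "button" | "link" | "textbox";
  label: string;
  x: number;
  y: number;
}

interface Intent {
  role: Candidate["role"];
  synonyms: string[];                   // labels that express the same intent
  lastKnown?: { x: number; y: number }; // position on the last passing run
}

function scoreCandidate(intent: Intent, c: Candidate): number {
  // Behavioral pattern: the wrong kind of control is never a match.
  if (c.role !== intent.role) return 0;
  let score = 0;
  // Semantic understanding: the label contains any accepted synonym (case-insensitive).
  const label = c.label.trim().toLowerCase();
  if (intent.synonyms.some((s) => label.includes(s.toLowerCase()))) score += 2;
  // Visual context: closer to the last known position adds up to 1 point.
  if (intent.lastKnown) {
    const dist = Math.hypot(c.x - intent.lastKnown.x, c.y - intent.lastKnown.y);
    score += 1 / (1 + dist / 100);
  }
  return score;
}

// Returns the best-scoring candidate, or null when nothing reaches minScore.
// With the weights above, minScore = 2 means a label match is required.
function resolveIntent(intent: Intent, candidates: Candidate[], minScore = 2): Candidate | null {
  let best: Candidate | null = null;
  let bestScore = -Infinity;
  for (const c of candidates) {
    const s = scoreCandidate(intent, c);
    if (s > bestScore) {
      best = c;
      bestScore = s;
    }
  }
  return bestScore >= minScore ? best : null;
}

// "Proceed to checkout" after a redesign renamed and moved the button.
const checkoutIntent: Intent = {
  role: "button",
  synonyms: ["proceed", "continue", "next"],
  lastKnown: { x: 900, y: 600 },
};

const afterRedesign: Candidate[] = [
  { id: "keep-shopping", role: "button", label: "Continue shopping", x: 100, y: 600 },
  { id: "pay", role: "button", label: "Continue to Payment", x: 880, y: 640 },
  { id: "help", role: "link", label: "Next steps", x: 900, y: 600 },
];

console.log(resolveIntent(checkoutIntent, afterRedesign)?.id); // "pay"
```

Returning `null` when no candidate clears the threshold matters as much as finding a match: a resolver that always picks something would turn a real regression into a false pass.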

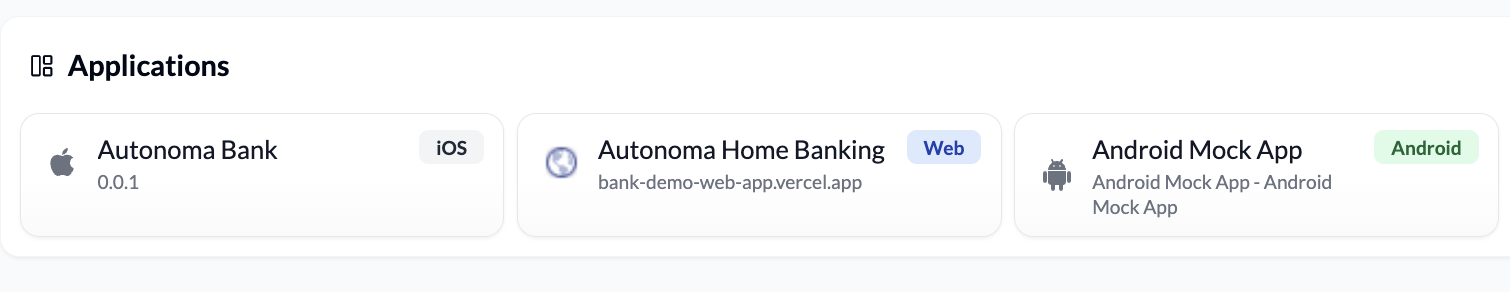

Multi-Platform Testing

Autonoma enables comprehensive mobile and web testing. For mobile apps, one test description works across both iOS and Android: describe "log in with valid credentials" once, and Autonoma runs it on both iPhone and Android devices automatically, mirroring the test execution across platforms.

Web testing uses a separate approach optimized for browser environments, with full support for Chrome, Safari, Firefox, and Edge. Note: Unified cross-platform testing (one test for mobile and web simultaneously) is coming soon. Currently, mobile tests run across iOS and Android, while web tests run independently across browsers.

Traditional automation requires separate implementations with different tools (Playwright for web, Appium for mobile) and distinct APIs for each platform, forcing teams to maintain multiple parallel test suites.

Built-In Best Practices

Autonoma includes best practices you'd otherwise need to implement manually:

- Accessibility validation: Every test automatically checks for accessibility issues. Missing alt text. Low contrast. Keyboard navigation problems. You get accessibility testing without writing accessibility tests.

- Visual regression: Screenshots captured automatically. Visual changes flagged for review. No need to configure visual testing tools or maintain baseline images.

- Intelligent waiting: No more arbitrary timeouts. The AI waits intelligently based on page state, network activity, and element readiness.

- Error context: When tests fail, you get full context: screenshots, console logs, network activity, and AI analysis of what went wrong.

These aren't add-ons. They're core features available from your first test.

Real-World Impact

The promise of AI testing is compelling. The reality is better.

Kavak: Proactive Incident Detection

Kavak, one of Latin America's largest used car platforms, needed to detect issues before customers encountered them. Previously, they relied on a SWAT team monitoring social media for user complaints and manually browsing their site to find problems.

With Autonoma, Kavak built proactive incident detection. Autonoma runs continuous tests in production, automatically creating Jira tickets when anomalies appear, before users are affected.

The impact? Significant reduction in user complaints. The SWAT team shifted from detecting website errors to improving customer experience during transactions. Issues that would have affected thousands of users are now caught and resolved before deployment.
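The step from "a production test failed" to "a ticket exists" can be sketched in a few lines of TypeScript. This is a hypothetical illustration, not Kavak's or Autonoma's actual pipeline, and it does not call any real Jira API. The `RunResult` and `TicketDraft` shapes and the `draftTickets` helper are invented here, and the deduplication rule (one ticket per journey and failing step) is an assumption about what a sensible setup might do.

```typescript
// Hypothetical shapes; not Autonoma's or Jira's actual data model.
interface RunResult {
  journey: string;     // e.g. "checkout"
  passed: boolean;
  failedStep?: string; // description of the step where the run stopped
  finishedAt: string;  // ISO timestamp
}

interface TicketDraft {
  key: string;         // dedup key so one incident yields one ticket
  summary: string;
  description: string;
}

// Turn failed runs into ticket drafts, skipping incidents that already have an open ticket.
function draftTickets(results: RunResult[], openKeys: Set<string>): TicketDraft[] {
  const drafts: TicketDraft[] = [];
  const seen = new Set(openKeys);
  for (const r of results) {
    if (r.passed) continue;
    const step = r.failedStep ?? "unknown step";
    const key = `${r.journey}::${step}`;
    if (seen.has(key)) continue; // already reported, by an open ticket or an earlier run
    seen.add(key);
    drafts.push({
      key,
      summary: `[${r.journey}] failed at: ${step}`,
      description: `First observed at ${r.finishedAt}.`,
    });
  }
  return drafts;
}

const recentRuns: RunResult[] = [
  { journey: "checkout", passed: false, failedStep: "Proceed to checkout", finishedAt: "2025-01-01T10:00:00Z" },
  { journey: "checkout", passed: false, failedStep: "Proceed to checkout", finishedAt: "2025-01-01T10:05:00Z" },
  { journey: "login", passed: true, finishedAt: "2025-01-01T10:05:00Z" },
  { journey: "search", passed: false, failedStep: "Verify results appear", finishedAt: "2025-01-01T10:06:00Z" },
];

// The search failure already has an open ticket, so only the checkout incident is drafted.
const newDrafts = draftTickets(recentRuns, new Set(["search::Verify results appear"]));
console.log(newDrafts.map((d) => d.summary)); // [ "[checkout] failed at: Proceed to checkout" ]
```

Without the dedup key, a test that runs every few minutes would file a new ticket on every failing run of the same incident.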

The State of QA 2025: Industry Transformation

Our State of QA 2025 report analyzed how companies at different growth stages approach quality assurance. The findings reveal a fundamental shift.

Traditional companies spend 3-5 days on manual regression testing every two weeks. Tech-native companies automate main flows but face constant maintenance. Both approaches bottleneck delivery speed.

AI-powered testing enables a 10x improvement in delivery speed. Not by making people work faster. By eliminating the bottlenecks that slow them down.

Companies using autonomous testing ship features continuously instead of in two-week sprints. They catch bugs in minutes instead of days. They scale testing without scaling QA headcount.

The data is clear: traditional QA approaches can't keep pace with modern development speed. AI-powered testing isn't an optimization; it's a requirement for competitive delivery velocity.

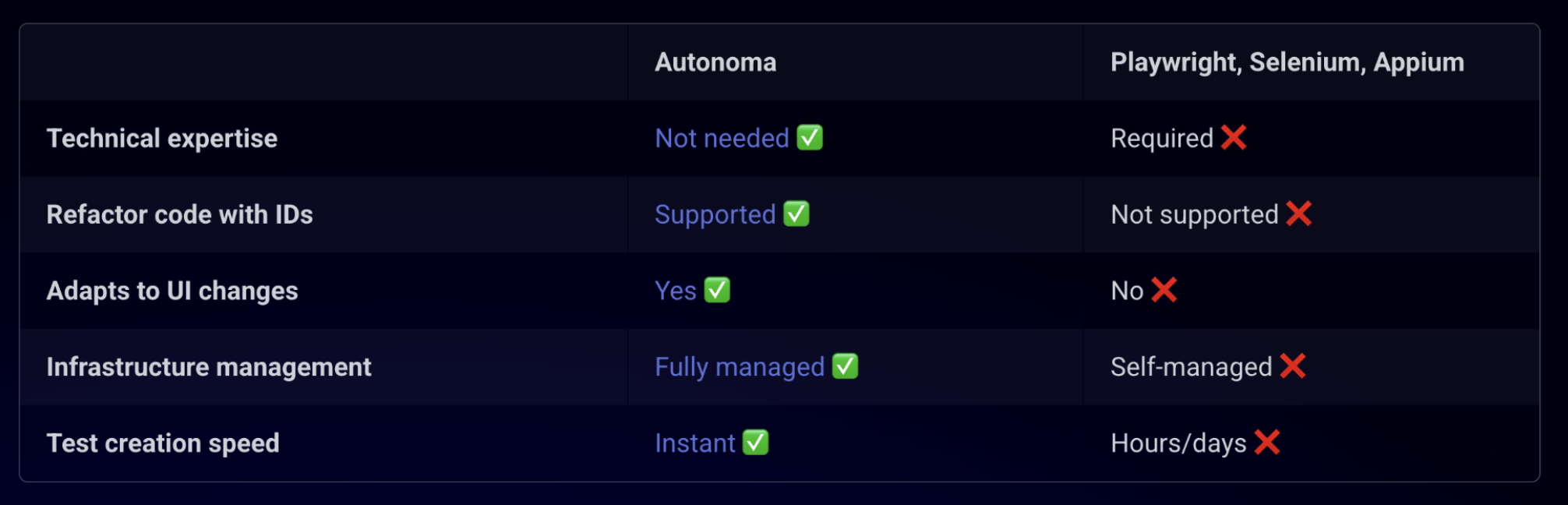

Traditional vs AI-Powered: The Real Comparison

The differences between traditional automation and AI-powered testing become clear when you examine practical realities:

Traditional automation gets expensive fast. Each new test adds maintenance burden. Each UI change requires updates across multiple tests. Each flaky test costs developer time investigating false failures.

AI-powered testing scales differently. The first test takes 10 minutes. The hundredth test takes 10 minutes. Maintenance doesn't grow linearly with test count because self-healing handles UI changes automatically.

The cost difference becomes dramatic at scale. A team maintaining 500 traditional automated tests might spend 40% of their time on test maintenance. The same team with 500 AI-powered tests spends that time building features instead.
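To put the 40% figure in concrete terms, here is a small worked calculation in TypeScript. Only the 40% share comes from the paragraph above. The team size (5 engineers) and the 40-hour week are assumptions picked for illustration, and the function names are hypothetical.

```typescript
// Weekly hours a team spends on test maintenance.
// maintenancePercent is a whole-number percentage (40 means 40%).
function maintenanceHoursPerWeek(engineers: number, hoursPerWeek: number, maintenancePercent: number): number {
  return (engineers * hoursPerWeek * maintenancePercent) / 100;
}

// The same cost expressed as full-time engineers.
function fullTimeEquivalents(engineers: number, maintenancePercent: number): number {
  return (engineers * maintenancePercent) / 100;
}

// Assumed inputs: 5 engineers working 40 hours a week. Only the 40% share is from the text.
console.log(maintenanceHoursPerWeek(5, 40, 40)); // 80
console.log(fullTimeEquivalents(5, 40));         // 2
```

Under those assumptions, maintenance consumes 80 hours a week, the equivalent of two of the five engineers doing nothing else.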

Getting Started with Autonoma

You can start testing with AI today. Here's how:

Step 1: Contact our team at sales@autonoma.app to set up your account. We'll get you onboarded quickly with personalized guidance for your use case.

Step 2: Create your first test. Point Autonoma at your application. Interact with it. Describe what you're testing in step-by-step natural language: "User can log in and view their dashboard."

Step 3: Run the test. Watch it execute. See it pass. Change something in your UI. Run it again. Watch it adapt automatically with AI-powered self-healing.

Step 4: Add more tests. Describe critical user journeys in plain language. Checkout flows. Search functionality. Account management. Each test takes minutes to create.

Step 5: Integrate with CI/CD. Connect Autonoma to your deployment pipeline. Tests run automatically on every commit. You get instant feedback on whether changes break user workflows.

The entire process, from onboarding to running tests in CI/CD, takes under an hour with our team's support. Compare that to traditional automation where setup alone can take weeks.

What to Test First

Start with your most critical user journey. The flow that generates revenue or represents core product value.

For e-commerce: checkout flow. For SaaS: signup and onboarding. For content platforms: search and content consumption.

Create one test for this critical flow. Run it. See it work. Then expand from there.

Don't try to achieve complete coverage immediately. Start with the test that matters most. The one that, if it broke, would cost you money or users.

Scaling Beyond First Tests

Once you validate Autonoma works for your critical flow, scaling is straightforward:

Add tests for secondary user journeys. Authentication. Settings changes. Data export. Each takes minutes.

Enable tests in your CI/CD pipeline. Get automatic test runs on every pull request. Catch regressions before they reach production.

Expand to edge cases. Error handling. Validation messages. Loading states. These tests are fast to create and maintain themselves.

The pattern is simple: identify what matters, describe it in plain language, let AI handle the complexity.

The Future of Testing is Autonomous

This is the last chapter of our Software Testing Basics course, but it's the beginning of how you'll actually test software.

You learned about test types and why E2E testing matters. You discovered when to choose Playwright over Cypress. You wrote your first automated test and learned how to structure it properly. You encountered flaky tests and maintenance challenges. You tried frameworks and patterns to manage complexity.

Each chapter taught a solution. Each solution created new problems. More code to maintain. More edge cases to handle. More time spent on tests instead of features.

AI-powered testing solves the fundamental problem: testing complexity that grows faster than team capacity. Self-healing tests eliminate maintenance. Natural language descriptions eliminate coding barriers. Intelligent execution eliminates flakiness. Built-in best practices eliminate the need for custom frameworks.

The question isn't whether to adopt AI-powered testing. It's whether you can afford not to while your competitors already have.

Next Steps

Get Started with Autonoma: Contact our team at sales@autonoma.app to create your first test and see AI-powered testing in action with personalized onboarding.

See it in action: Schedule a demo to see how Autonoma handles your specific testing challenges and eliminates maintenance overhead.

Learn more: Read about how Kavak uses Autonoma for proactive incident detection and explore the State of QA 2025 to understand industry trends.

Join the companies shipping 10x faster. The future of testing is here. It's autonomous. It's intelligent. It starts today.

Chapter 8 of 8: AI-Powered Software Testing ✓

🎓 Course Complete!

Congratulations on completing the Software Testing Basics course! You've learned:

- Testing fundamentals and why they matter

- Essential testing terminology (TDD, BDD, Gherkin)

- How to plan and organize tests effectively

- Test automation frameworks (Playwright, Selenium, Cypress, Appium)

- Page Object Model for maintainable architecture

- Techniques to reduce test flakiness

- Implementation in Python and JavaScript/TypeScript

- How AI is transforming testing
